Before You Start

Some of the math in these notes does not render correctly in Safari. I haven't tested it on any of the Microsoft-specific browsers. It is recommended that you view these notes in Firefox or Chrome.

Given the tools I use to generate these notes, I have little control over the scroll position of an anchor referenced by a link. Thus, when you follow a link, the referenced element will almost always appear just above the visible portion of the page. Remember to scroll up. I need to figure out a way to fix this, but right now finishing these notes is more important.

The material in these notes goes beyond what we cover in class. Sections that are marked with a star (*) are not covered in class; you can read them if you wish, and I am more than happy to discuss the material they cover with you, but you are not required to read them. The sections that are not marked are material we cover in class.

Some of the figures contain a lot of information and are scaled down to fit the width of a text column. If you hover the mouse over any figure, it gets scaled up, so figures should become easier to read.

1. Overview

1.1. Topics Covered

A one-semester course on advanced algorithms is necessarily incomplete, both in terms of the choice of topics covered and in terms of the depth in which it covers the topics that it does cover. I chose the topics for this course based on two criteria: First, the studied problems should have practical relevance, where "practical" is to be interpreted quite generally; the algorithms should be useful either for tackling problems arising in real-world applications or as building blocks for other algorithms. Second, the techniques used in developing these algorithms should be interesting and instructive. Based on these two criteria, there are three major themes this course will cover.

Advanced graph algorithms are used as building blocks of many other algorithms. It is amazing how many problems reduce to network flow or matching problems. So the first part of the course focuses on studying efficient algorithms for these problems. Before covering advanced graph algorithms, we discuss (integer) linear programming because it is a useful tool for modelling a wide range of optimization problems and is used as a building block for some of the algorithms discussed in this class.

Classical algorithm theory views NP-hardness as a "death sentence" for a problem in the sense that no "efficient" (i.e., polynomial-time) algorithm for such a problem is likely to exist. This view is too limited for at least two reasons: First, advances in the design of algorithms have led to very efficient solutions to many NP-hard problems, albeit obviously not polynomial-time solutions. Remember that polynomial time is just an approximation of efficiency; there exist very inefficient polynomial-time algorithms and rather efficient exponential-time algorithms. Second, there is a great need for algorithms that solve NP-hard problems because an increasing number of application areas give rise to a wealth of NP-hard problems that simply need to be solved. The second part of this course thus focuses on techniques for coping with NP-hardness. This part is divided into two components. The first one is concerned with approximation algorithms. If an optimization problem is NP-hard, we should not hope to find an optimal solution in polynomial time. However, what if an approximate solution is good enough in a particular application? How well can the optimal solution be approximated in polynomial time? This is the central question answered by the theory of approximation algorithms. The second component covers two types of algorithms that both find exact answers to NP-hard problems and thus necessarily take exponential time. Just as a linear-time algorithm is preferable over a quadratic-time algorithm, even though both take polynomial time, an \(O\bigl(1.1^n\bigr)\)-time algorithm is obviously much better than one that takes \(O\bigl(4^n\bigr)\) time. Exact exponential algorithms have the goal to achieve the fastest possible exponential running time for solving an NP-hard problem. Fixed-parameter algorithms go a step further in that they aim to achieve a running time that is polynomial in the input size and exponential only in some hardness parameter of the input; for an NP-hard problem, this hardness parameter must be linear in the input size for some inputs, but the hope is that for many inputs, particularly those arising in certain applications, the parameter is much smaller than \(n\). Since there is a lot of overlap between the techniques used in designing exact exponential algorithms and fixed-parameter algorithms, we discuss them together.

There are a number of topics that fully deserve to be covered in this course but which I chose not to cover:

Advanced data structures. Almost all non-trivial algorithms rely on support from good data structures, so data structures are of tremendous importance. There is a simple reason why advanced data structures are not a major focus of this course: Meng He already offers an advanced data structures course, which you are strongly encouraged to take.

Parallel algorithms. Multicore processors and massively parallel systems based on cloud architectures or GPUs have become ubiquitous. Designing parallel algorithms for such systems poses a whole new layer of challenges that are of no concern when designing traditional, sequential algorithms. Once again, parallel algorithms are not a focus of this course because there already is a parallel computing course in our Faculty. Designing efficient parallel algorithms can require a very different way of thinking about problem decomposition than when designing sequential algorithms. You are strongly encouraged to take the parallel computing course to learn about these questions.

Computational geometry. This is probably the least justified omission. Computational geometry is a treasure trove of interesting problems and techniques to solve them. Many widely applicable data structuring techniques were developed in the context of developing data structures for geometric problems. However, it would be simply impossible to cover graph algorithms, approximation and exact exponential algorithms, and computational geometry in the same course without reducing the exposition of each topic to a mere scratch of the surface. In my personal opinion, network flows, cuts, and matchings are more fundamental, with a wider range of applications than many geometric algorithms, and coming to terms with NP-hardness also seems to be of tremendous importance nowadays. So, purely subjectively, I decided not to cover geometric algorithms in this course, even though computational geometry is full of beautiful ideas and algorithms based on them.

Memory hierarchies. While today's processors have the computing power of supercomputers of the 1980s, memory access speeds have not increased nearly as fast. As a result, it is challenging to supply modern CPUs with data at the rate at which they can process it. To bridge the widening gap between processor and memory speeds, modern computers have a memory hierarchy consisting of several layers of cache, RAM, and hard disks or SSDs. The farther away a memory level is from the CPU, the larger it is, but its access cost also increases. To amortize addressing costs, data is transferred between adjacent levels in blocks of a certain size. If algorithms have high access locality, this is an effective strategy to alleviate the bottleneck posed by RAM and disk accesses. Designing algorithms with locality of access, on the other hand, is extremely challenging for some problems and, even for problems where such efforts were successful, the required techniques often depart significantly from the ones used in classical algorithms, in a manner similar to parallel algorithms. I used to teach an entire course on algorithms for memory hierarchies since this was my main research area. Not talking about algorithms for memory hierarchies in this course was another sacrifice I had to make in order to be able to cover the topics I do cover in sufficient depth.

Online algorithms. Most of the time when we talk about algorithms, we assume that the entire input is given to the algorithm in one piece. The algorithm can analyze this input any way it wants, in order to compute the desired solution as efficiently as possible. There are applications, however, where this assumption is not satisfied. Paging, the process of deciding which data items to keep in cache, is one such example: When a page is requested that is not in cache, it is brought into cache and the algorithm has to make a decision which page to evict from the cache in order to make room for it. The choice of the evicted page can have significant impact on the cost of future memory accesses, so it would be nice to know all future memory accesses. However, this information is simply not available, so we have to make the best possible decision with the information we have available at the time it needs to be made. There are numerous other problems of the same flavour. The theory and practice of online algorithms studies how good approximations to an optimal solution can be computed in such a scenario. Again, there are many beautiful ideas that were developed to obtain such algorithms. Not covering them was again a trade-off I made in order to cover the topics I do cover in sufficient depth.

Streaming algorithms. These algorithms are closely related to online algorithms and I/O-efficient algorithms. Where I/O-efficient algorithms try to alleviate the I/O bottleneck by being careful about how they access the hard drive in computations on data sets beyond the size of the computer's RAM, streaming algorithms completely eliminate the I/O bottleneck by processing data as it arrives (similarly to online algorithms) and only storing as much information about the data elements seen so far as fits in memory. As is the case for online algorithms, this raises the question of how good approximations of the correct answer to a given problem can be computed in this fashion. There is a simple reason why streaming algorithms are not covered in this course: I do not know enough about them (yet).

1.2. Prerequisites

A discussion of advanced algorithms needs as a foundation a solid understanding of basic algorithmic concepts, as covered in CSCI 3110.

In terms of specific problems and algorithms to solve them, the prerequisites for this course are fairly modest. I assume that you know about sorting, selection, and binary search and understand the algorithms that solve these problems. I also expect you to know about basic graph traversals (BFS and DFS), their cost, and the type of problems they can be used to solve. Finally, I assume that you know what a minimum spannning tree or shortest path tree is, along with algorithms to compute them (Bellman-Ford, Ford-Fulkerson, Dijkstra, Prim, Kruskal). Any other algorithms needed as building blocks of algorithms discussed in this course are introduced as needed.

I assume a greater degree of preparedness in terms of your comfort with simply talking about algorithmic problems. You should have a solid understanding of and be able to comfortably work with \(O\)-, \(\Omega\)-, \(\Theta\)-, \(o\)-, and \(\omega\)-notation and you should have an appreciation for the need for formalism and rigour in describing algorithms and in arguing about their correctness and running times. Having said that, I will often appeal to your intuition about various algorithmic questions and will implicitly assume that you are able to translate this intuition into formal arguments.

As far as algorithmic techniques go, divide and conquer should be your bread and butter and you should be comfortable with greedy algorithms and dynamic programming, as they will be used extensively in this course to solve various subproblems in approximate and exact solutions to NP-hard problems.

If you cannot put a checkmark beside each of these topics, now is the time to open your CSCI 3110 textbook (most likely Cormen et al. or Kleinberg/Tardos) and refresh your memory on these topics.

1.3. A Note on Graphs

Many of the problems studied in this course are graph problems, because almost anything can be modelled as a graph problem. For some of these problems, it is important whether the underlying graph is directed or undirected; for others, it is irrelevant beyond the fact that, in a directed graph, the two edges \((v,w)\) and \((w,v)\) are separate entities while they are the same in an undirected graph. For problems that can be defined for both directed and undirected graph, I will simply use the term "graph", without specifying whether it is directed or undirected. You should check in these cases that the problem definition and the developed algorithms are indeed sound both if the graph is undirected and if it is directed. When it is important whether the graph is directed or undirected, I state this explicitly.

2. Linear Programming

Linear programming (LP), as a tool for modelling optimization problems and as an algorithmic problem to be solved efficiently, can easily fill an entire course. Since this course focuses mainly on combinatorial algorithms for a wide range of problems, we do not cover LP in much depth. However, many of the problems discussed in this course have elegant LP or integer linear programming (ILP) formulations that shed light on the structure of the problems, and some of the developed algorithms use linear programming as a building block. Thus, a basic understanding of LP and ILP and of the basic techniques to solve LP and ILP problems is important for later topics covered in this course.

The discussion of LPs is split into three parts. In this chapter, we introduce what linear programs and integer linear programs are, and how to use them to model optimization problems. Chapter 3 introduces a classical algorithm for solving linear programs, the Simplex Algorithm. The proof of correctness of this algorithm is deferred to Chapter 4, which introduces LP duality and complementary slackness as two central tools for proving that a given solution of an LP is optimal.

The roadmap for this chapter is as follows:

-

Section 2.1 defines what a linear program is.

-

Section 2.2 illustrates the use of linear programs to model optimization problems using the single-source shortest paths problem as an example.

-

Section 2.3 introduces integer linear programming and illustrates its usefulness as a tool for modelling optimization problems by showing how to convert the minimum spanning tree problem into an ILP.

-

Section 2.4 explores the computational complexity of linear programming and integer linear programming. Linear programs can be solved in polynomial time, often even if they have an exponential number of constraints. Integer linear programming, on the other hand, is NP-hard. This raises the question whether we can use the tools to solve linear programs efficiently also to obtain good, albeit not optimal, solutions of ILPs.

-

Section 2.5 discusses LP relaxations as a way to convert an ILP into a related LP. LP relaxation plays an important role in approximation algorithms, discussed in the third part of this book.

-

Section 2.6 finally, discusses two commonly used forms of linear programs. One of them—canonical form—plays a key role in the theory of LP duality. The other—standard form—is the input expected by the Simplex Algorithm.

2.1. Linear Programs

A linear program (LP) \(P\) is an optimization problem over a set of real-valued variables \(\{x_1, \ldots, x_n\}\). We usually represent this set of variables as a vector \(x = (x_1, \ldots, x_n)\). Its description includes a linear objective function \(f(x_1, \ldots, x_n)\) and a collection of \(m\) linear constraints, that is, linear inequalities involving the variables \(x_1, \ldots, x_n\). The goal is to find an assignment of real values \({\hat x} = (\hat x_1, \ldots, \hat x_n)\) to the variables in \(x = (x_1, \ldots, x_n)\) that satisfies all constraints in \(P\) and either minimizes or maximizes the objective function value \(f(\hat x_1, \ldots, \hat x_n)\). Whether a minimum or maximum objective function value is sought is part of the problem description and the problem is accordingly called a minimization LP or a maximization LP. An assignment \(\hat x\) that minimizes or maximizes the objective function value subject to the given set of linear constraints is called an optimal solution of the LP \(P\). If \(\hat x\) satisfies the constraints in \(P\) but may or may not maximize \(f(\hat x_1, \ldots, \hat x_n)\), then \(\hat x\) is called a feasible solution of \(P\).

Reading \((\hat x_1, \ldots, \hat x_n)\) as an \(n\)-dimensional vector, the set of feasible solutions for a single linear constraint is always a halfspace, the portion of \(\mathbb{R}^n\) on one side of an \((n-1)\)-dimensional hyperplane. This is called the feasible region of the constraint. Since a feasible solution for a set of constraints must satisfy every constraint in the set, the feasible region of the constraints in \(P\) is the intersection of the feasible regions of the individual constraints and is thus convex. This convexity, together with the linearity of the constraints and objective function is the key to solving linear programs efficiently.

As an example, consider the following linear program over two variables \(x\) and \(y\):

\[\begin{gathered} \text{Maximize } x + 2y\\ \begin{aligned} \text{s.t. (subject to) } \hphantom{3x + y} \llap{x} &\ge 0\\ y &\ge 0\\ 3x + y &\le 26\\ 4x - y &\le 31\\ 2y - x &\le 4 \end{aligned} \end{gathered}\tag{2.1}\]

The constraints and feasible region of (2.1) are shown in Figure 2.1. The optimal solution is the red point \(\bigl(\frac{48}{7}, \frac{38}{7}\bigr)\) with objective function value \(\frac{48}{7} + 2 \cdot \frac{38}{7} = \frac{124}{7}\).

Figure 2.1: The feasible region of the constraint \(3x + y \le 26\) is shaded light blue. The feasible region of the set of all constraints in (2.1) is shaded light red. The red dot is the optimal solution of (2.1). All solutions on each of the blue lines have the same objective function value. Moving in the direction indicated by the red arrow increases the objective function value.

The fact that, in this example, the optimal solution is a vertex of the feasible region is not a coincidence. Since moving the solution in a particular direction (the red arrow in Figure 2.1) increases the objective function, the maximum objective function value is always obtained at a point on the boundary of the feasible region. If this point is not a vertex of the feasible region, it is an interior point of a boundary facet of the feasible region (a segment in 2-d, an up to \((n-1)\)-dimensional convex region in higher dimensions), and every point in this facet has the same objective function value. Thus, the solution can be moved to a boundary vertex of this facet without changing the objective function value; this boundary vertex of this facet is a boundary vertex of the feasible region. The Simplex Algorithm exploits the fact that only vertices of the feasible region need to be considered in the search for an optimal solution.

2.2. Shortest Paths as an LP

2.2.1. The Single-Source Shortest Paths Problem

As a first example of the use of linear programs to model optimization problems, consider the single-source shortest paths problem.

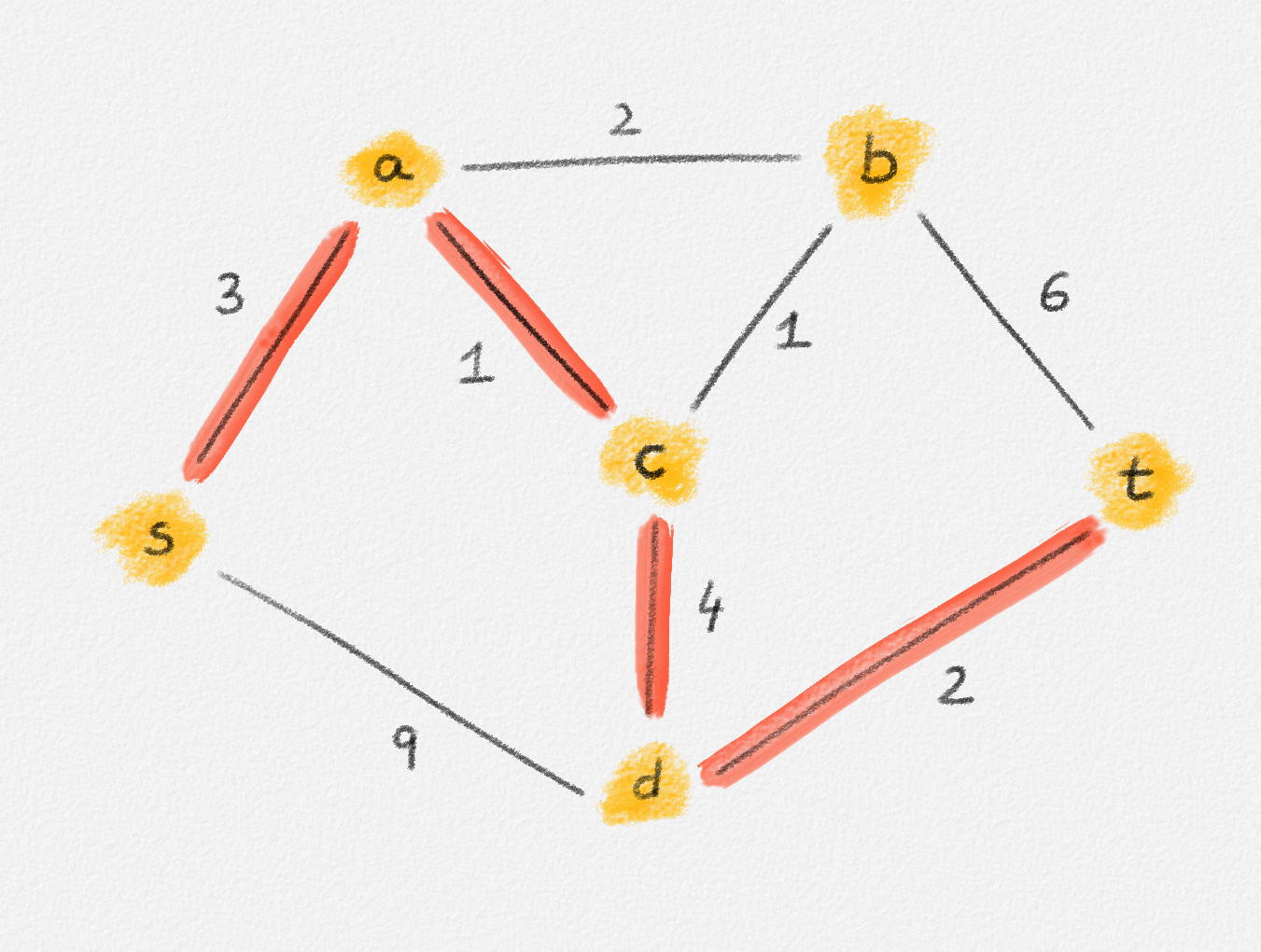

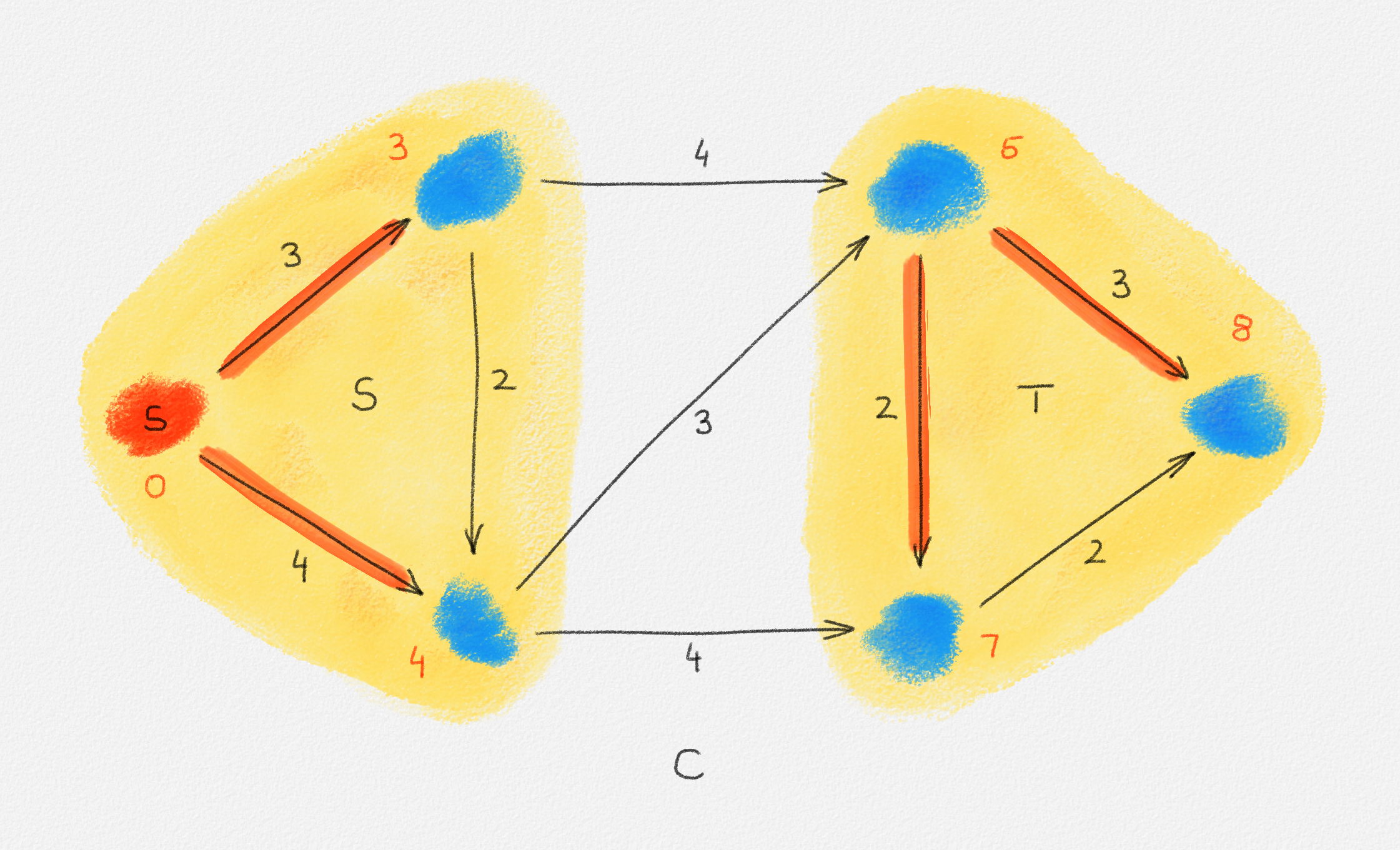

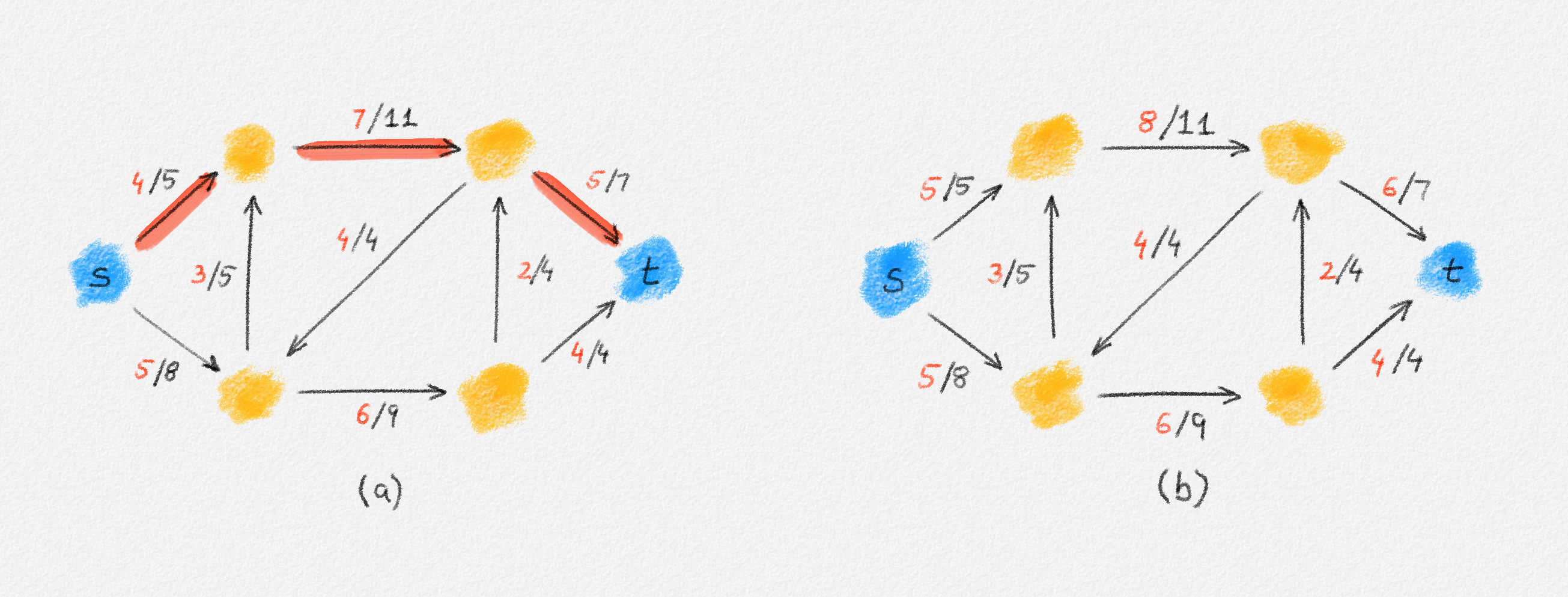

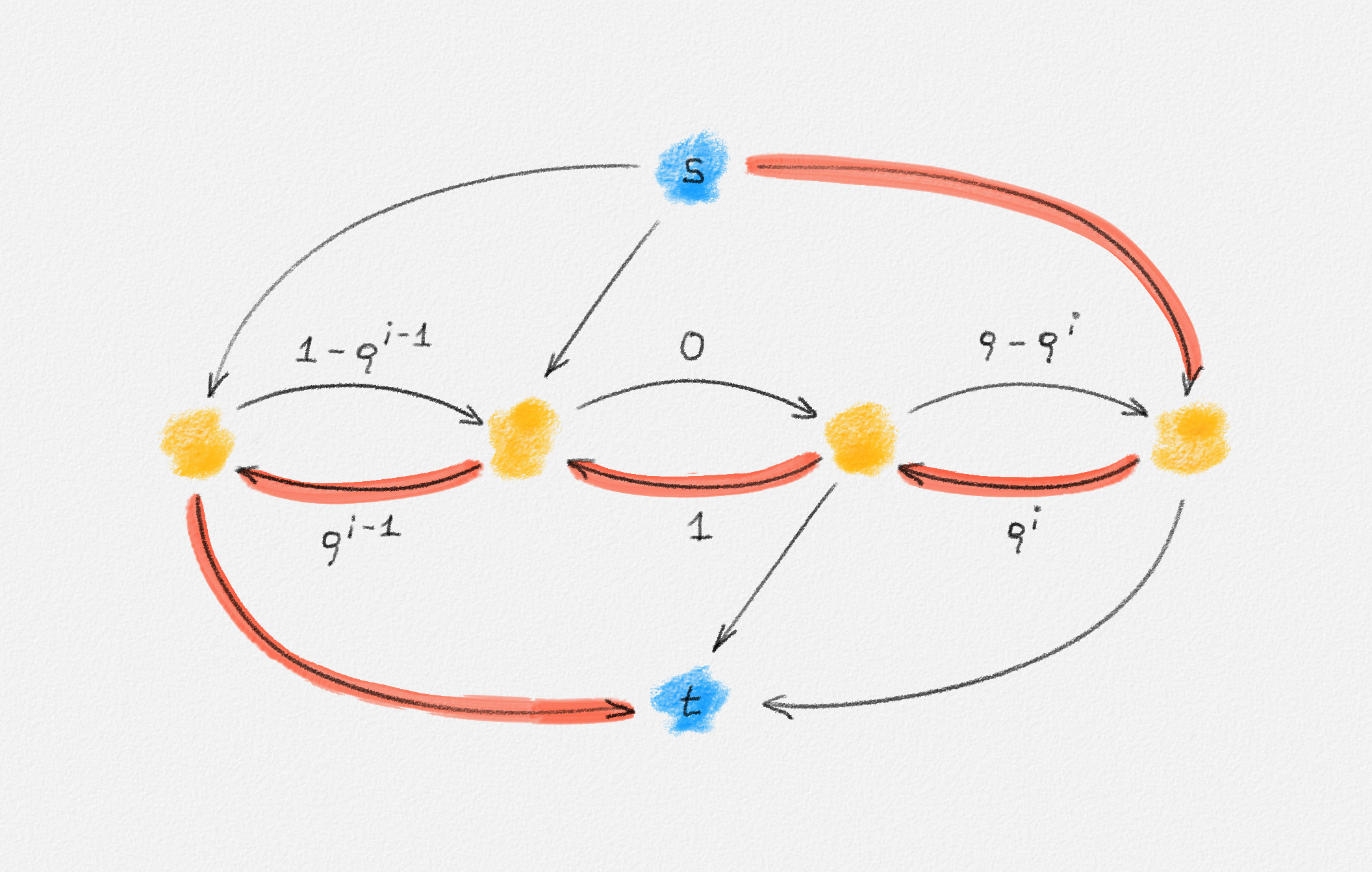

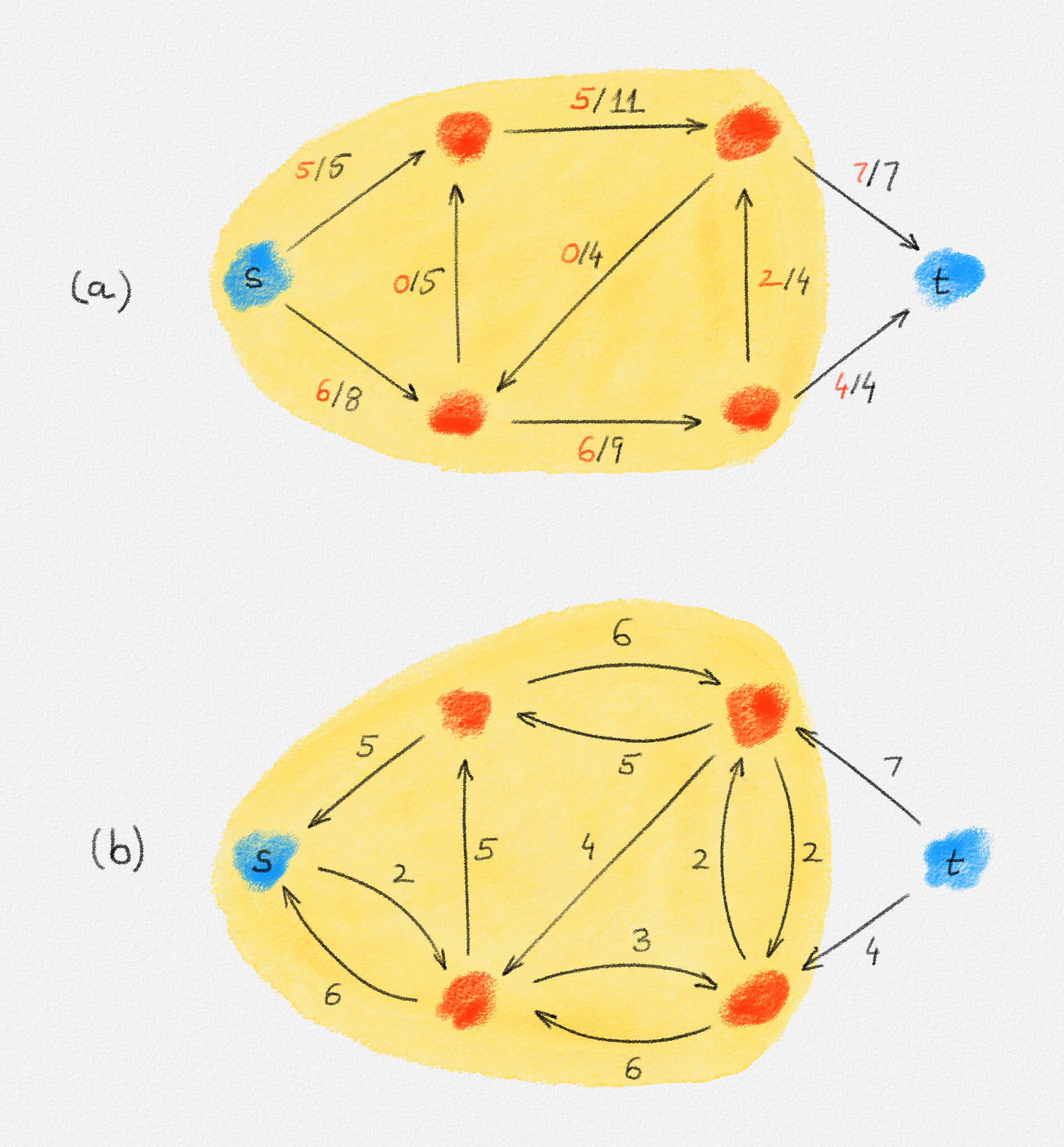

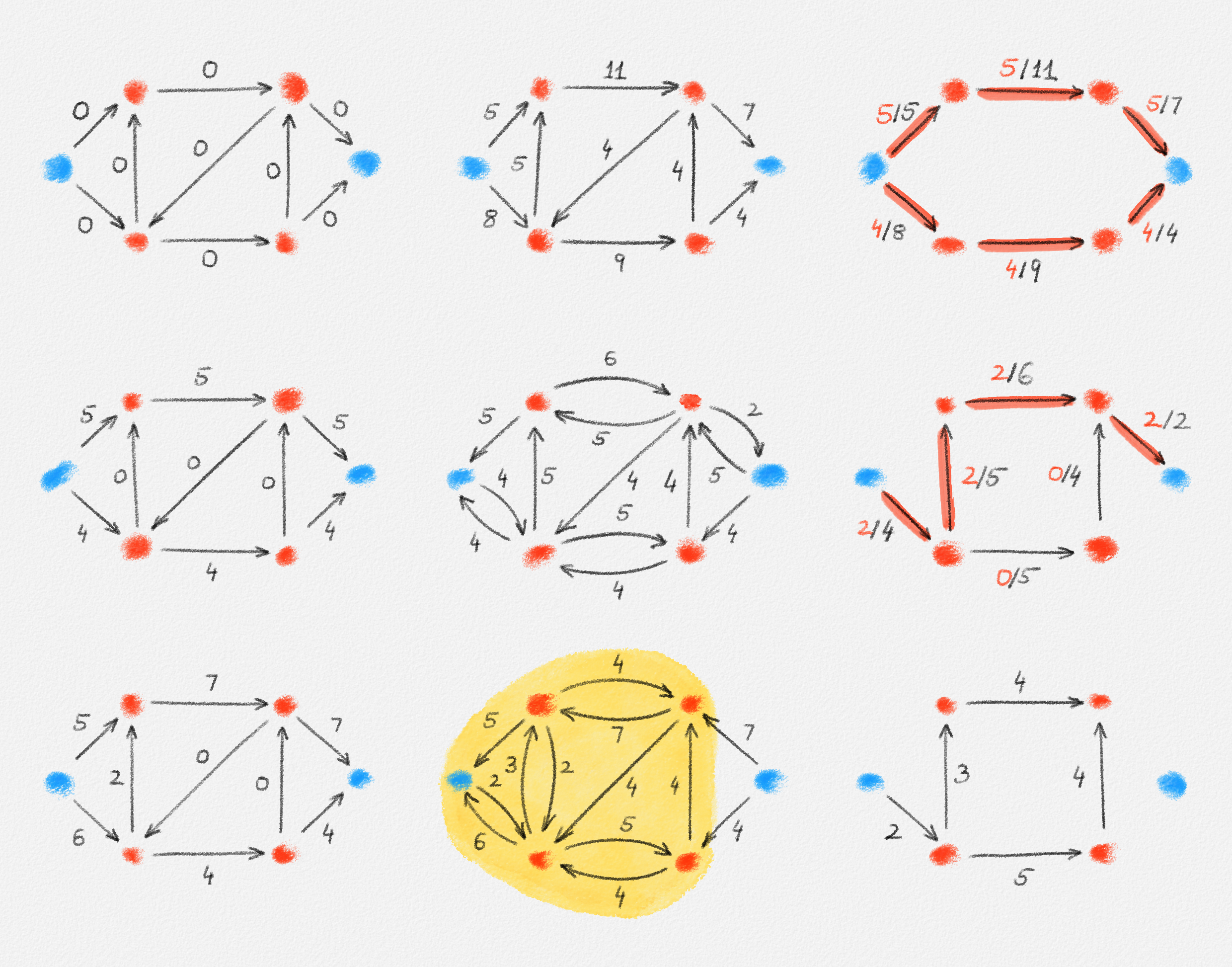

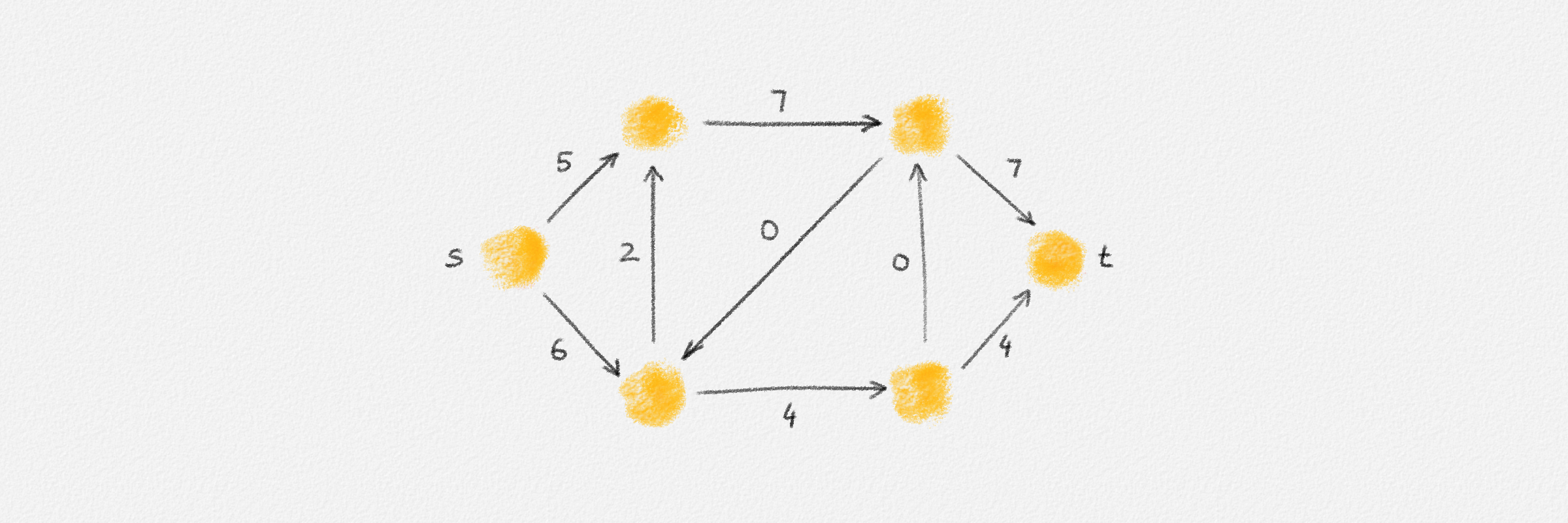

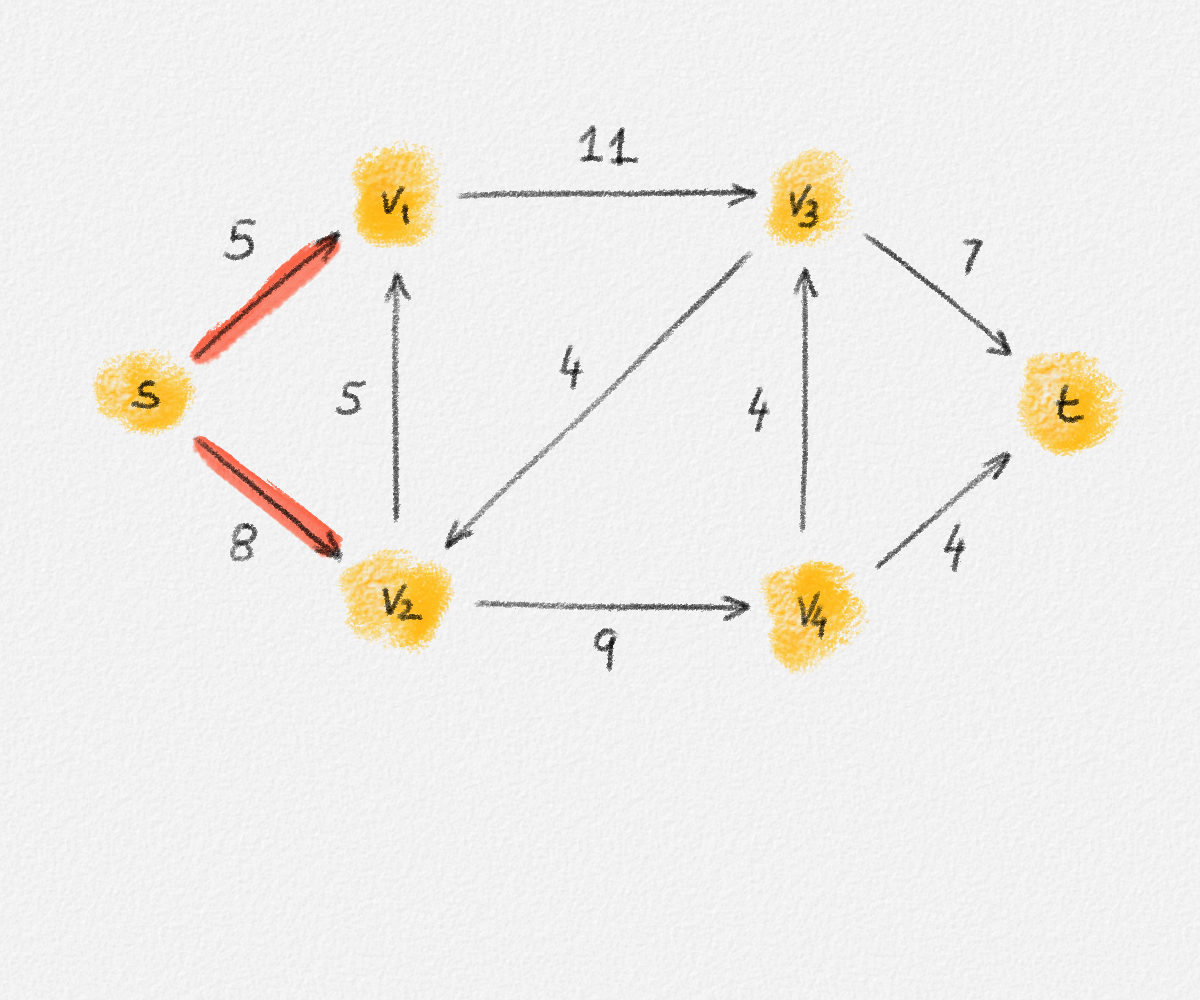

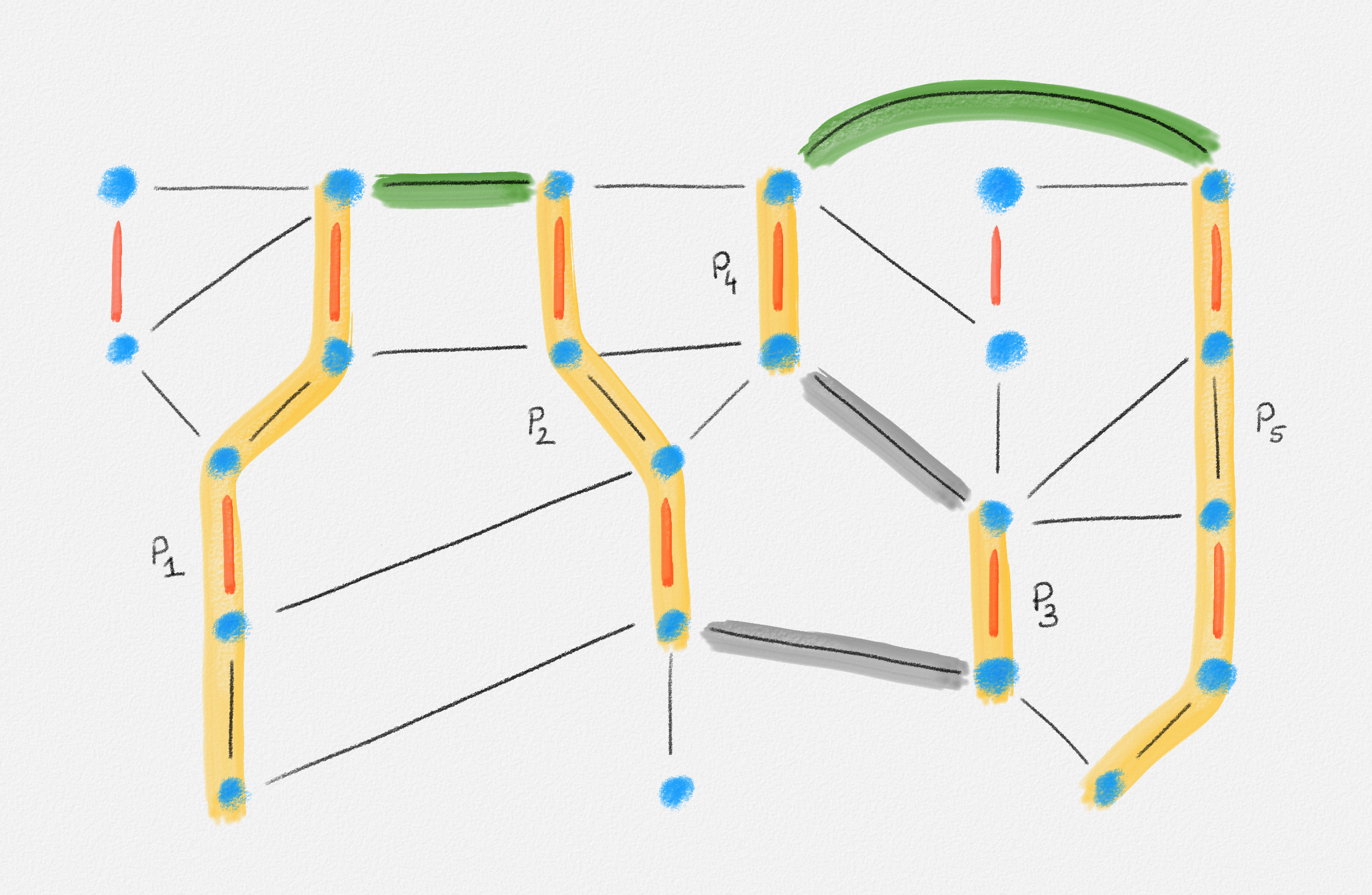

Given a graph \(G = (V, E)\), a path from a vertex \(s\) to a vertex \(t\) is a sequence of vertices \(P = \langle v_0, \ldots, v_k \rangle\) such that \(v_0 = s\), \(v_k = t\), and there exists an edge \(e_i = (v_{i-1},v_i) \in E\) for all \(1 \le i \le k\). See Figure 2.2.

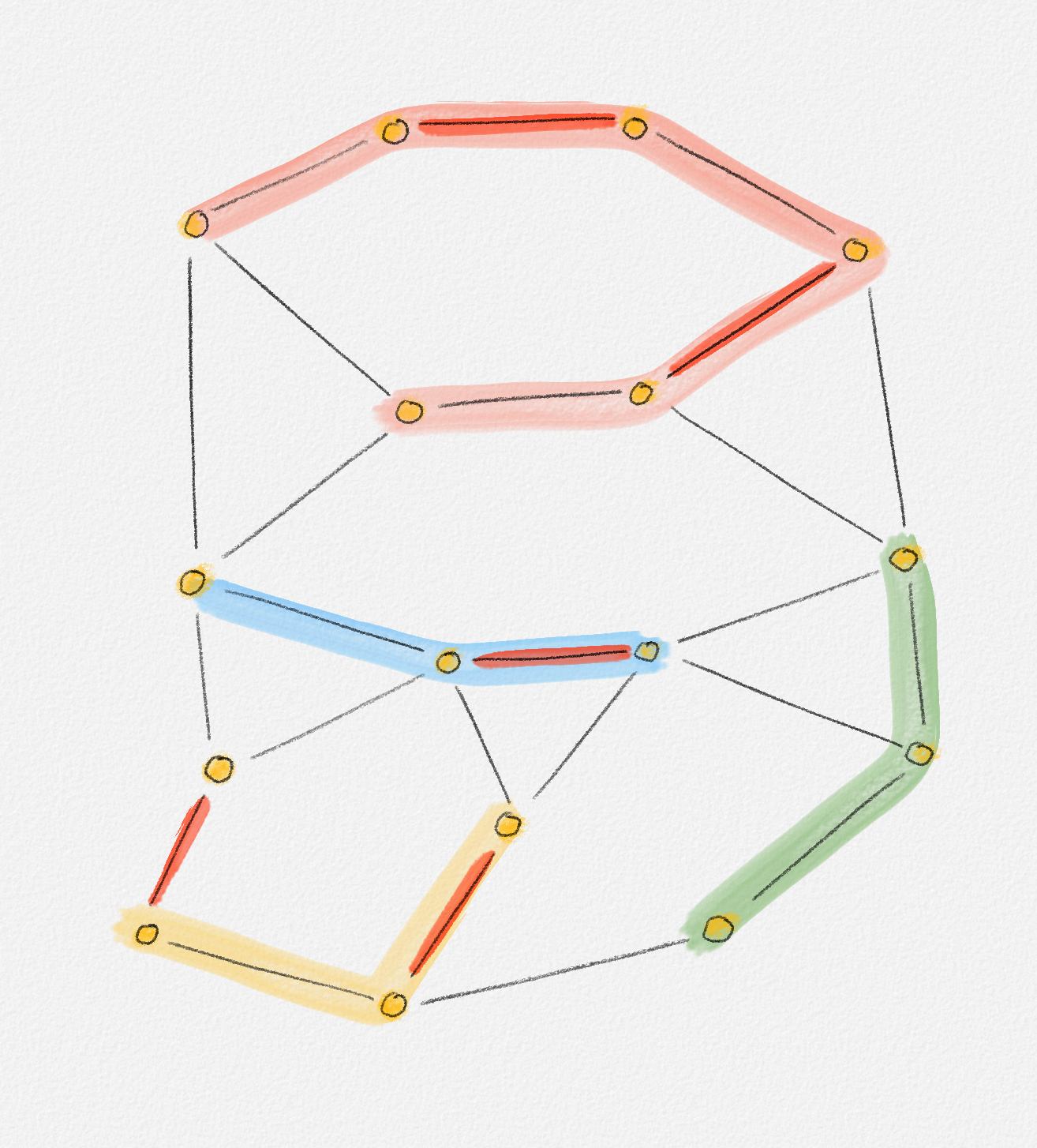

Figure 2.2: The red path \(\langle s, a, c, d, t\rangle\) is a shortest path from \(s\) to \(t\) of length \(10\). \(\langle s, d, t\rangle\) is another path from \(s\) to \(t\), but it is not a shortest path because its length is \(11\).

Depending on the context, \(P\) may be viewed as the sequence of its vertices, the sequence of its edges \(\langle e_1, \ldots, e_k \rangle\) or the alternating sequence of both \(\langle v_0, e_1, v_1, \ldots, e_k, v_k \rangle\).

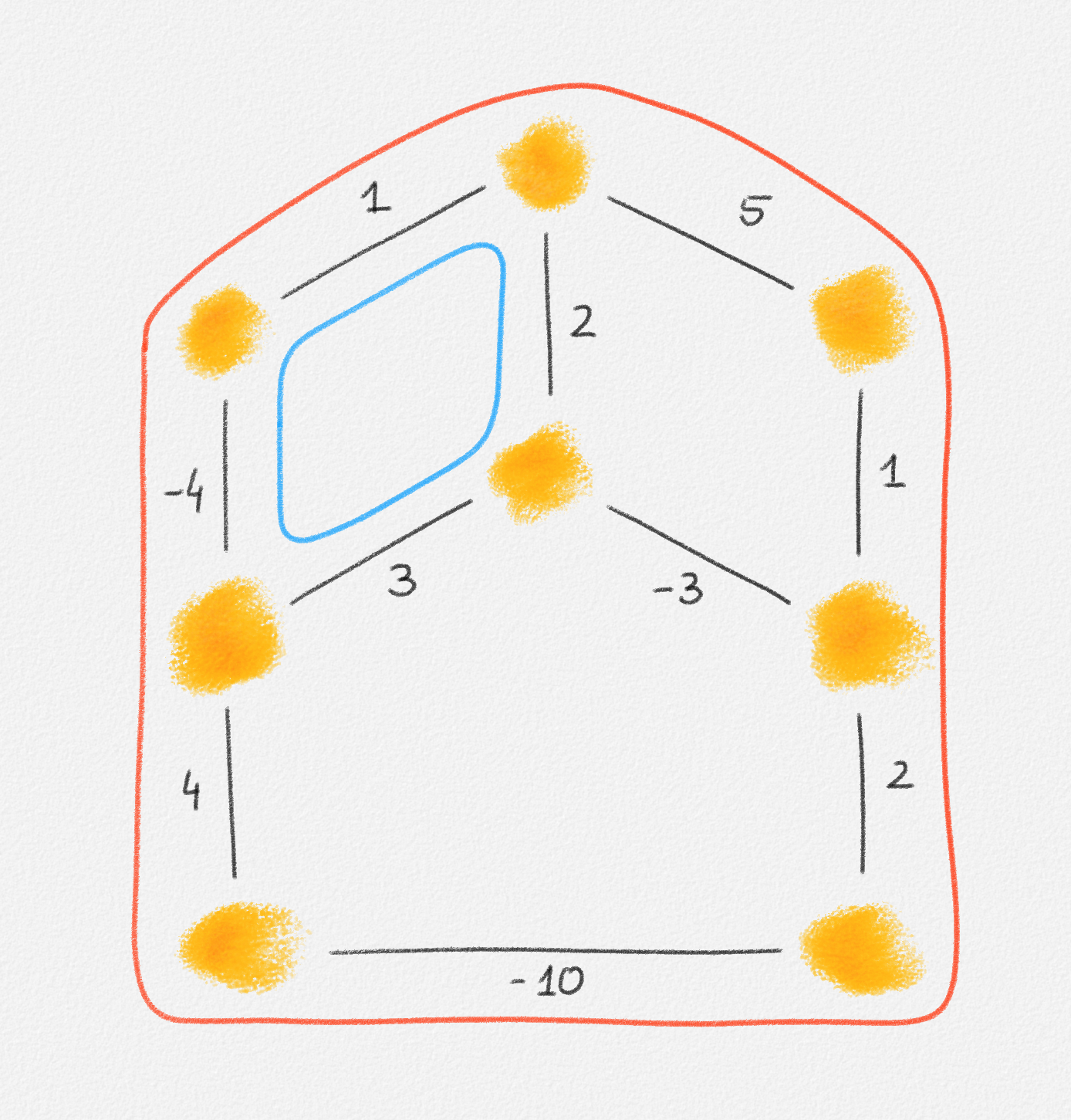

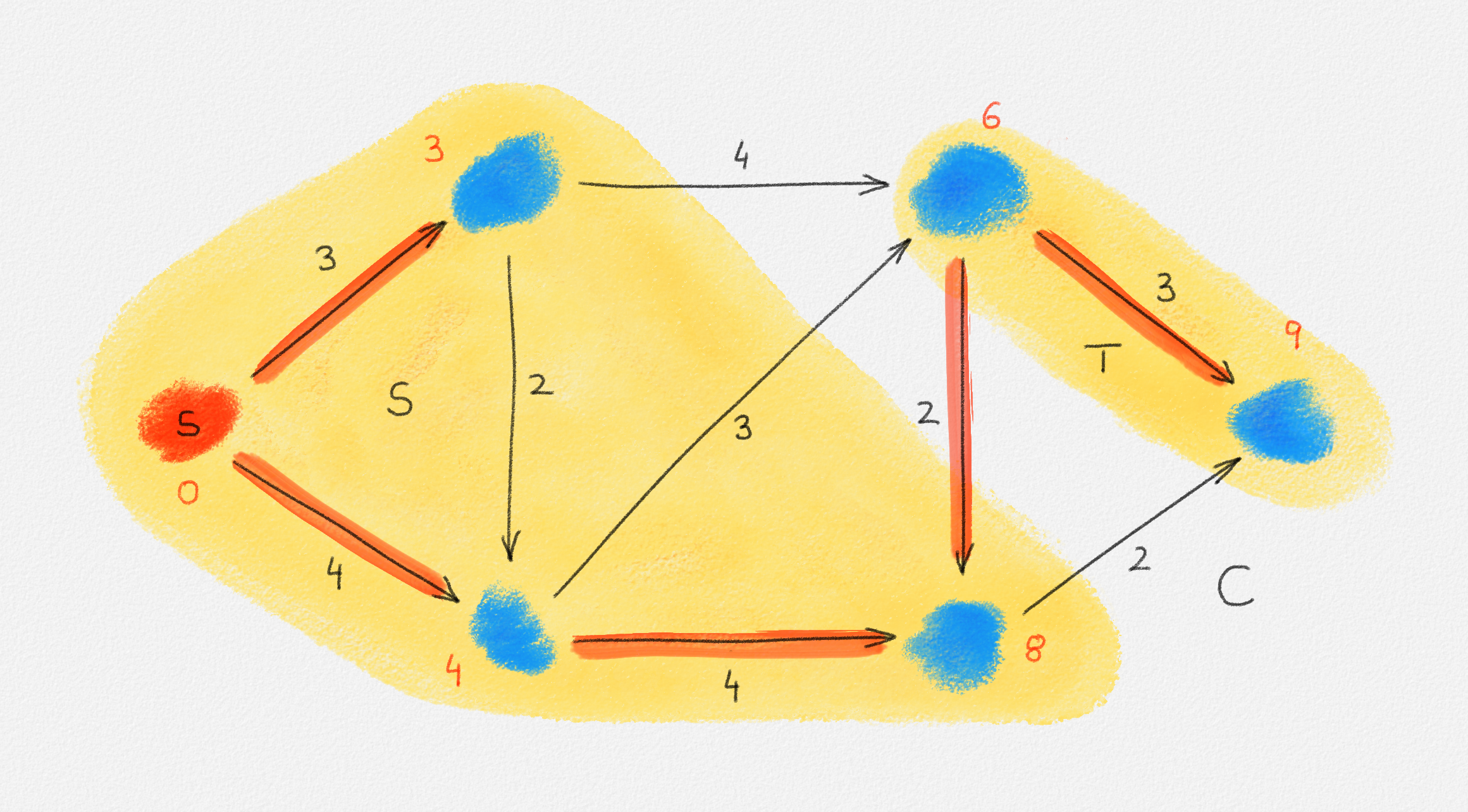

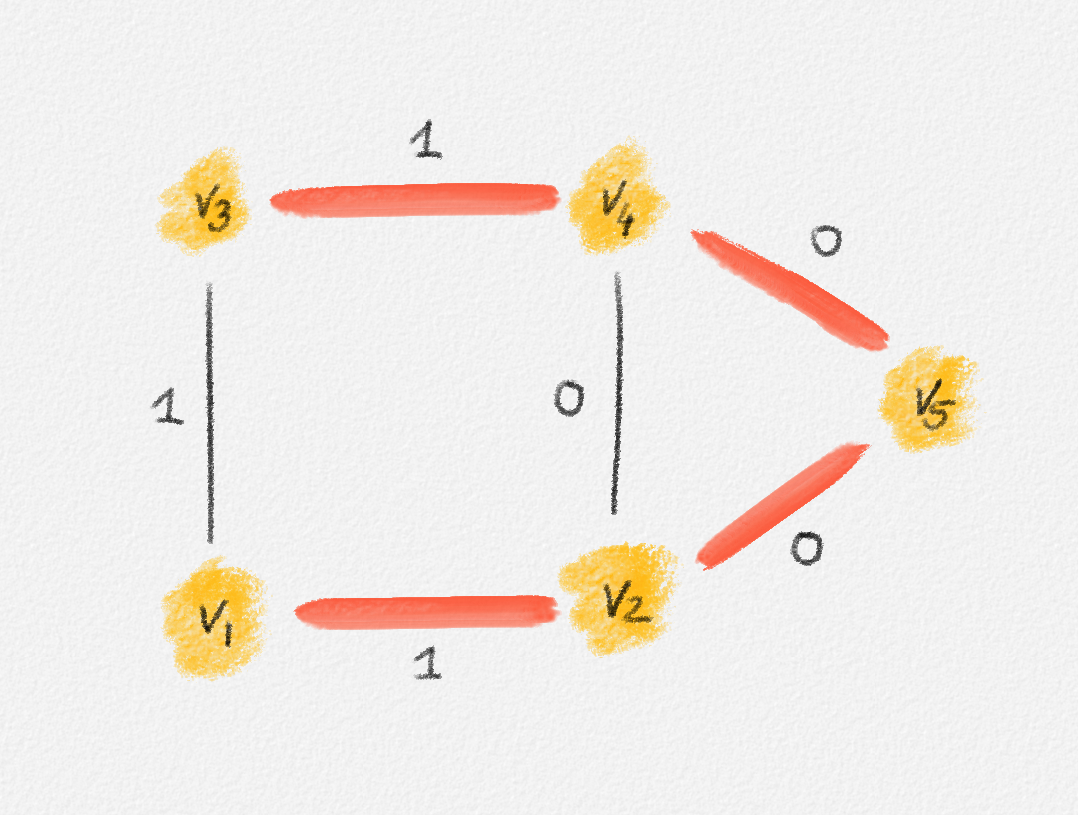

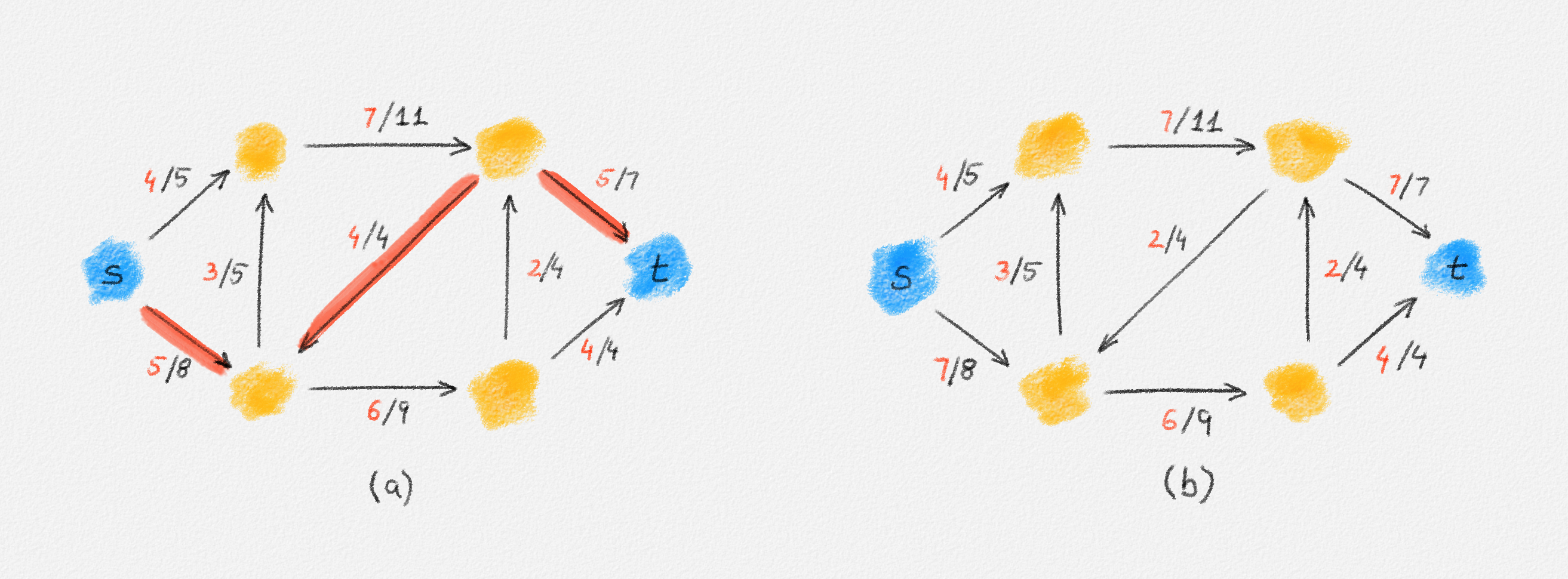

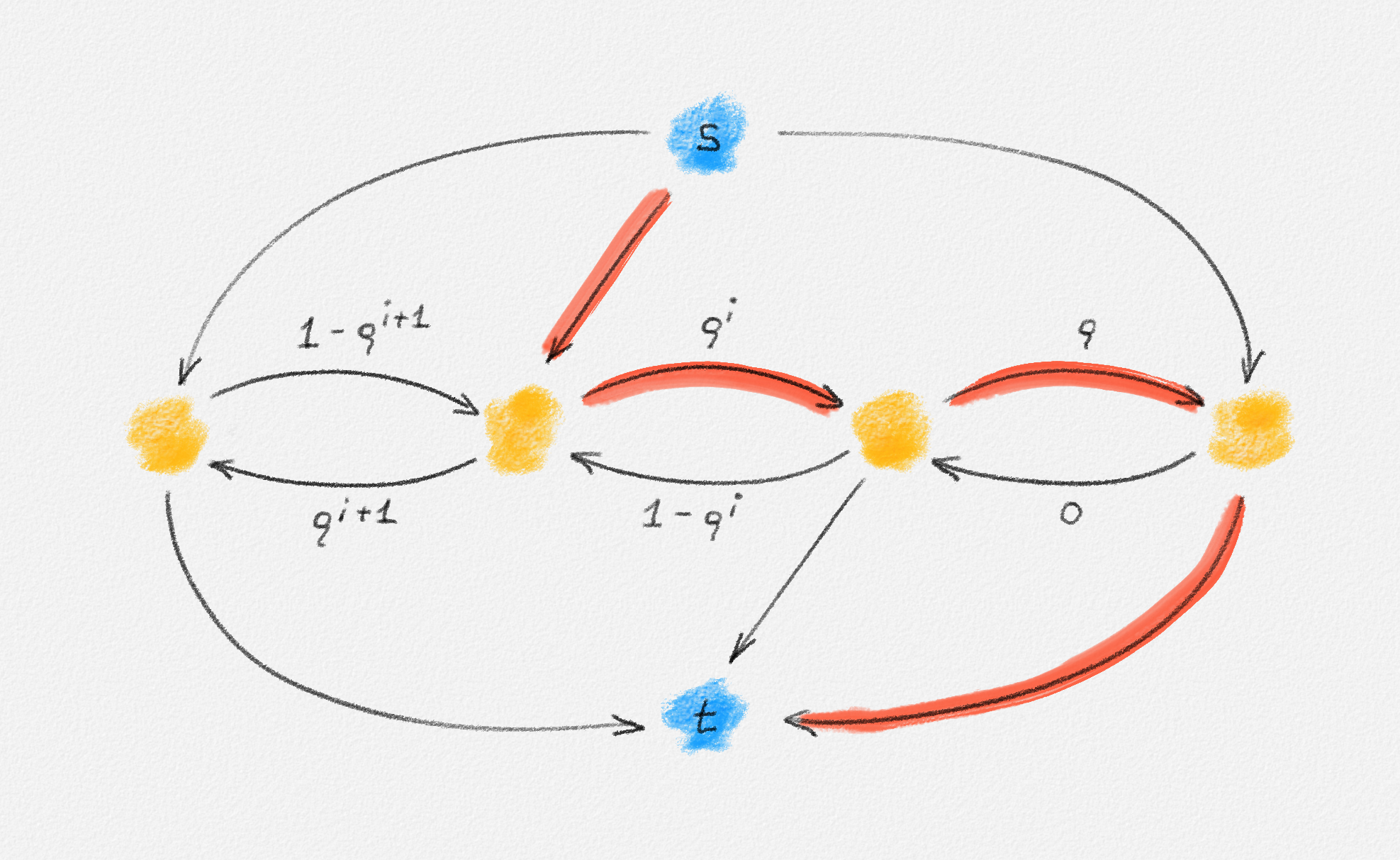

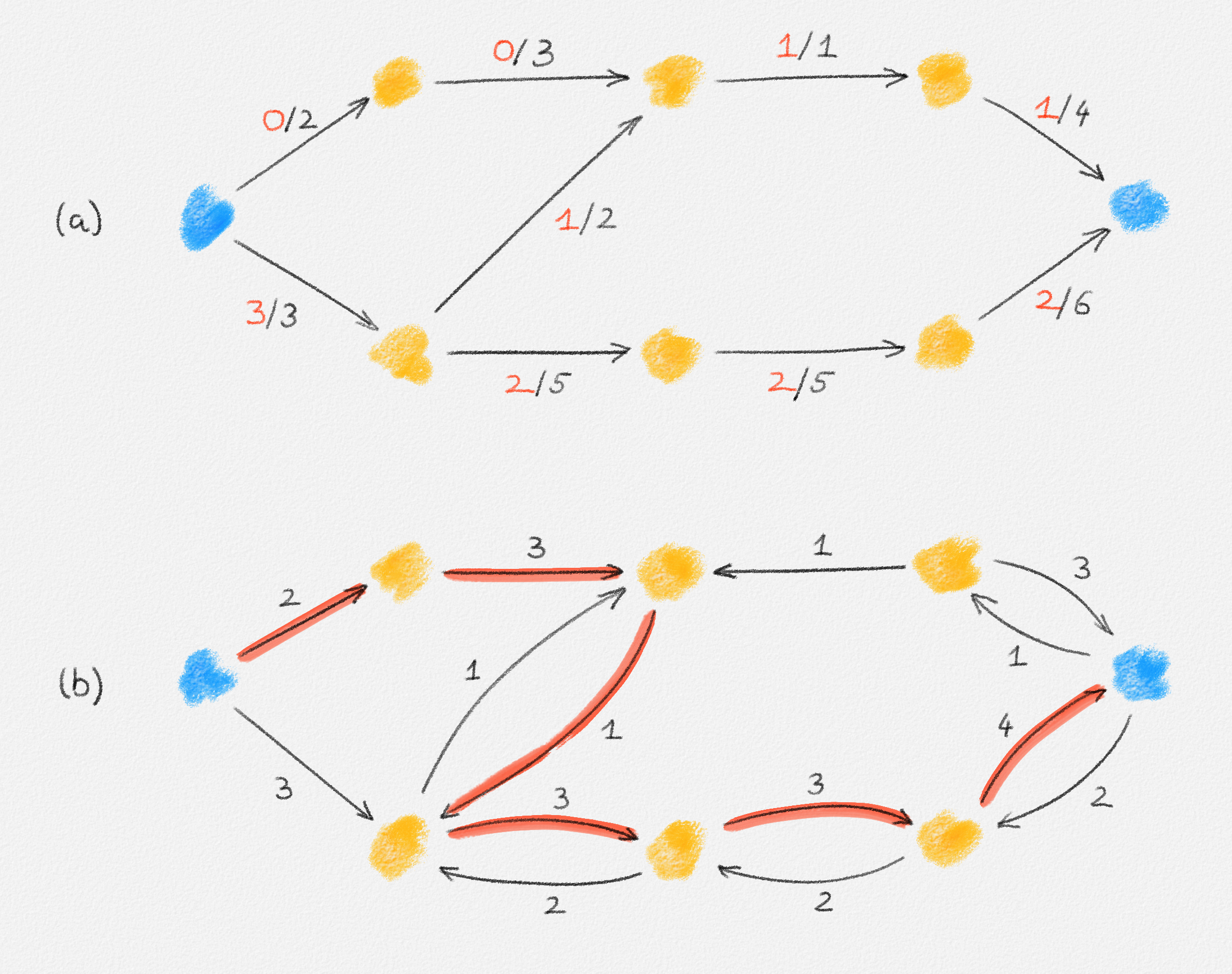

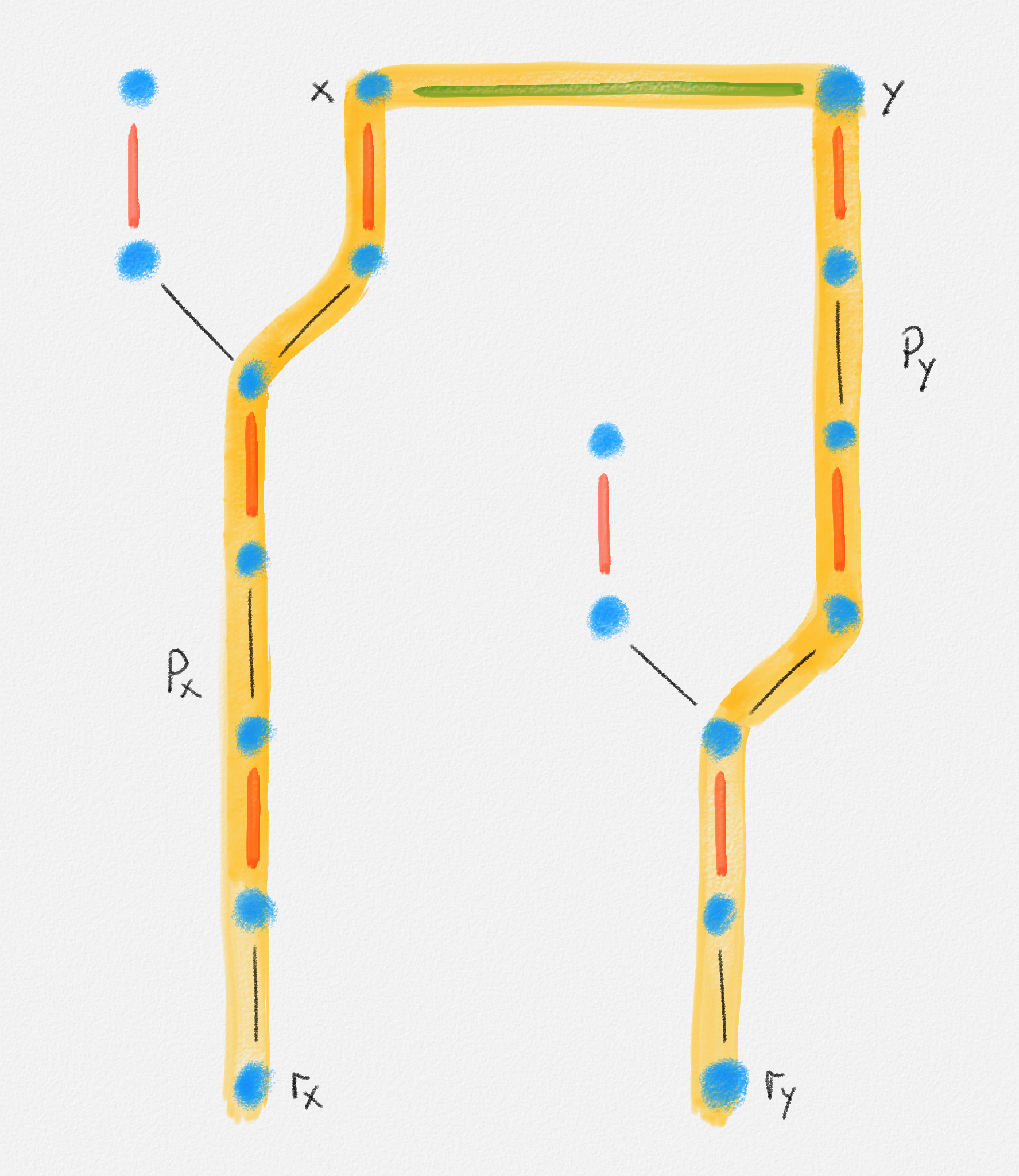

A path \(P\) from a vertex \(s\) to a vertex \(t\) is a cycle if \(s = t\). See Figure 2.3.

Figure 2.3: The red and blue paths are cycles. The red one is a negative cycle of weight \(-1\). The blue one is not a negative cycle; its weight is \(2\).

An edge-weighted graph is a pair \((G,w)\) consisting of a graph \(G = (V,E)\) and an assignment \(w : E \rightarrow \mathbb{R}\) of weights to its edges.

The weight or length of a path \(P\) in an edge-weighted graph \((G,w)\) is the total weight of all edges in \(P\):

\[w(P) = \sum_{e \in P} w_e\]

A shortest path from a vertex \(s \in V\) to a vertex \(t \in V\) is a path \(\Pi_{G,w}(s,t)\) from \(s\) to \(t\) in \(G\) such that every path \(P\) from \(s\) to \(t\) in \(G\) satisfies \(w(P) \ge w(\Pi_{G,w}(s,t))\).

The distance from \(s\) to \(t\) is

\[\mathrm{dist}_{G,w}(s,t) = w(\Pi_{G,w}(s,t)).\]

Shortest paths in an edge-weighted graph \((G,w)\) are well defined only if there are no negative cycles in \((G,w)\), where

A negative cycle is a cycle \(C\) in \(G\) with \(w(C) < 0\).

Indeed, if there exists a path from \(s\) to \(t\) that includes a vertex in some negative cycle \(C\), then it is possible to generate arbitrarily short paths from \(s\) to \(t\) by including sufficiently many passes through \(C\) in the path.

The single-source shortest paths problem is to compute the distance from some source vertex \(s\) to every vertex \(v \in V\):

Single-source shortest paths problem (SSSP): For an edge-weighted graph \((G,w)\) and a source vertex \(s \in V\), compute \(\mathrm{dist}_{G,w}(s, v)\) for all \(v \in V\).

In general, the goal is to find the actual shortest paths \(\Pi_{G,w}(s,v)\), not only the distances \(\mathrm{dist}_{G,w}(s,v)\). By the following two exercises, computing the distances is the hard part.

Exercise 2.1: In general, there may be more than one shortest path between two vertices \(s\) and \(t\) in a connected edge-weighted graph \((G, w)\). Prove that it is possible to choose a particular shortest path \(\Pi_{G,w}(s, v)\) for each vertex \(v \in V\) such that the union of these shortest paths is a tree. This tree is called a shortest path tree.

Exercise 2.2: Show that finding the shortest paths from a vertex \(s\) to all vertices \(v\) in an edge-weighted graph \((G, w)\) is no harder than computing the distances from \(s\) to all vertices in \(G\). Specifically, if \(d : V \rightarrow \mathbb{R}\) is a labelling of the vertices of \(G\) such that \(d_v = \mathrm{dist}_{G,w}(s,v)\) for all \(v \in V\), show how to compute a shortest path tree \(T\) with root \(s\) in \(O(n + m)\) time.

2.2.2. Properties of Shortest Paths

The LP formulation of the SSSP problem is based on the following two properties of distances from \(s\).

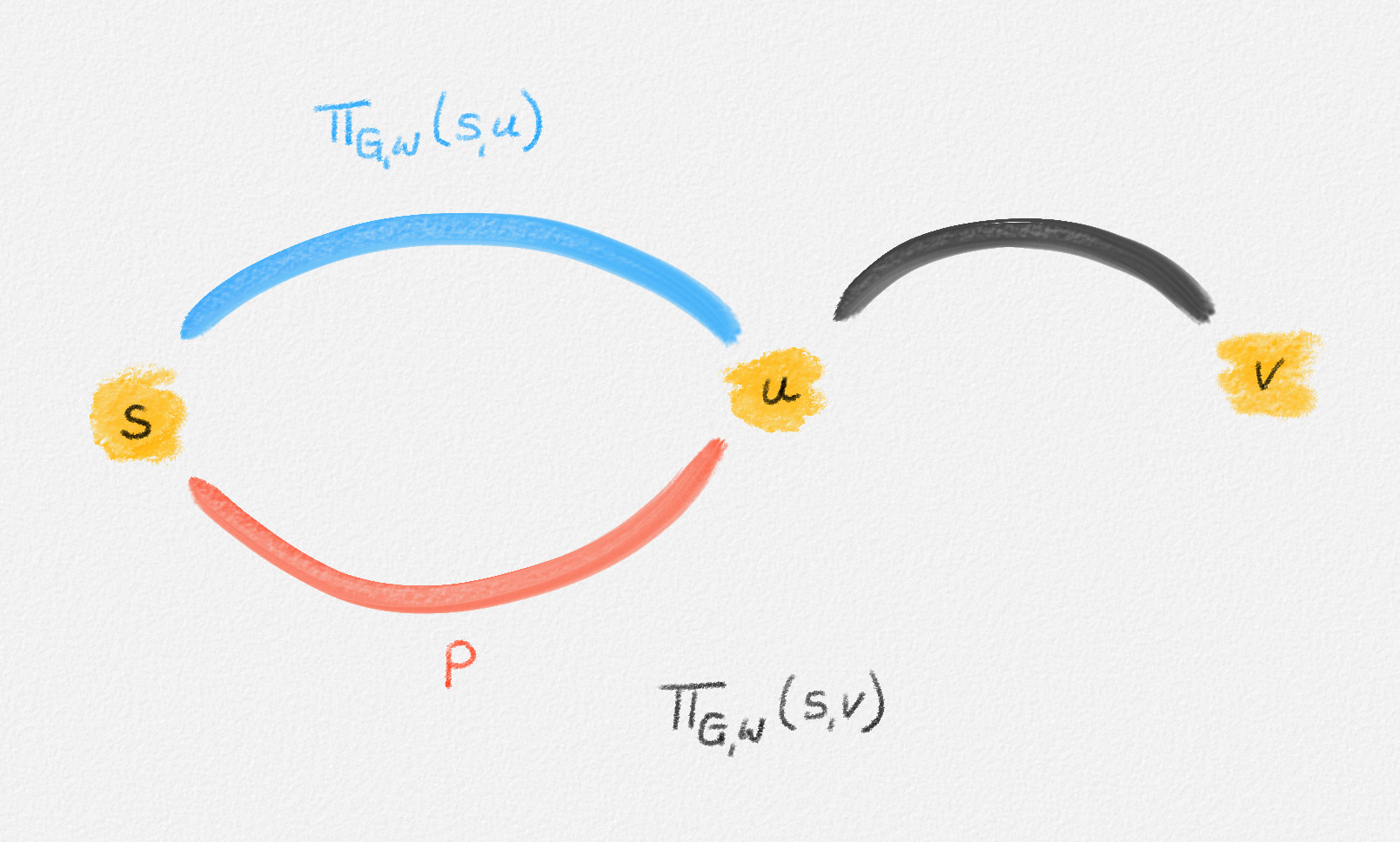

Lemma 2.1: Let \(v \ne s\) and let \(u\) be \(v\)'s predecessor in \(\Pi_{G,w}(s,v)\). Then the subpath \(P\) of \(\Pi_{G,w}(s,v)\) from \(s\) to \(u\) is a shortest path from \(s\) to \(u\).

Proof: Since \(P\) is a path from \(s\) to \(u\), we have

\[w(P) \ge \mathrm{dist}_{G,w}(s,u).\]

If \(w(P) > \mathrm{dist}_{G,w}(s,u)\), then the path \(\Pi_{G,w}(s,u) \circ \langle (u,v) \rangle\) (the concatenation of the path \(\Pi_{G,w}(s,u)\) with the single-edge path \(\langle (u,v) \rangle\)) is a path from \(s\) to \(v\) of length

\[\mathrm{dist}_{G,w}(s,u) + w_{u,v} < w(P) + w_{u,v} = \mathrm{dist}_{G,w}(s,v),\]

a contradiction. This is illustrated in Figure 2.4. ▨

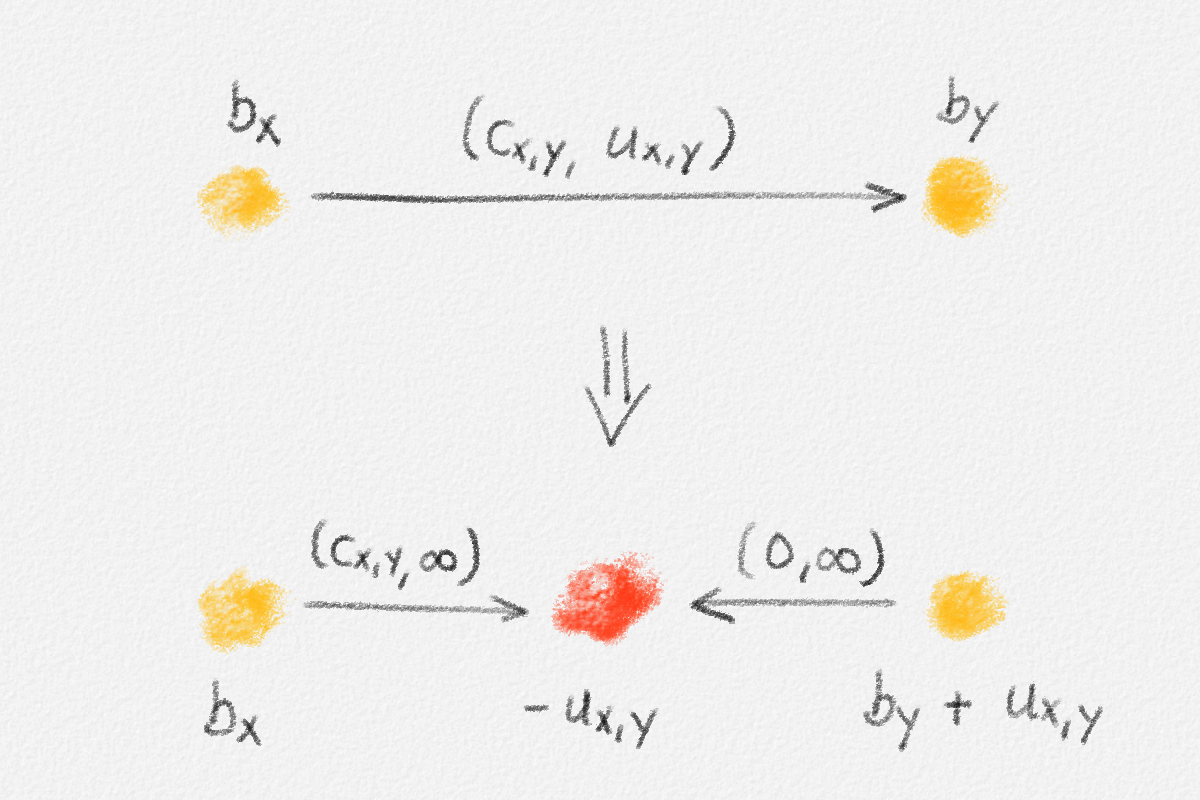

Figure 2.4: If \(\Pi_{G,w}(s,u)\) is shorter than \(P\), then \(\Pi_{G,w}(s,v)\) cannot be a shortest path.

\[\begin{multline} \mathrm{dist}_{G,w}(s,v) =\\ \begin{cases} 0 & \text{if } v = s\\ \min \{ \mathrm{dist}_{G,w}(s,u) + w_{u,v} \mid (u,v) \in E \} & \text{if } v \ne s \end{cases}\phantom{spc} \end{multline}\tag{2.2}\]

Proof: The case when \(v = s\) is obvious since the shortest path from \(s\) to \(s\) contains only \(s\) and thus has length \(0\). (Convince yourself that this follows from the absence of negative cycles.)

For \(v \ne s\), let \(z\) be \(v\)'s predecessor in \(\Pi_{G,w}(s,v)\). By Lemma 2.1,

\[\begin{aligned} \mathrm{dist}_{G,w}(s,v) &= \mathrm{dist}_{G,w}(s,z) + w_{z,v}\\ &\ge \min \{ \mathrm{dist}_{G,w}(s,u) + w_{u,v} \mid (u,v) \in E \}. \end{aligned}\tag{2.3}\]

Conversely, if \(z'\) is \(v\)'s in-neighbour that minimizes \(\mathrm{dist}_{G,w}(s,z') + w_{z',v}\), then \(\Pi_{G,w}(s, z') \circ \langle (z',v) \rangle\) is a path from \(s\) to \(v\) of length

\[\mathrm{dist}_{G,w}(s,z') + w_{z',v} = \min \{ \mathrm{dist}_{G,w}(s,u) + w_{u,v} \mid (u,v) \in E \}.\]

Thus,

\[\mathrm{dist}_{G,w}(s,v) \le \min \{ \mathrm{dist}_{G,w}(s,u) + w_{u,v} \mid (u,v) \in E \}.\tag{2.4}\]

Equation (2.2) follows from inequalities (2.3) and (2.4). ▨

2.2.3. The LP Formulation

Lemma 2.2 proves that

\[\displaystyle\mathrm{dist}_{G,w}(s,v) = \begin{cases} 0 & \text{if } v = s\\ \min \{ \mathrm{dist}_{G,w}(s,u) + w_{u,v} \mid (u,v) \in E \} & \text{if } v \ne s. \end{cases}\]

This is not a linear equation (because of the "min"), but its solution can be expressed as the solution to an LP:

\[\begin{gathered} \text{Maximize } \mathrm{dist}_{G,w}(s,v)\\ \text{s.t. } \mathrm{dist}_{G,w}(s,v) - \mathrm{dist}_{G,w}(s,u) \le w_{u,v} \quad \forall (u,v) \in E. \end{gathered}\tag{2.5}\]

Combining the linear constraints in (2.5) for all vertices \(v \ne s\) and summing the objective functions for all vertices leads to the following LP formulation of the SSSP problem:1

\[\begin{gathered} \text{Maximize } \sum_{v \in V} x_v\\ \begin{aligned} \text{s.t. } \phantom{x_v - x_u} \llap{x_s} &= 0\\ x_v - x_u &\le w_{u,v} && \forall (u,v) \in E \end{aligned} \end{gathered}\tag{2.6}\]

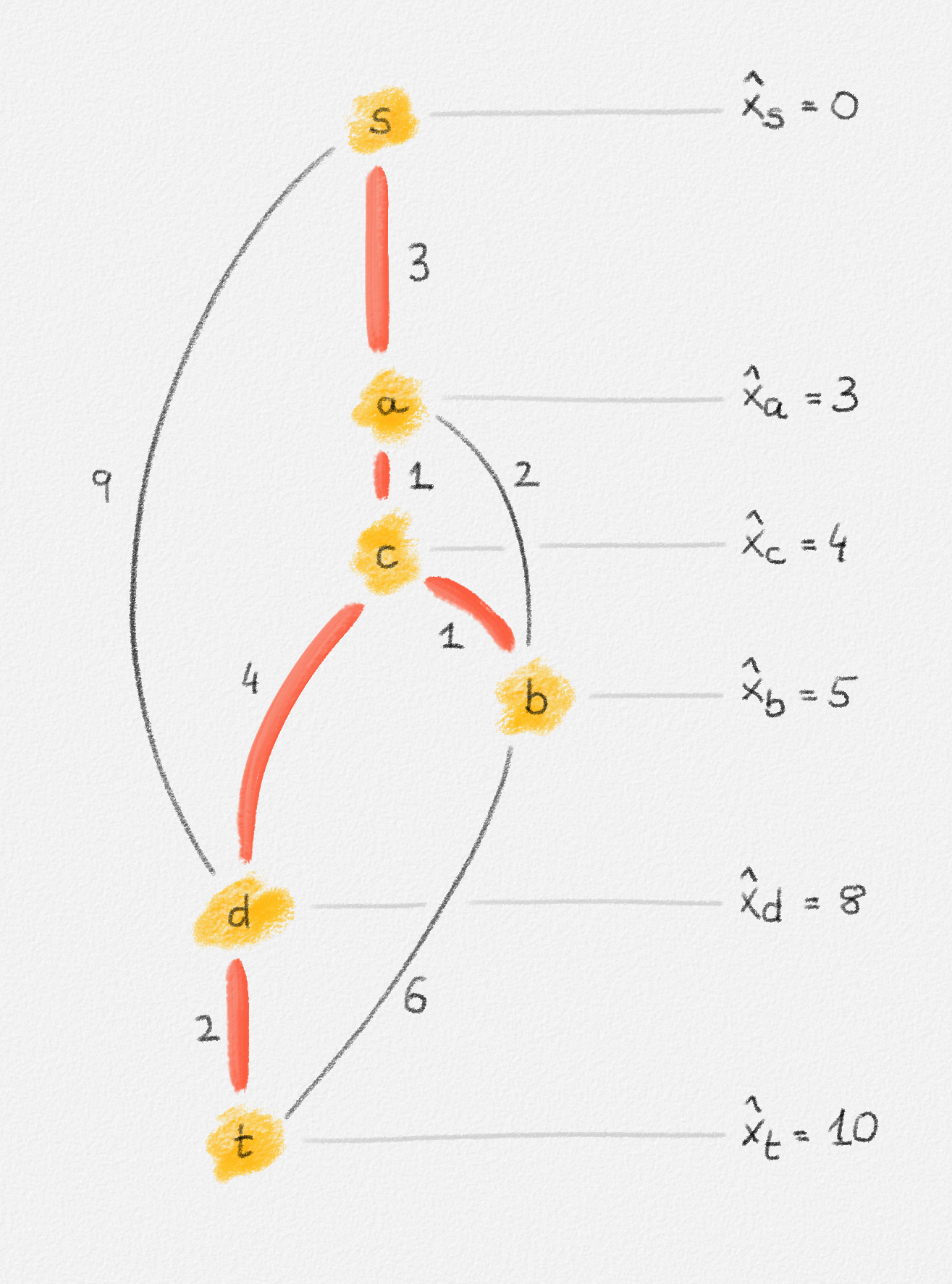

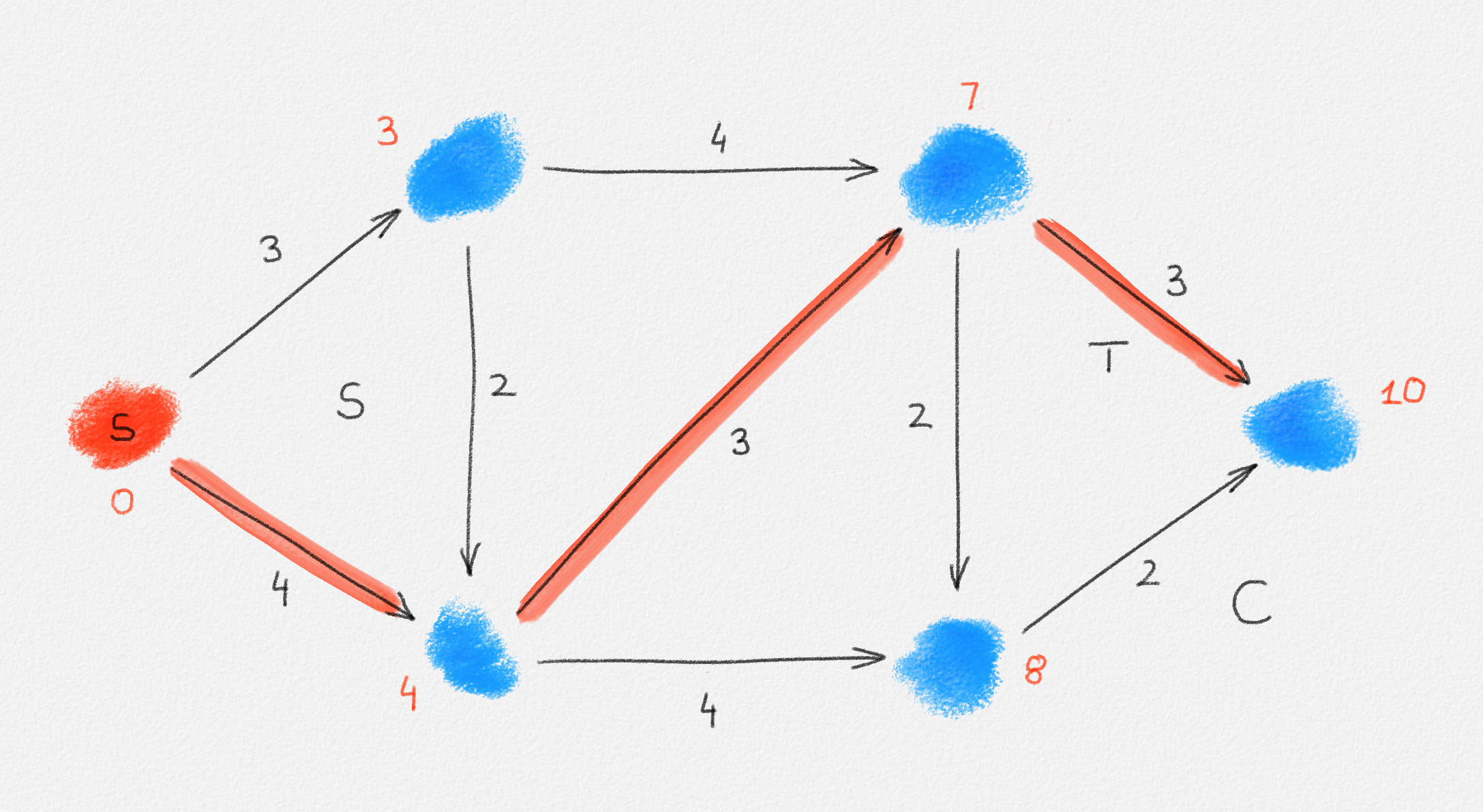

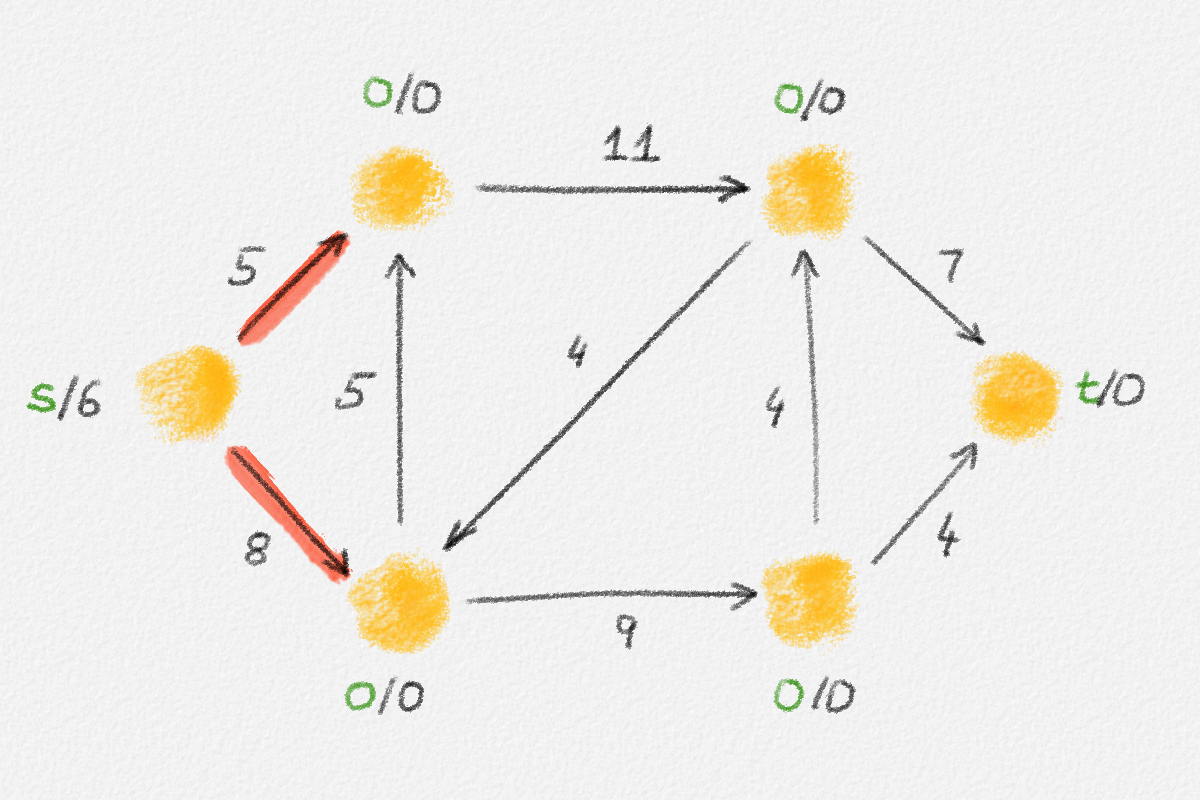

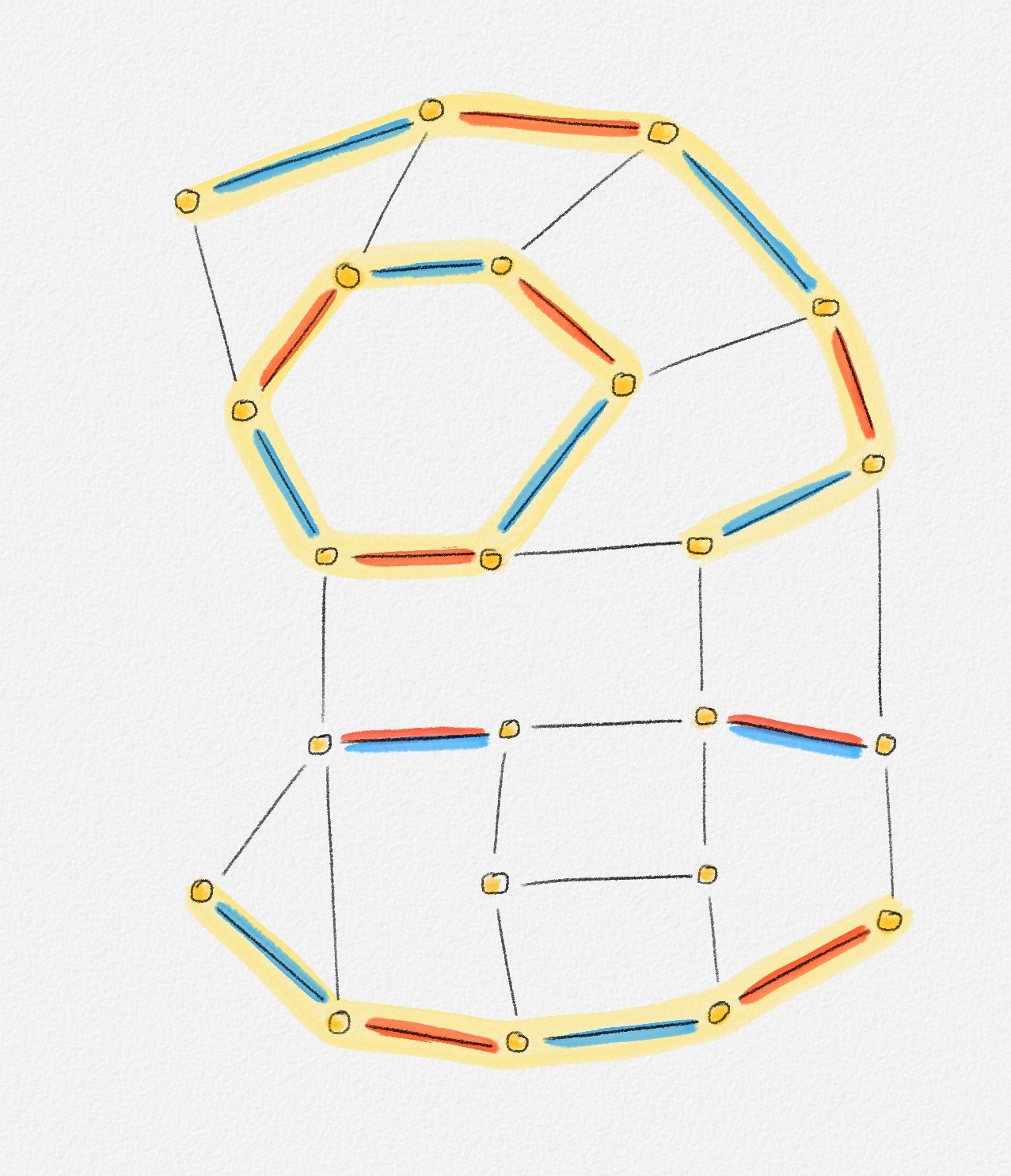

This LP has a rather natural interpretation:2 Think about building a physical model of the graph \(G\). Every vertex is represented by a bead. Every edge \((u,v)\) is a piece of string connecting the beads \(u\) and \(v\). If we now pick up this beads-and-string model of the graph, holding it by the bead \(s\), gravity will pull all beads as far down as possible without tearing any of the strings. This is illustrated in Figure 2.5.

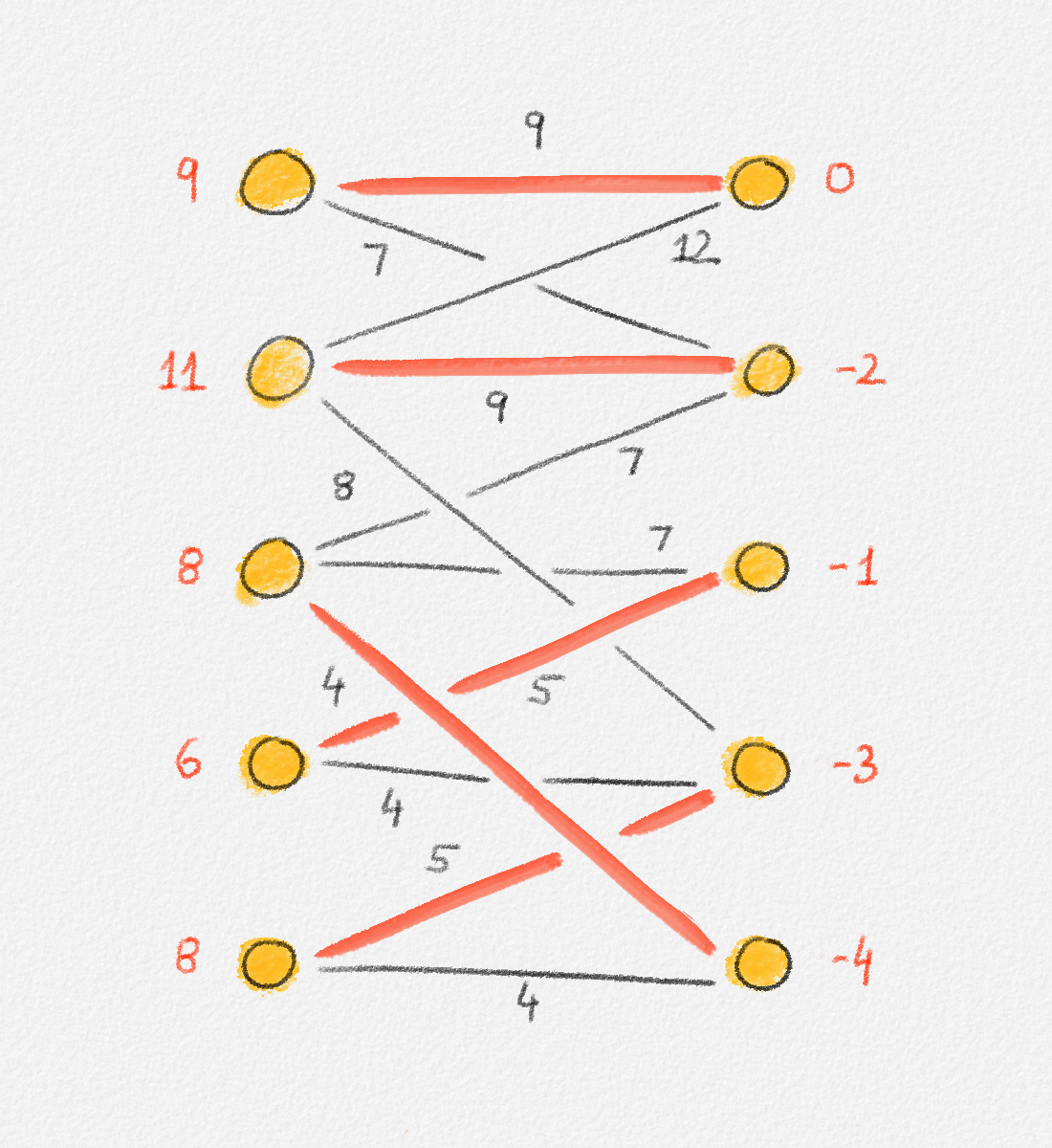

Figure 2.5: Illustration of the interpretation of (2.6) using the beads-and-string model. Holding the model from the vertex \(s\) makes each vertex hang the indicated distance below \(s\).

Thus, if \(\hat x_v\) represents how far below \(s\) we find \(v\), then gravity tries to maximize \(\sum_{v \in v} \hat x_v\). The condition that no string is being torn corresponds to the constraint that \(\hat x_v\) and \(\hat x_u\) can differ by at most \(w_{u,v}\), for every edge \((u,v)\) in the graph. Finally, once \(v\) has been pulled as far below \(s\) as possible by gravity, there must exist a path from \(s\) to \(v\) whose edges have been pulled tight. Otherwise, we would be able to pull \(v\) even farther away from \(s\). This path is a shortest path form \(s\) to \(v\), and its length is \(\hat x_v = \mathrm{dist}_{G,w}(s,v)\). The following lemma proves this claim formally.

Lemma 2.3: The optimal solution \(\hat x\) of the LP (2.6) satisfies \(\hat x_v = \mathrm{dist}_{G,w}(s,v)\) for all \(v \in V\).

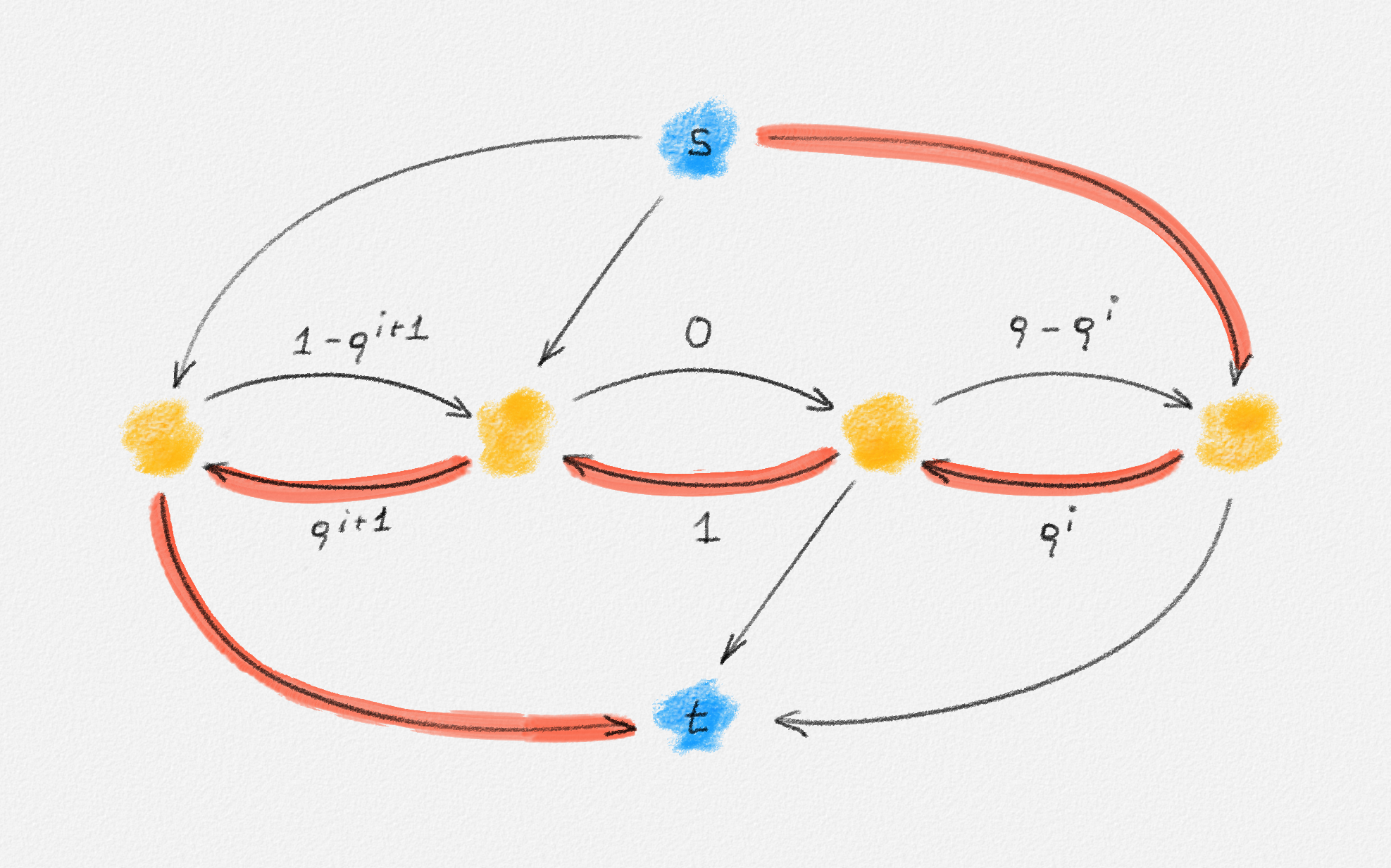

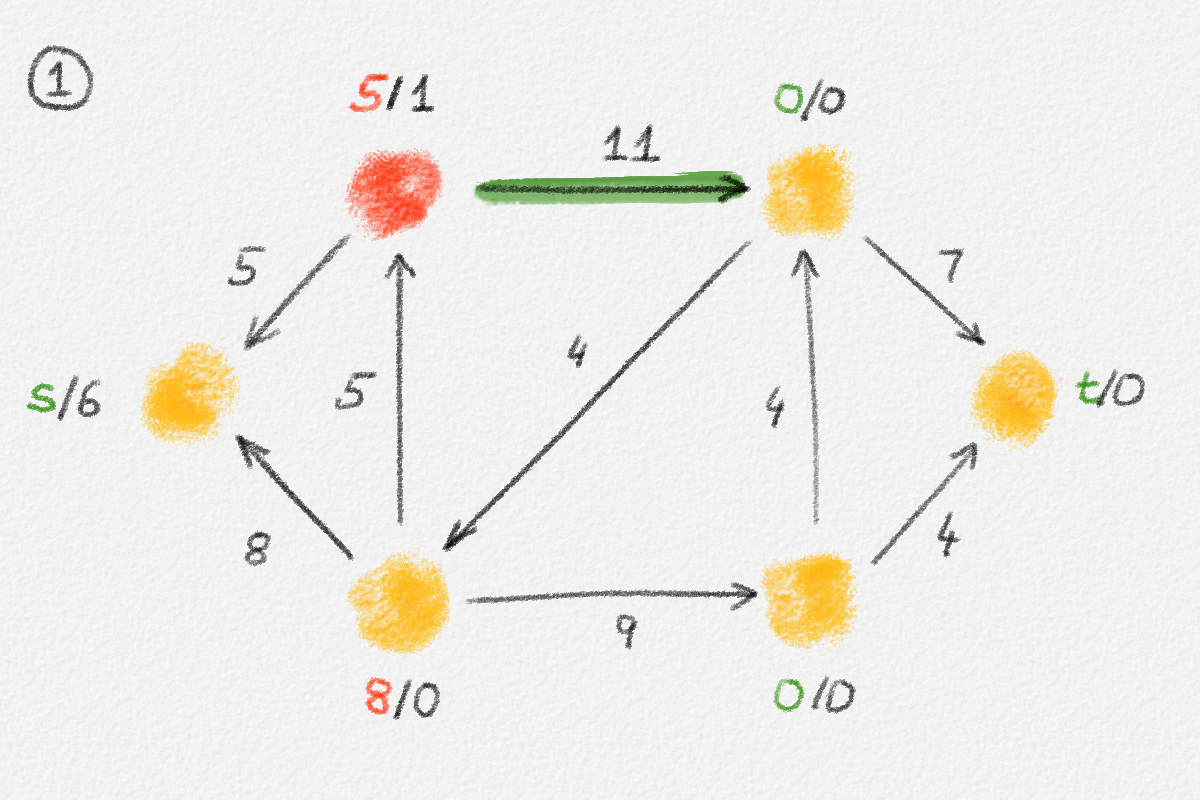

Proof: Consider the subgraph \(H = (V,E') \subseteq G\) such that

\[E' = \bigl\{ (u,v) \in E \mid \hat x_v - \hat x_u = w_{u,v} \bigr\},\]

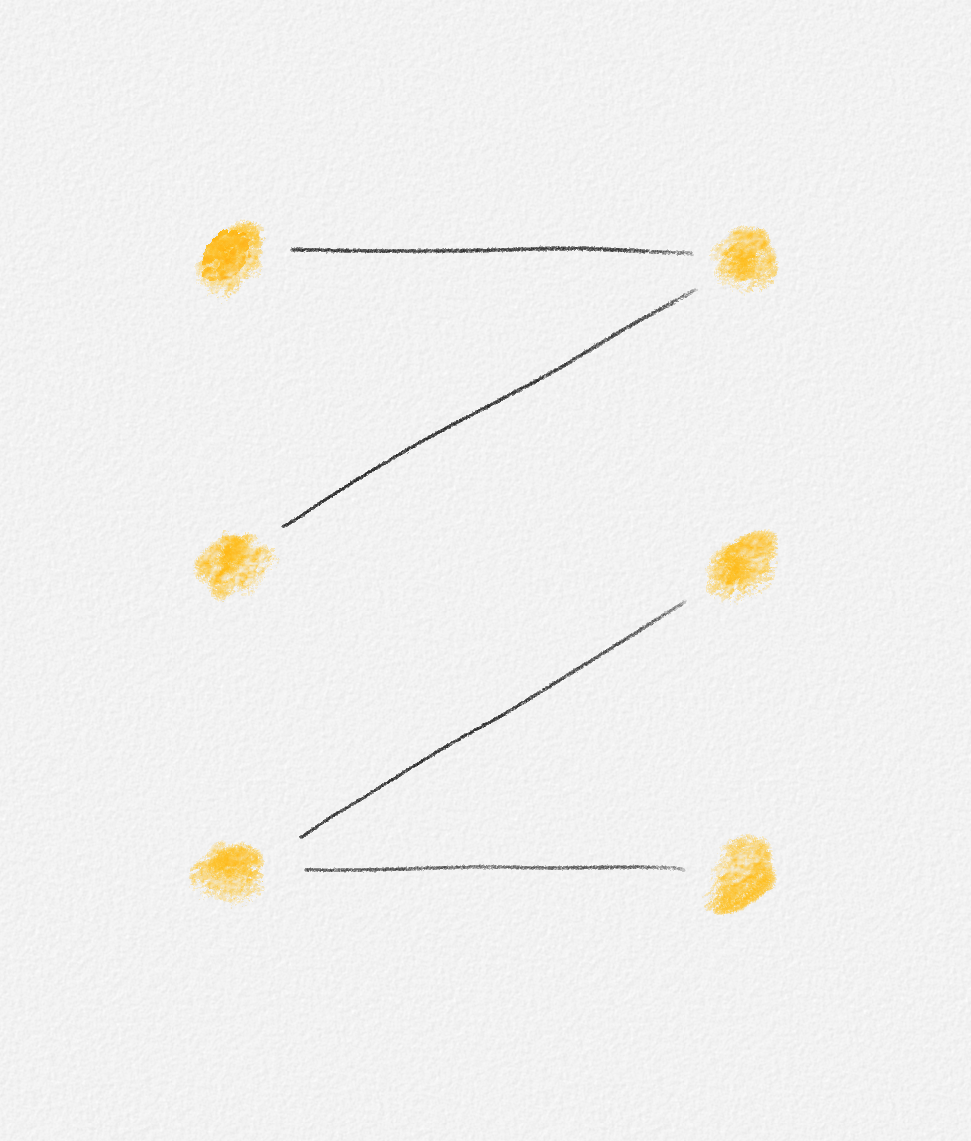

let \(S \subseteq V\) be the set of all vertices reachable from \(s\) in \(H\), and let \(T = V \setminus S\). See Figure 2.6.

Figure 2.6: The sets \(S\) and \(T\) corresponding to the solution \(\hat x\) of (2.6) indicated by the red vertex labels. Black edge labels are the edge lengths. The red edges are tight and form the edge set of \(H\).

First we prove that \(T = \emptyset\), that is, that every vertex is reachable from \(s\) in \(H\).

Let

\[C = \{ (u,v) \in E \mid u \in S, v \in T \}.\]

Since every edge \((u,v) \in C\) has the property that \(u\) is reachable from \(s\) in \(H\) but \(v\) is not, it must satisfy \((u, v) \notin E'\) and, thus, \(\hat x_v - \hat x_u < w_{u,v}\). Therefore,

\[\delta = \min \bigl\{ w_{u,v} - \bigl(\hat x_v - \hat x_u\bigr) \mid (u,v) \in C \bigr\} > 0.\]

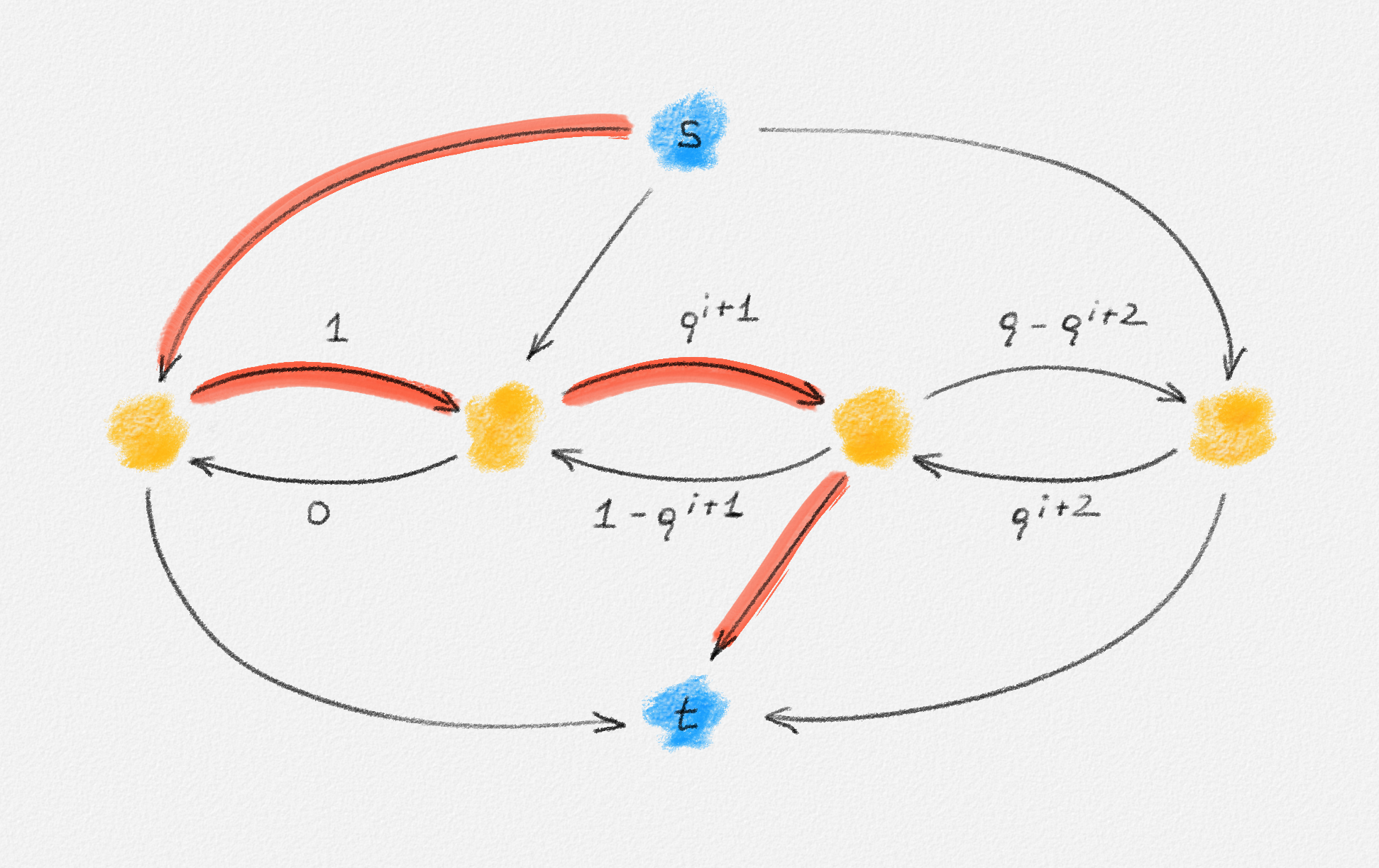

Now define a new solution \(\tilde x\) as

\[\tilde x_v = \begin{cases} \hat x_v & \text{if } v \in S\\ \hat x_v + \delta & \text{if } v \in T \end{cases}.\]

See Figure 2.7.

Figure 2.7: A solution \(\tilde x\) with a higher objective function value than the solution \(\hat x\) above. The new set \(S\) of vertices reachable from \(s\) is bigger.

Observe that \(s \in S\), so \(\tilde x_s = \hat x_s = 0\) because \(\hat x\) is a solution of (2.6).

For every edge \((u,v) \in E\), if \(v \in S\), then \(\tilde x_v = \hat x_v\) and \(\tilde x_u \ge \hat x_u\). Thus, \(\tilde x_v - \tilde x_u \le \hat x_v - \hat x_u \le w_{u,v}\).

If \(u, v \in T\), then \(\tilde x_v = \hat x_v + \delta\) and \(\tilde x_u = \hat x_u + \delta\). Thus, \(\tilde x_v - \tilde x_u = \hat x_v - \hat x_u \le w_{u, v}\).

Finally, if \(u \in S\) and \(v \in T\), then \(\tilde x_v = \hat x_v + \delta\) and \(\tilde x_u = \hat x_u\). Moreover, \((u, v) \in C\), so \(\delta \le w_{u, v} - \bigl(\hat x_v - \hat x_u\bigr)\). Therefore, \(\tilde x_v - \tilde x_u \le \hat x_v - \hat x_u + \delta \le w_{u,v}\).

This shows that \(\tilde x\) is a feasible solution of (2.6).

Next observe that \(\sum_{v \in V} \tilde x_v - \sum_{v \in V} \hat x_v = \delta|T|\). Since \(\tilde x\) is a feasible solution and \(\hat x\) is an optimal solution of (2.6), we have \(\sum_{v \in V} \tilde x_v - \sum_{v \in V} \hat x_v \le 0\), that is, \(T = \emptyset\) and \(S = V\).

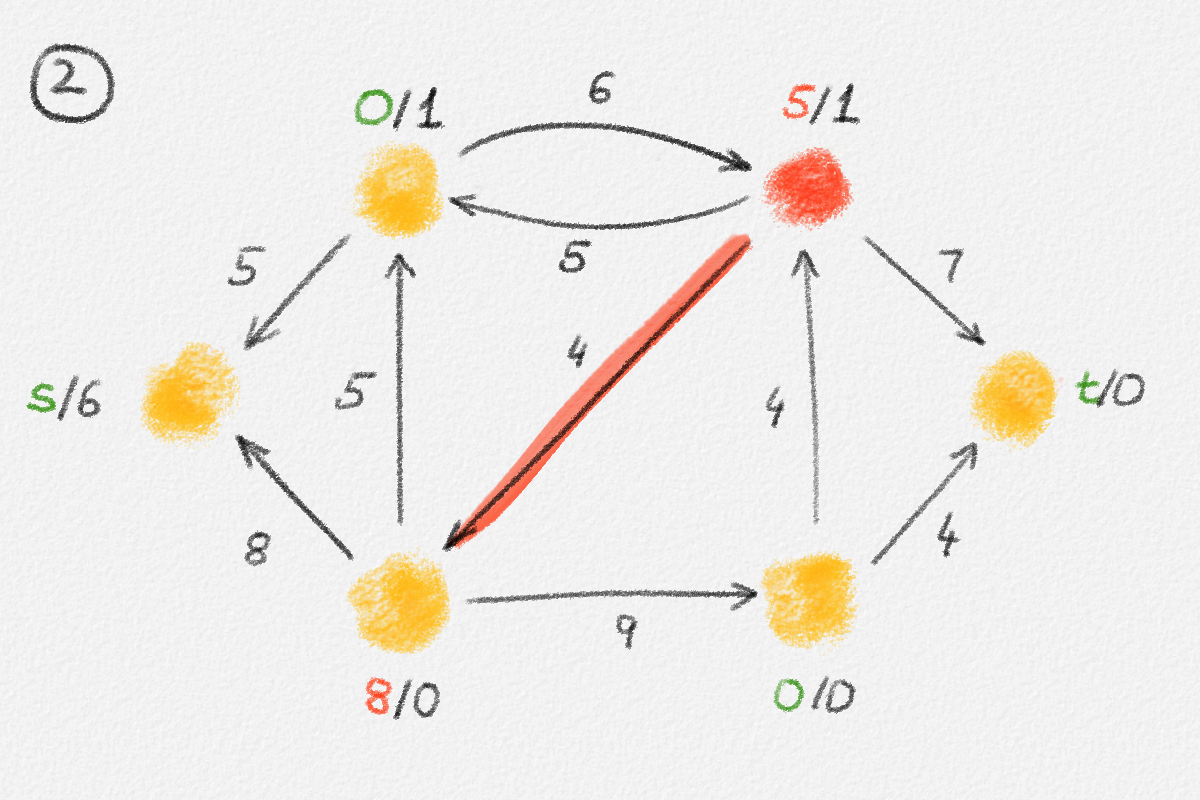

The lemma now follows if every vertex \(v \in S\) satisfies \(\hat x_v = \mathrm{dist}_{G,w}(s,v)\). Let \(P = \langle v_0, \ldots, v_k \rangle\) be a path from \(s\) to \(v\) in \(H\). See Figure 2.8.

Figure 2.8: An optimal solution to (2.6) for the graph in Figure 2.6. The red path is a shortest path from \(s\) to the rightmost vertex.

Then \(\hat x_{v_0} = \hat x_s = 0\) and for \(1 \le i \le k\), \(\hat x_{v_i} = \hat x_{v_{i-1}} + w_{v_{i-1},v_i}\) because \(P\) is a path in \(H\). Thus,

\[\hat x_v = \hat x_{v_k} = \sum_{i=1}^k w_{v_{i-1},v_i} = w(P).\]

Since \(P\) is also a path from \(s\) to \(v\) in \(G \supseteq H\), this implies that

\[\hat x_v \ge \mathrm{dist}_{G,w}(s,v).\tag{2.7}\]

Conversely, for the shortest path \(\Pi_{G,w}(s,v) = \langle u_0, \ldots, u_\ell \rangle\) from \(s\) to \(v\) in \(G\), \(\hat x_{u_0} = \hat x_s = 0\) and, since \(\hat x\) is a solution of (2.6), \(\hat x_{u_i} \le \hat x_{u_{i-1}} + w_{u_{i-1},u_i}\) for all \(1 \le i \le \ell\). Thus,

\[\hat x_v = \hat x_{u_\ell} \le \sum_{i=1}^\ell w_{u_{i-1},u_i} = \mathrm{dist}_{G,w}(s,v).\tag{2.8}\]

Together, equations (2.7) and (2.8) show that \(\hat x_v = \mathrm{dist}_{G,w}(s,v)\). This finishes the proof. ▨

It may be useful to remind you at this point that our goal was to derive an LP formulation of the SSSP problem, that is, a linear program whose optimal solution consists of the distances from \(s\) to all vertices in \(G\). We proved that (2.6) is such an LP formulation. At this point, you may wonder how we go about finding such an optimal solution. We defer this discussion until Chapter 3, when we discuss the Simplex Algorithm. Our goal at this point is not to find optimal solutions to various optimization problems but to study how we can use linear programs as a mechanism to state these problems. We will later see that these formulations are useful because we can either solve the LPs using the Simplex Algorithm or the LP formulation sheds some light on the structure of the problem, which we can exploit in some algorithm we design to solve the problem.

This LP assigns a variable \(x_v = \mathrm{dist}_{G,w}(s,v)\) to each vertex \(v \in V\) to adhere to the common notation used in LPs.

At least, this is true for undirected graphs. Given that the edges \((u, v)\) and \((v, u)\) can have different lengths in a directed graph, this interpretation as a beads-and-string model falls apart for directed graphs. As Lemma 2.3 shows, the LP formulation is correct for both undirected and directed graphs.

2.3. Minimum Spanning Tree as an ILP

2.3.1. The Minimum Spanning Tree Problem

We have shown that the single-source shortest paths problem can be formulated as a linear program. Next let us look at a problem for which this is harder to do:

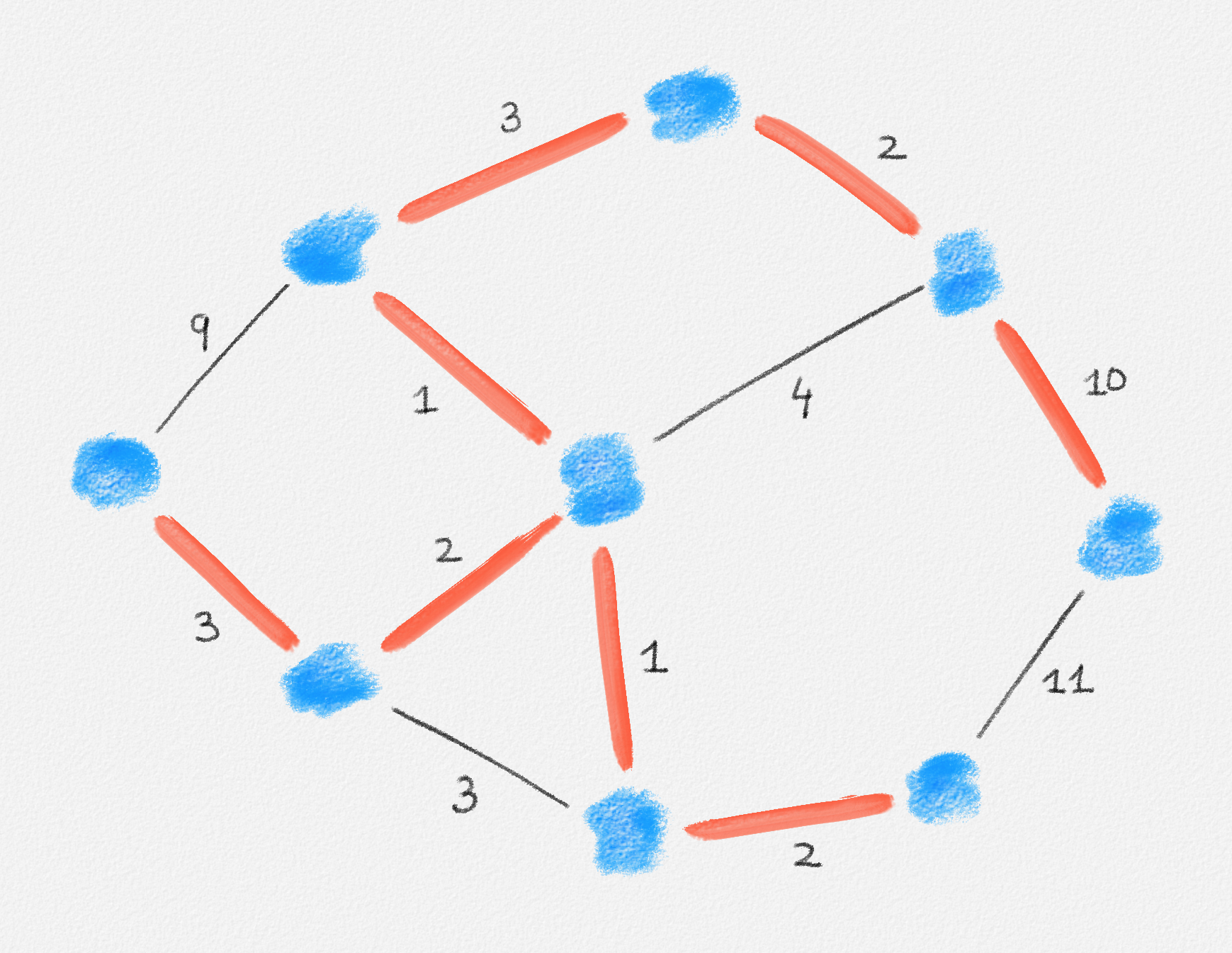

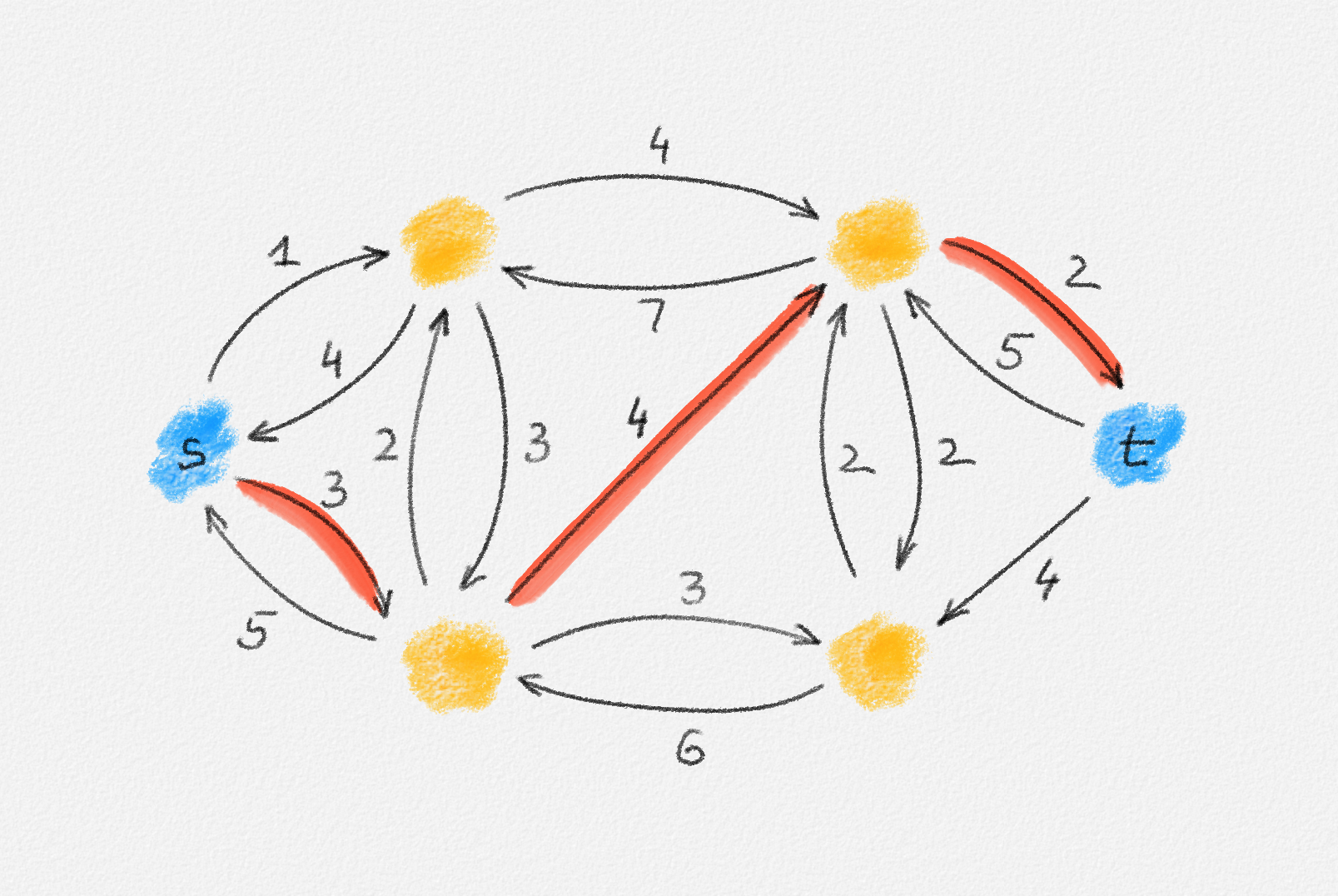

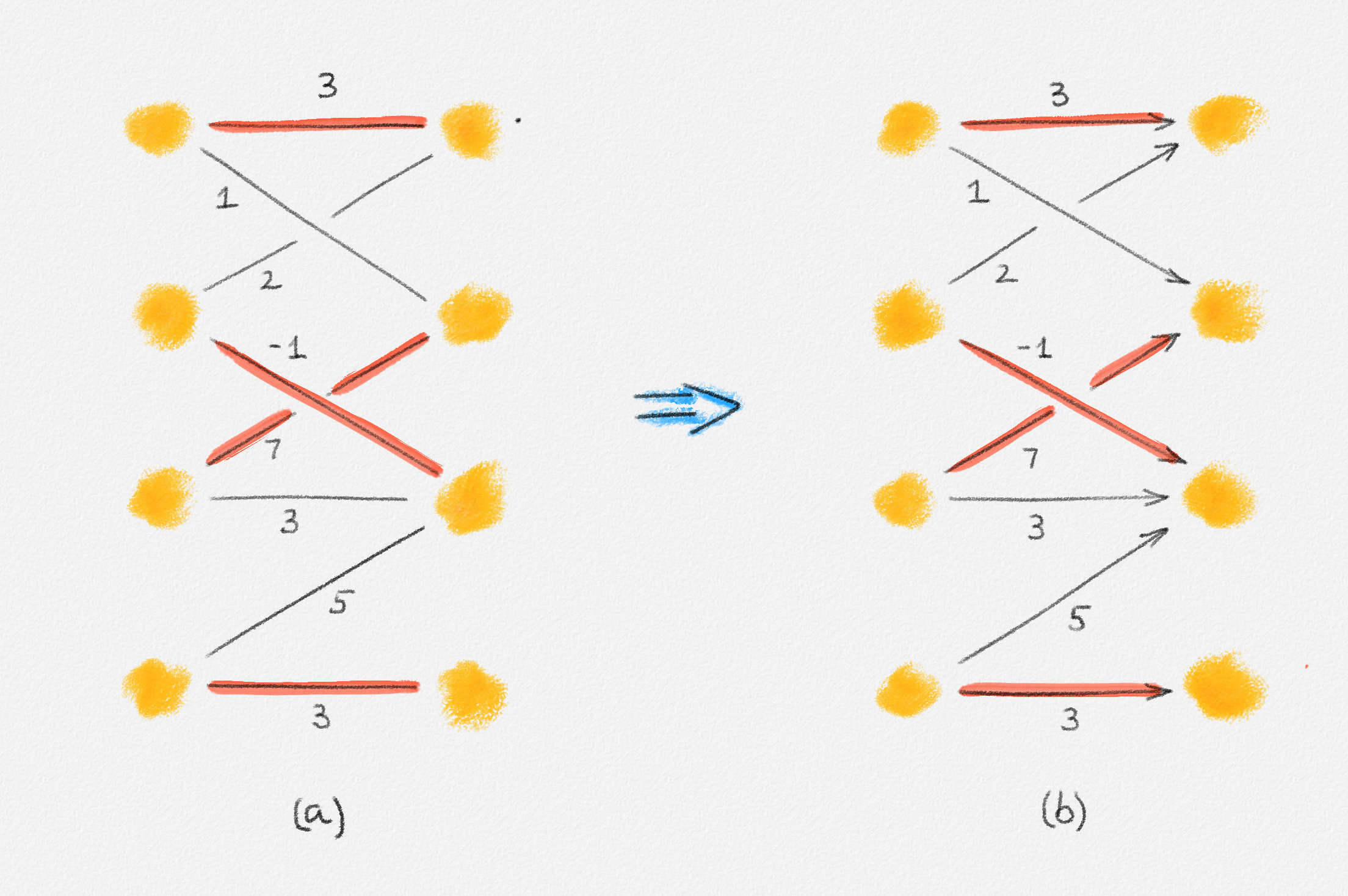

Given an edge-weighted connected undirected graph \((G,w)\), a minimum spanning tree (MST) of \((G,w)\) is a tree \(T = (V,E')\) with \(E' \subseteq E\) and such that every tree \(T' = (V,E'')\) with \(E'' \subseteq E\) satisfies \(w(E'') \ge w(E')\). See Figure 2.9.

Figure 2.9: A minimum spanning tree shown in red. The edge labels are the edge weights.

Minimum spanning tree problem: For an edge-weighted graph \((G,w)\), compute a spanning tree \(T\) of \(G\) of minimum weight.

2.3.2. Two Natural ILP Formulations of the MST Problem

The LP formulation of the SSSP problem is a fairly direct translation of the combinatorial formulation of the problem. When it comes to comparing greedy algorithms for the SSSP and MST problems, Kruskal's or Prim's algorithm seems to be more straightforward than Dijkstra's algorithm, at least in terms of the correctness proof. It is thus somewhat surprising that obtaining an efficient solution for the MST problem based on linear programming is significantly harder than for the SSSP problem.

Let us make a seemingly innocent change: We restrict the variables in the LP to being integers. An LP with this restriction is called, quite naturally, an integer linear program.

An integer linear program (ILP) is a linear program with the added constraint that all variable values in a feasible solution must be integers.

Since the MST problem is to choose the right subset of edges in \(E\) to be included in the edge set \(E'\) of the MST, it is natural to represent the set \(E'\) as a set of variables \(\{x_e \mid e \in E\}\) such that \(x_e = 1\) if \(e \in E'\) and \(x_e = 0\) if \(e \notin E'\). The weight of the MST \(T = (V, E')\) is then

\[w(T) = \sum_{e \in E} w_e x_e.\]

This is the objective function to be minimized. The constraints of the ILP need to enforce that \(T\) is a tree, that is, that \(T\) is connected and acyclic. The following exercise will be helpful in constructing the right set of constraints:

Exercise 2.3: Prove that a graph \(G\) on \(n\) vertices is a tree if and only if it has \(n - 1\) edges and

- Has no cycle or

- Is connected.

Our first two ILP formulations of the MST problem both enforce the constraint that \(T\) has \(n - 1\) edges:

\[\sum_{e \in E} x_e = n - 1.\]

To enforce that \(T\) is a tree, we need to add constraints that ensure that \(T\) is acyclic or that \(T\) is connected.

Enforcing That \(\boldsymbol{T}\) is Acyclic

Since \(T\) is a subgraph of \(G\), the only cycles that \(T\) can contain are cycles in \(G\). Thus, to ensure that \(T\) is acyclic, we need to ensure that there is no cycle \(C\) in \(G\) such that \(T\) contains all edges in \(C\); at least one edge from each cycle must be excluded from \(T\). This is easy to express as a set of linear constraints:

\[\sum_{e \in C} x_e \le |C| - 1 \quad \forall \text{cycle $C$ in $G$}.\]

This gives our first ILP formulation of the MST problem:

\[\begin{gathered} \text{Minimize } \sum_{e \in E} w_e x_e\\ \begin{aligned} \text{s.t. } \sum_{e \in E} x_e &= n - 1\\ \sum_{e \in C} x_e &\le |C| - 1 && \forall \text{cycle $C$ in $G$}\\ x_e &\in \{0, 1\} && \forall e \in E. \end{aligned} \end{gathered}\tag{2.9}\]

Note that this is an integer linear program: each variable \(x_e\) is not only constrained to be between \(0\) and \(1\)—this would make the problem a linear program—but is additionally required to be an integer. Since \(0\) and \(1\) are the only integers between \(0\) and \(1\), we thus require that \(x_e \in \{0, 1\}\) for all \(e \in E\).

Enforcing That \(\boldsymbol{T}\) is Connected

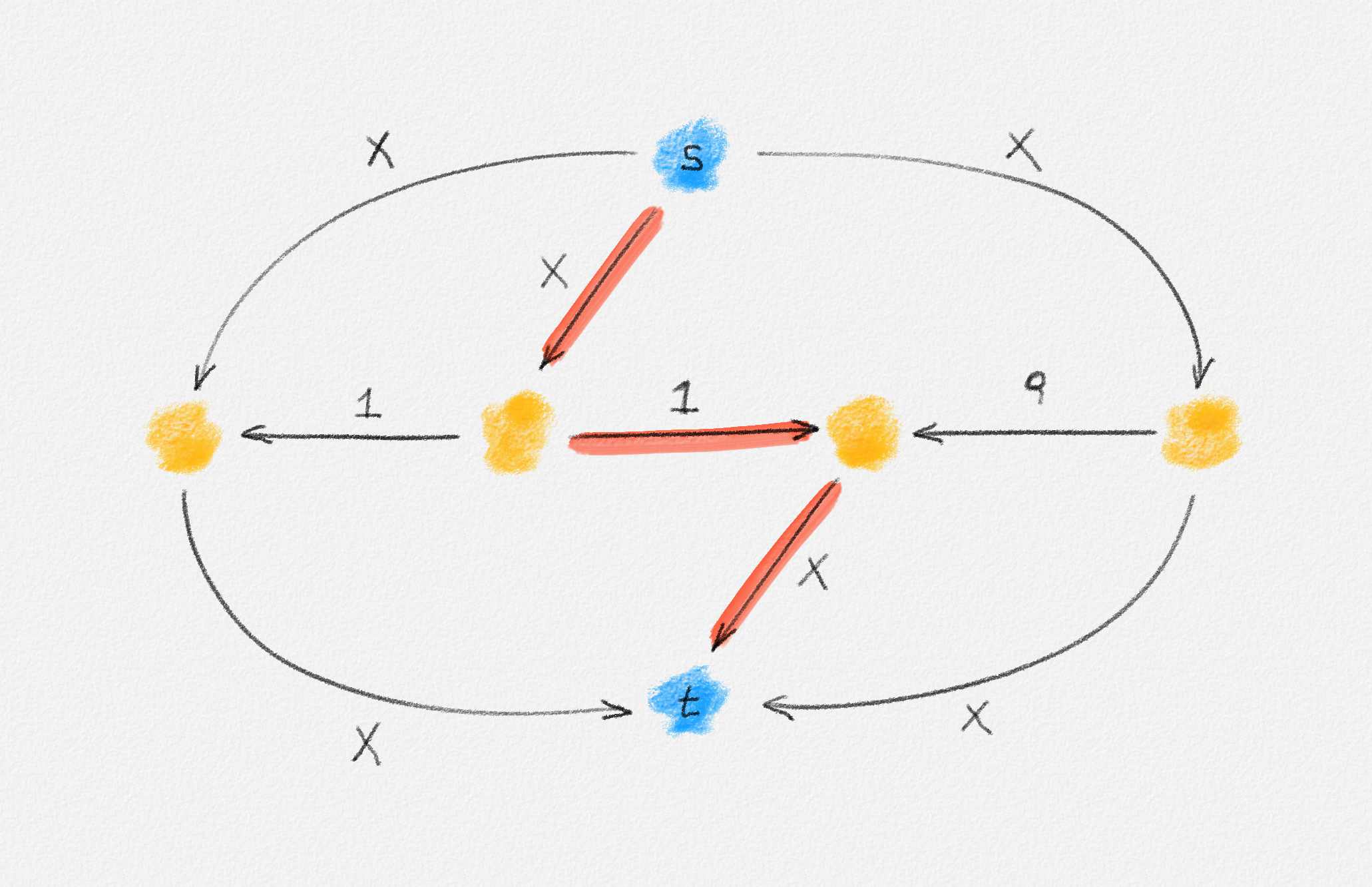

Our second ILP formulation of the MST problem ensures that \(T\) is a tree by requiring that it has \(n - 1\) edges and is connected. How can we express that a graph is connected? To do so, we need the notion of a cut:

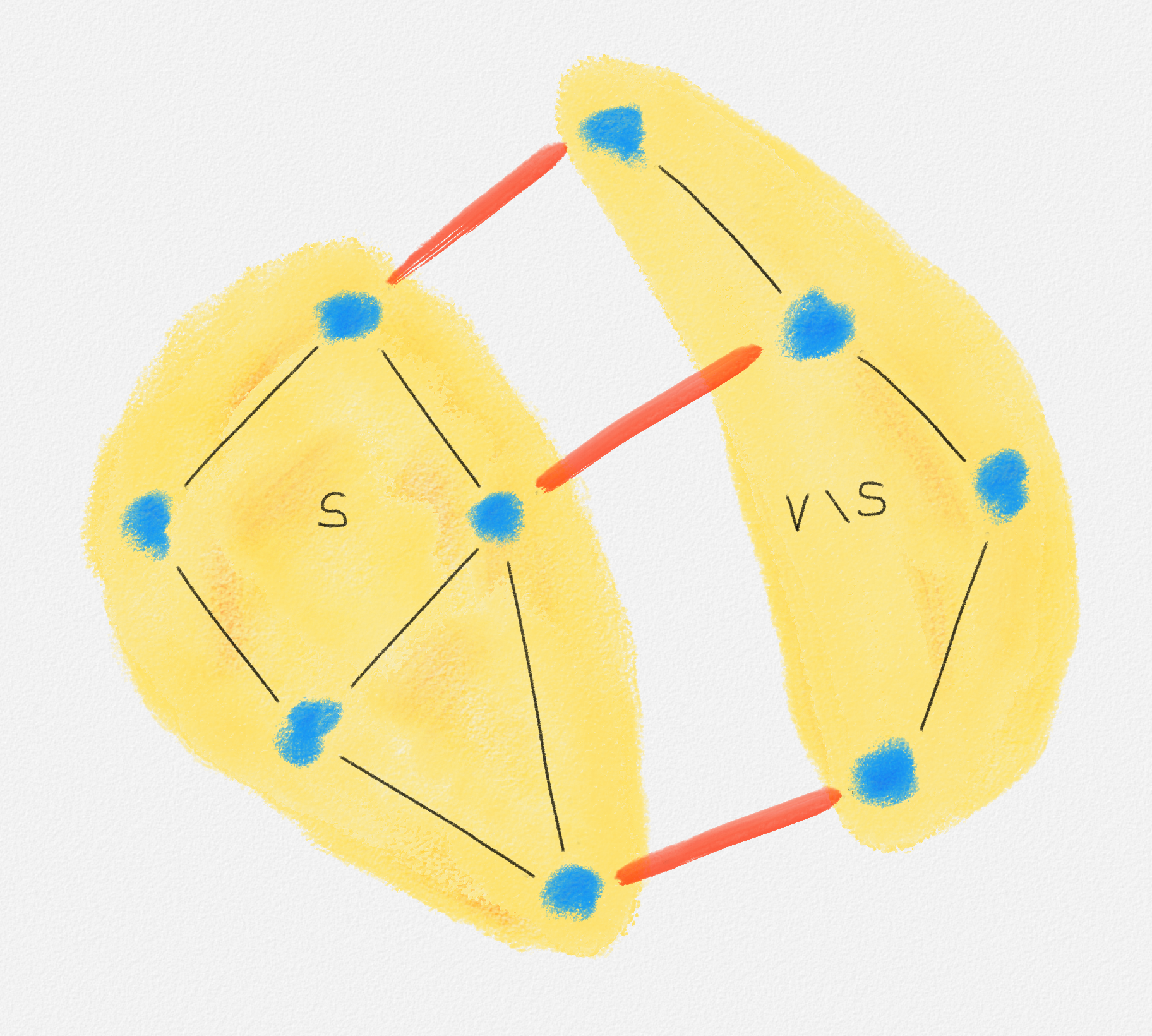

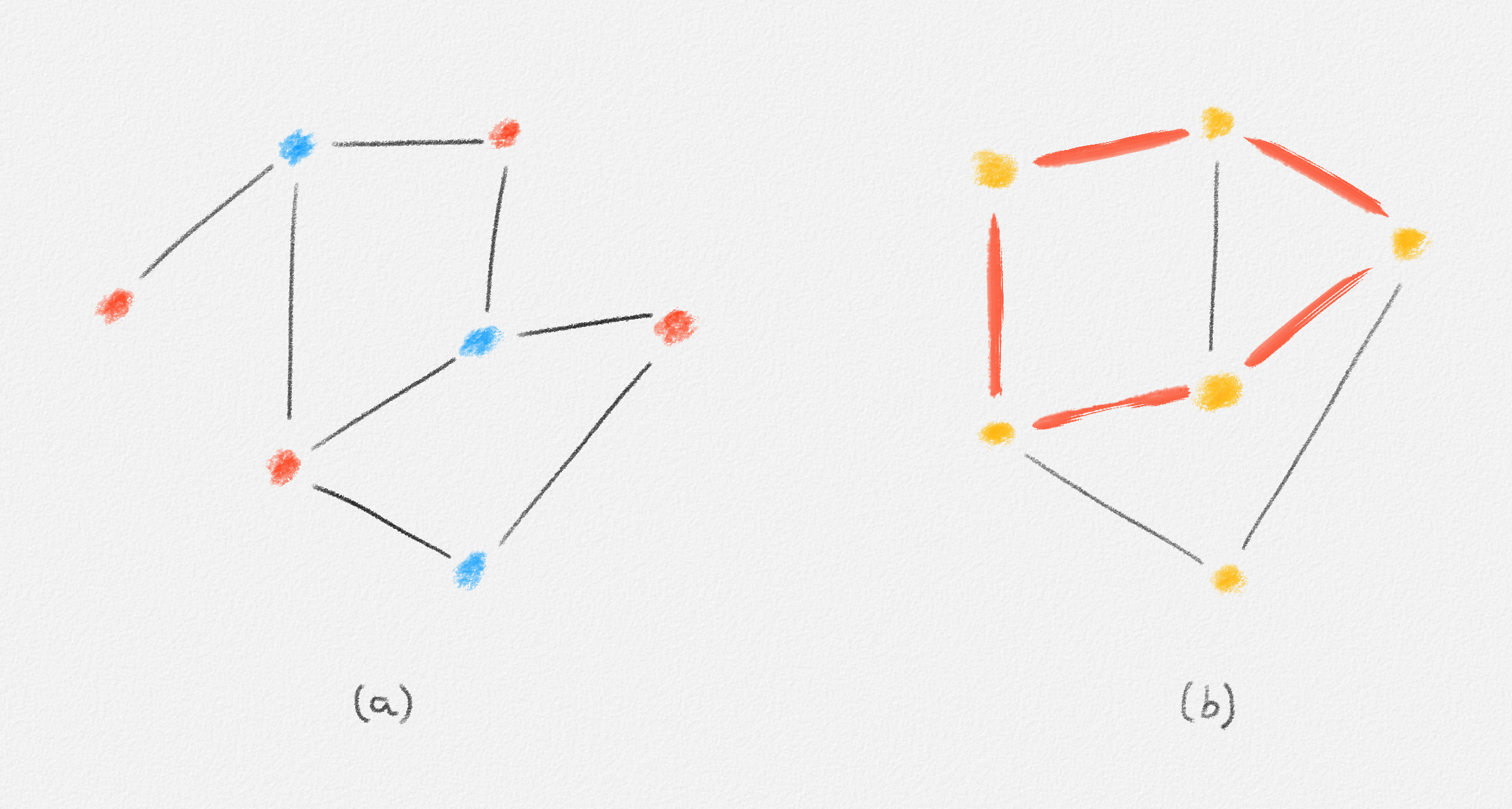

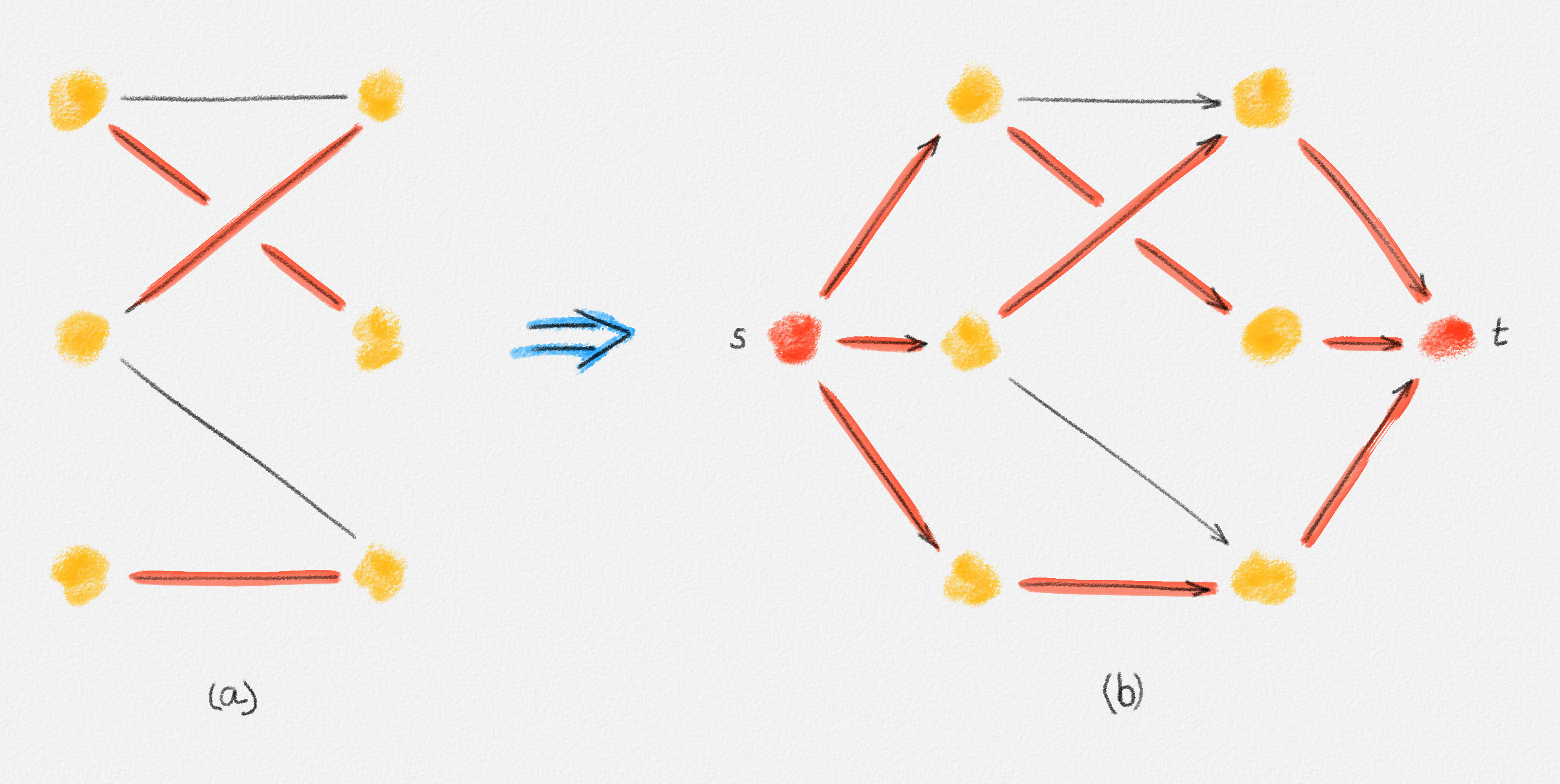

A cut in a graph \(G = (V, E)\) is a partition of \(V\) into two non-empty subsets \(S\) and \(V \setminus S\). See Figure 2.10.

Figure 2.10: A cut \((S, V \setminus S)\). The red edges cross the cut.

Since the set \(V \setminus S\) is fixed once we specify \(S\), we will usually refer to any non-empty proper subset \(\emptyset \subset S \subset V\) as a cut. This ensures explicitly that \(S \ne \emptyset\), and the condition \(S \subset V\) ensures that \(V \setminus S \ne \emptyset\), so \((S, V \setminus S)\) is indeed the unique cut that has \(S\) as one of its halves.

An edge crosses the cut \(S\) if it has exactly one endpoint in \(S\). See Figure 2.10.

The following exercise shows how we can use cuts to express that a graph is connected.

Exercise 2.4: Prove that a graph \(G = (V,E)\) is connected if and only if every cut in \(G\) is crossed by at least one edge in \(E\).

Thus, our ILP formulation needs to ensure that the edge set \(E'\) of the tree \(T\) includes at least one edge that crosses each cut of \(G\):

\[\begin{gathered} \text{Minimize } \sum_{e \in E} w_e x_e\\ \begin{aligned} \text{s.t. } \sum_{e \in E} x_e &= n - 1\\ \sum_{e \text{ crosses } S} x_e &\ge 1 && \forall \text{cut $S$ in $G$}\\ x_e &\in \{0, 1\} && \forall e \in E. \end{aligned} \end{gathered}\tag{2.10}\]

From an algorithmic point of view, neither (2.9) nor (2.10) seems to be a very appealing formulation of the MST problem because they both involve a potentially exponential number of constraints: There can be an exponential number of cycles in \(G\) and there are \(2^{n-1} - 1\) cuts in an \(n\)-vertex graph. This suggests that solving either ILP should take exponential time. As we will see in Section 2.4, this is basically correct but not because the number of constraints is exponential but because integer linear programming is much harder than linear programming. So the "innocent change" to restrict variables to having integral values is far from innocent. In contrast, Prim's or Kruskal's algorithm can elegantly compute an MST in polynomial time.

2.3.3. An ILP Formulation of Polynomial Size*

In an attempt to reduce the difficulty of solving the MST problem formulated as an ILP, let us aim to develop an ILP formulation of the MST problem with only a polynomial number of constraints. The following, substantially less intuitive ILP formulation has only \(O\bigl(nm^2\bigr)\) constraints.

The tree structure of \(T\) is enforced using additional variables \(y_e^v \in \{0, 1\}\) and constraints on these variables. Fix an arbitrary vertex \(s \in V\). Then \(y_e^v = 1\) if and only if \(e\) belongs to the path from \(s\) to \(v\) in \(T\). Now the following observations capture that \(T\) is a tree:

-

If the edge \((u,v)\) is in \(T\), then it belongs to the path from \(s\) to \(v\) or to the path from \(s\) to \(u\) but not both. If \((u,v) \notin T\), then it is not on any path. This can be expressed using the following constraint:

\[y_{u,v}^u + y_{u,v}^v = x_{u,v} \quad \forall (u,v) \in E.\]

-

Every vertex \(v \ne s\) has exactly one incident edge \((u,v)\) that belongs to the path from \(s\) to \(v\):

\[\sum_{(u,v) \in E} y_{u,v}^v = 1 \quad \forall v \ne s.\]

For \(v = s\), none of the edges incident to \(s\) belongs to the path from \(s\) to \(s\):

\[\sum_{(s,v) \in E} y_{s,v}^s = 0.\]

-

If the edge \((u,v)\) belongs to the path from \(s\) to \(v\) and the edge \((v,w)\) belongs to the path from \(s\) to some vertex \(z\), then the edge \((u,v)\) also belongs to the path from \(s\) to \(z\):

\[y_{u,v}^z \ge y_{u,v}^v + y_{v,w}^z - 1 \quad \forall z \in V, (u,v) \in E, (v,w) \in E.\]

This gives the following ILP formulation of the MST problem:

\[\begin{gathered} \text{Minimize } \sum_{e \in E} w_e x_e\\ \begin{aligned} \text{s.t. } \phantom{y_{u,v}^u + y_{u,v}^v} \llap{\displaystyle\sum_{e \in E} x_e} &= n - 1\\ y_{u,v}^u + y_{u,v}^v &= x_{u,v} && \forall (u,v) \in E\\ \sum_{(s,v) \in E} y_{s,v}^s &= 0\\ \sum_{(u,v) \in E} y_{u,v}^v &= 1 && \forall v \ne s\\ y_{u,v}^z &\ge y_{u,v}^v + y_{v,w}^z - 1 && \forall z \in V, (u,v) \in E, (v,w) \in E\\ x_e &\in \{0, 1\} && \forall e \in E\\ y_e^v &\in \{0, 1\} && \forall v \in V, e \in E. \end{aligned} \end{gathered}\tag{2.11}\]

This ILP does indeed have polynomial size because, apart form the first three constraints, there are \(n - 1\) copies of the fourth constraint, one per vertex \(v \ne s\); no more than \(nm^2\) copies of the fifth constraint, at most one per vertex \(z \in V\) and per pair of edges \((u,v)\) and \((v, w)\) in \(E\); \(m\) copies of the sixth constraint, one per edge; and \(nm\) copies of the last constraint, one per pair \((v, e)\), where \(v\) is a vertex and \(e\) is an edge.

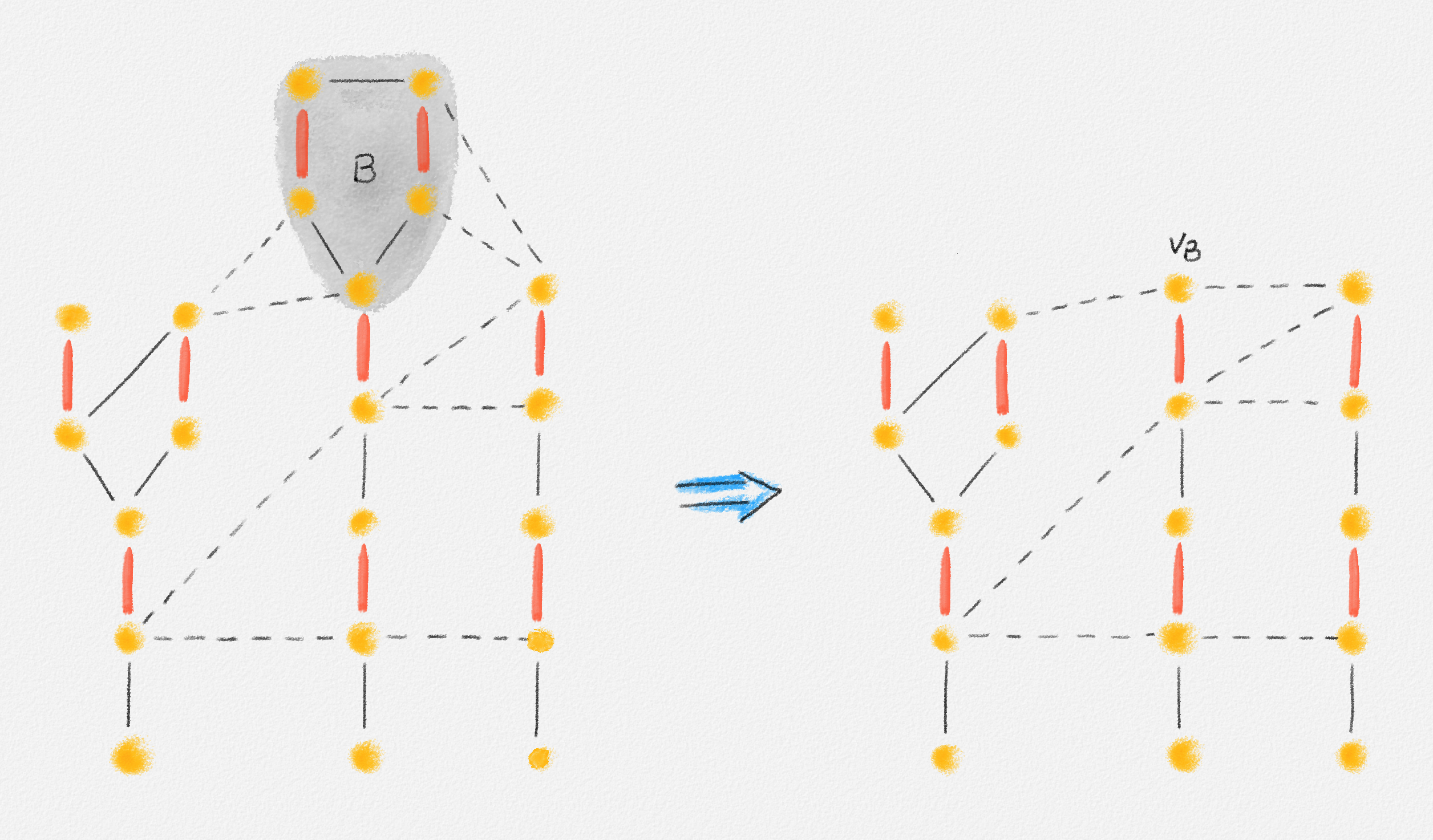

While the correctness of (2.9) and (2.10) should be obvious, the correctness of (2.11) requires some effort to prove. The next lemma and corollary show that it is indeed a correct formulation of the MST problem.

Lemma 2.4: For any undirected edge-weighted graph \((G,w)\), \(\bigl(\hat x, \hat y\bigr)\) is a feasible solution of (2.11) if and only if the subgraph \(T = (V, E')\) of \(G\) with \(E' = \bigl\{ e \in E \mid \hat x_e = 1 \bigr\}\) is a spanning tree of \(G\).

Proof: The first constraint of (2.11) ensures that every feasible solution \(\bigl(\hat x, \hat y\bigr)\) satisfies \(\sum_{e \in E} \hat x_e = n - 1\). Since an \(n\)-vertex graph is a tree if and only if it has \(n - 1\) edges and is acyclic, it therefore suffices to prove that \(\bigl(\hat x, \hat y\bigr)\) is a feasible solution of (2.11) if and only if \(T\) contains no cycle.

So assume first, for the sake of contradiction, that \(\bigl(\hat x, \hat y\bigr)\) is a feasible solution and that \(T\) contains a cycle \(C = \langle v_0, v_1, \ldots, v_k \rangle\). Then \(\hat x_{v_{i-1},v_i} = 1\) for all \(1 \le i \le k\). Since \(\hat y_{v_{i-1},v_i}^{v_{i-1}} + \hat y_{v_{i-1},v_i}^{v_i} = \hat x_{v_{i-1},v_i}\), this implies that either \(\hat y_{v_{i-1},v_i}^{v_{i-1}} = 1\) or \(\hat y_{v_{i-1},v_i}^{v_i} = 1\) for all \(1 \le i \le k\). If there exists a vertex \(v_i\) such that \(\hat y_{v_{i-1},v_i}^{v_i} = 1\) and \(\hat y_{v_i,v_{i+1}}^{v_i} = 1\), then \(\sum_{(u,v_i) \in E} \hat y_{u,v_i}^{v_i} > 1\), a contradiction. Thus, we can assume w.l.o.g. that \(\hat y_{v_{i-1},v_i}^{v_i} = 1\) and \(\hat y_{v_{i-1},v_i}^{v_{i-1}} = 0\) for all \(1 \le i \le k\). In particular, \(y_{v_0,v_1}^{v_0} = 0\).

Now observe that \(\hat y_{v_{i-1},v_i}^{v_k} = 1\) for all \(1 \le i \le k\). The proof is by induction on \(j = k - i\). For the base case, \(i = k\), we just observed that \(\hat y_{v_{k-1},v_k}^{v_k} = 1\). For the inductive step, \(i < k\), observe that, by the inductive hypothesis, \(\hat y_{v_i,v_{i+1}}^{v_k} = 1\). Since \(\hat y_{v_{i-1},v_i}^{v_i} = 1\), \(\hat y_{v_{i-1},v_i}^{v_k} \ge \hat y_{v_{i-1},v_i}^{v_i} + y_{v_i,v_{i+1}}^{v_k} - 1\), and \(\hat y_{v_{i-1},v_i}^{v_k} \in \{0,1\}\), we have \(\hat y_{v_{i-1},v_i}^{v_k} = 1\).

Since \(\hat y_{v_{i-1},v_i}^{v_k} = 1\) for all \(1 \le i \le k\), we have in particular that \(\hat y_{v_0,v_1}^{v_k} = 1\). Since \(C\) is a cycle, we have \(v_k = v_0\), that is, \(\hat y_{v_0,v_1}^{v_0} = 1\), a contradiction because we observed above that \(\hat y_{v_0, v_1}^{v_0} = 0\). Thus, \(T\) cannot contain any cycles if \(\bigl(\hat x, \hat y\bigr)\) is a feasible solution of (2.11).

Now assume that \(T\) is a tree and define \(\hat x_e = 1\) if and only if \(e \in T\) and \(\hat y_e^v = 1\) if and only if the edge \(e\) is on the path from \(s\) to \(v\) in \(T\). Since \(T\) has \(n - 1\) edges, we have \(\sum_{e \in E} \hat x_e = n - 1\).

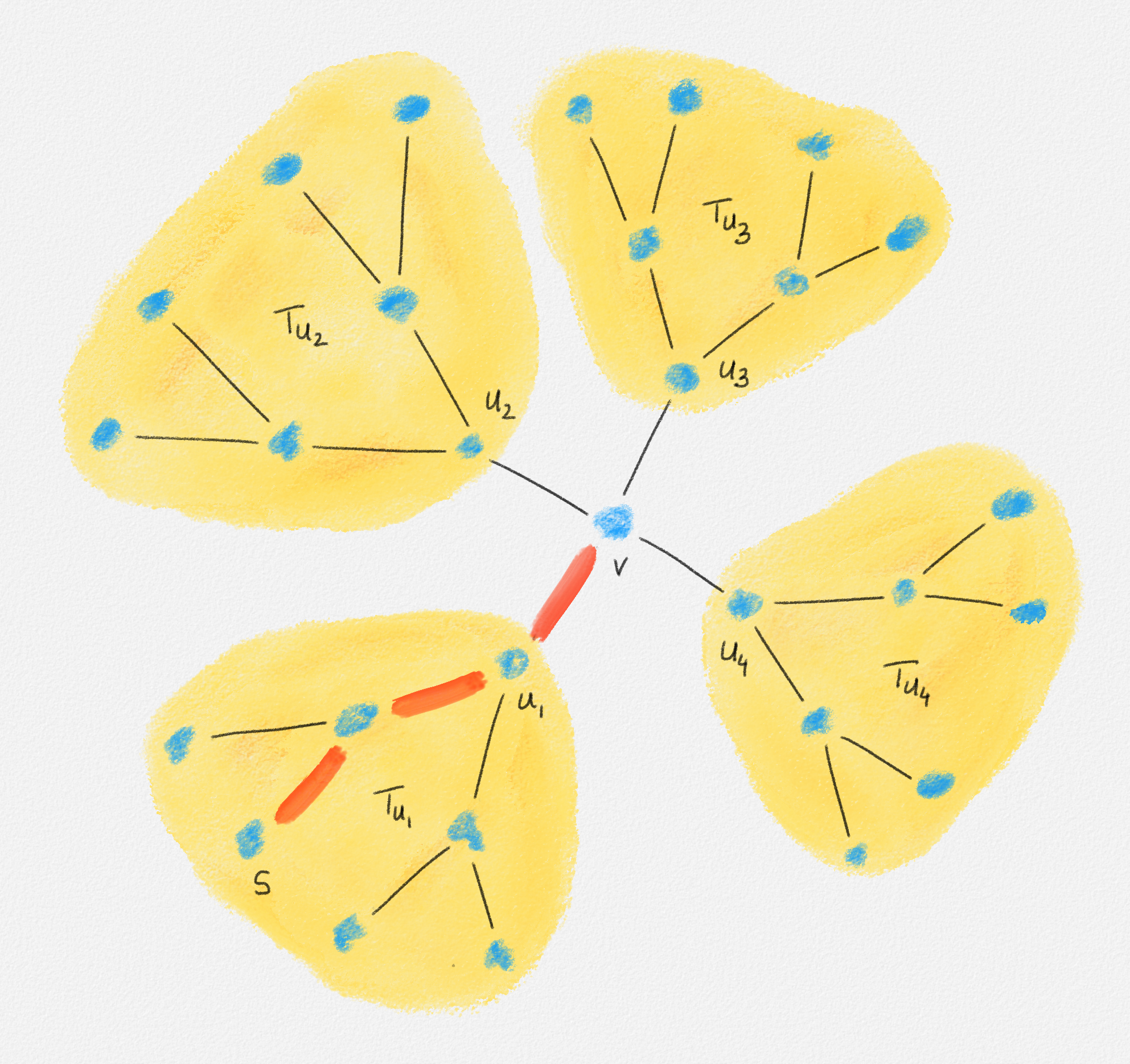

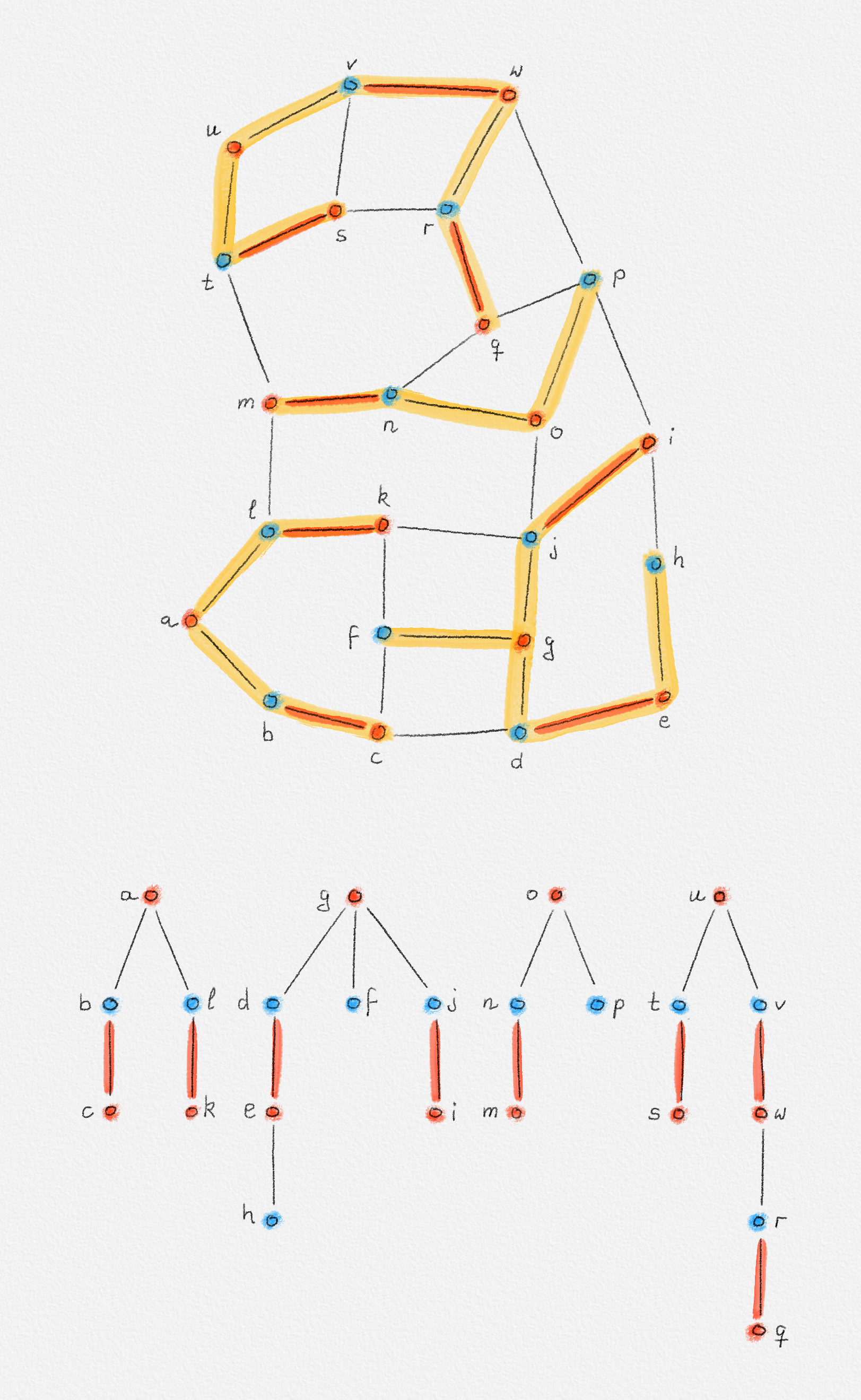

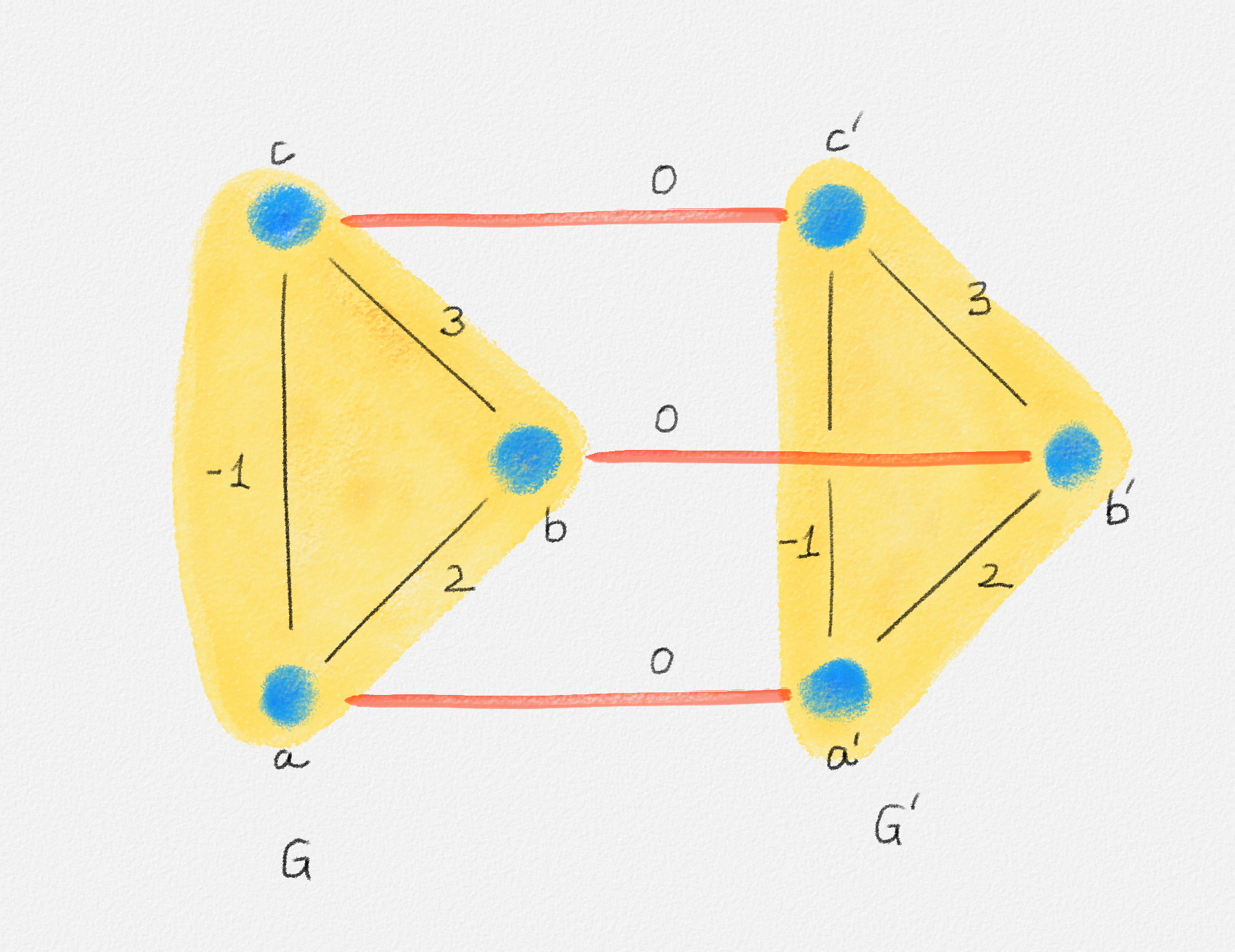

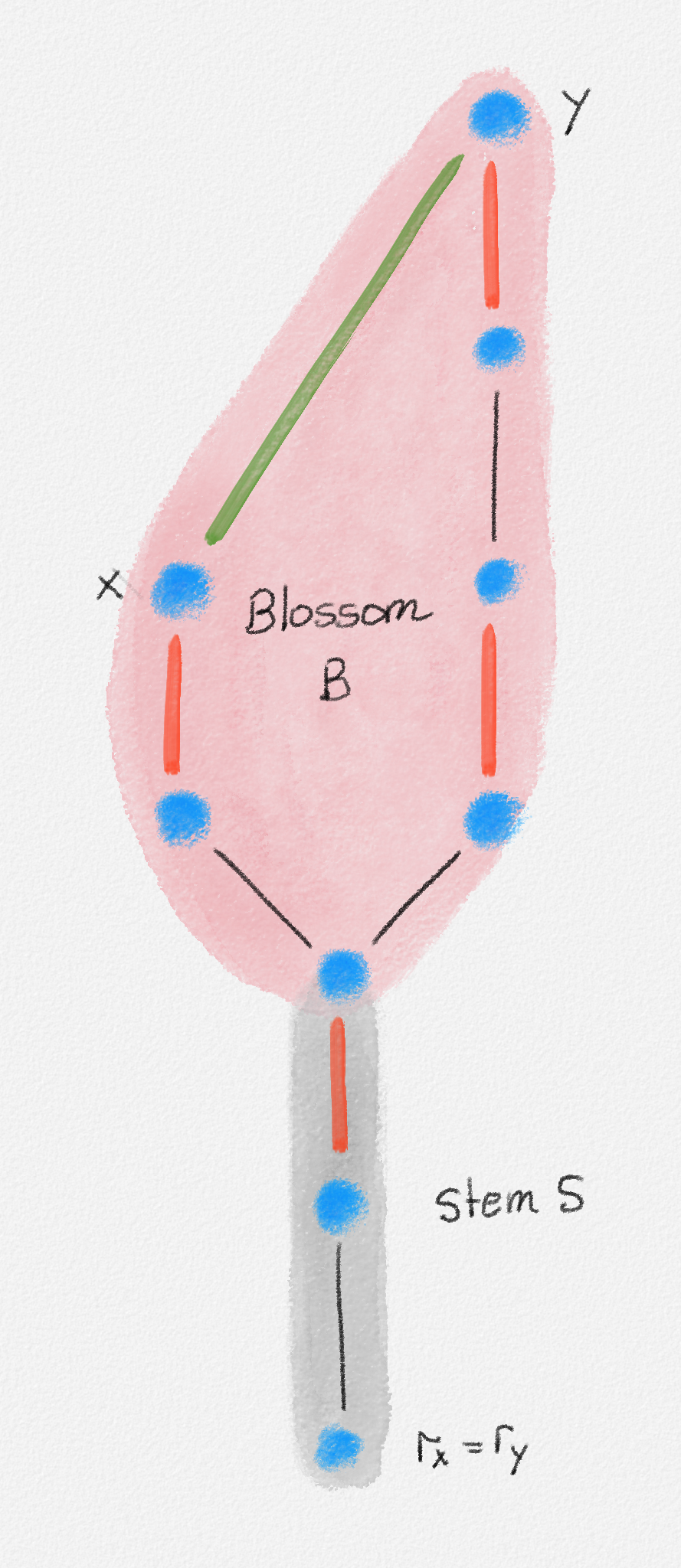

If we consider an edge \((u, v) \in T\), then let \(T_u\) and \(T_v\) be the two subtrees of \(T\) obtained by removing the edge \((u, v)\). See Figure 2.11.

Figure 2.11: The two subtrees \(T_u\) and \(T_v\) of \(T\) obtained by removing the edge \((u, v)\).

If \(s \in T_u\), then \((u, v)\) belongs to the path from \(s\) to \(v\) but not to the path from \(s\) to \(u\). If \(s \in T_v\), then \((u, v)\) belongs to the path from \(s\) to \(u\) but not to the path from \(s\) to \(v\). In both cases, \(\hat y_{u,v}^u + \hat y_{u,v}^v = 1 = \hat x_{u,v}\). If \((u, v) \notin T\), then \(\hat y_{u,v}^u + \hat y_{u,v}^v = 0 = \hat x_{u,v}\).

Since no edge in \(T\) belongs to the path from \(s\) to \(s\), we have \(\sum_{(s, v) \in E} \hat y_{s,v}^s = 0\). For \(v \ne s\), let \(u_1, \ldots, u_d\) be the neighbours of \(v\) in \(T\). Removing the edges \((u_1,v), \ldots, (u_d,v)\) breaks \(T\) into \(d + 1\) connected components. One contains only \(v\); the others are subtrees \(T_{u_1}, \ldots, T_{u_d}\) containing the vertices \(u_1, \ldots, u_d\), respectively. There exists exactly one such tree \(T_{u_i}\) that contains \(s\). In this case, the edge \((u_i,v)\) belongs to the path from \(s\) to \(v\) and the other edges \((u_j,v)\) with \(j \ne i\) do not. Thus, \(\sum_{j=1}^d \hat y_{u_j,v}^v = \sum_{(u,v) \in E} \hat y_{u,v}^v = 1\). See Figure 2.12.

Figure 2.12: Exactly one of the edges incident to a vertex \(v \ne s\) belongs to the path from \(s\) to \(v\).

Finally, consider any two edges \((u,v), (v,w) \in E\) sharing an endpoint and any vertex \(z \in V\). If \((u,v)\) does not belong to the path from \(s\) to \(v\), then \(\hat y_{u,v}^v = 0\). If \((v,w)\) does not belong to the path from \(s\) to \(z\), then \(\hat y_{v,w}^z = 0\). In either case, \(\hat y_{u,v}^v + \hat y_{v,w}^z - 1 \le 0\). Thus, \(\hat y_{u,v}^z \ge 0 \ge \hat y_{u,v}^v + \hat y_{v,w}^z - 1\).

If \((u,v)\) belongs to the path from \(s\) to \(v\), and \((u,v)\) and \((v,w)\) belong to the path from \(s\) to \(z\), then \(\hat y_{u,v}^v = \hat y_{v,w}^z = 1\) and \(\hat y_{u,v}^z = 1 = \hat y_{u,v}^v + \hat y_{v,w}^z - 1\).

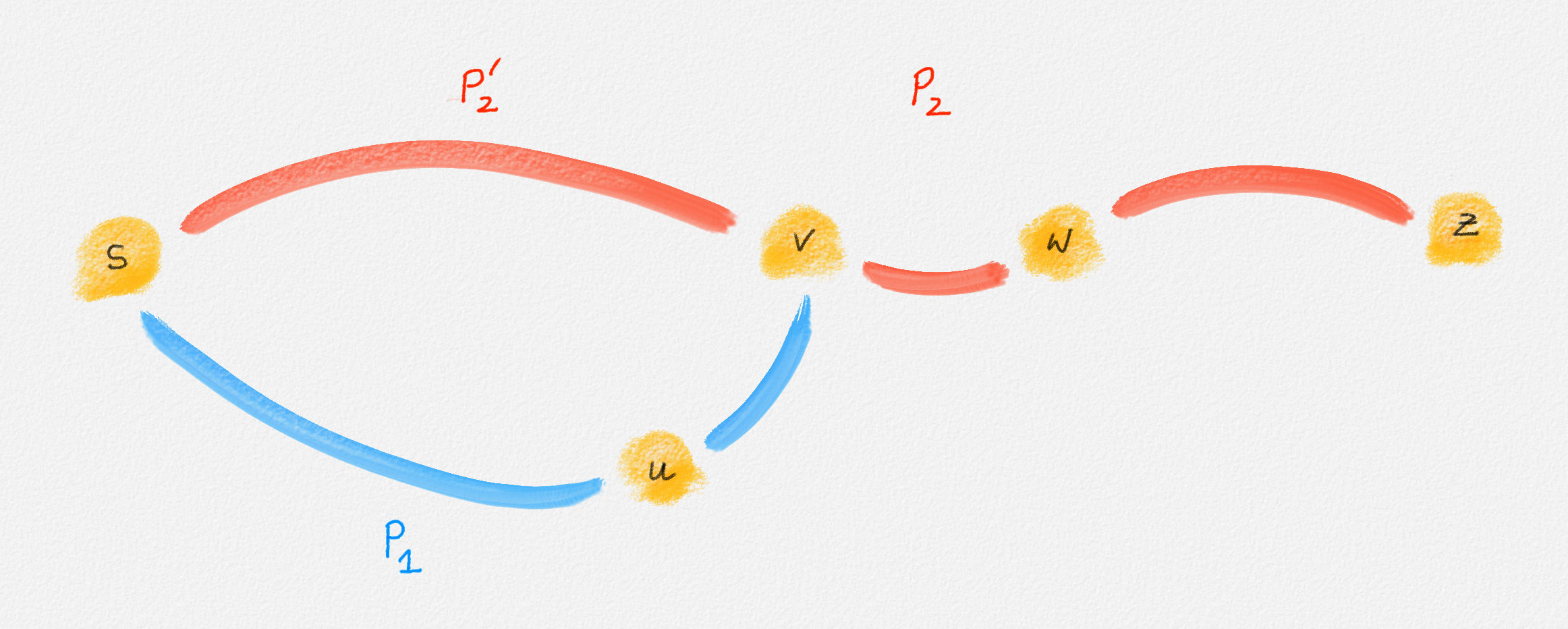

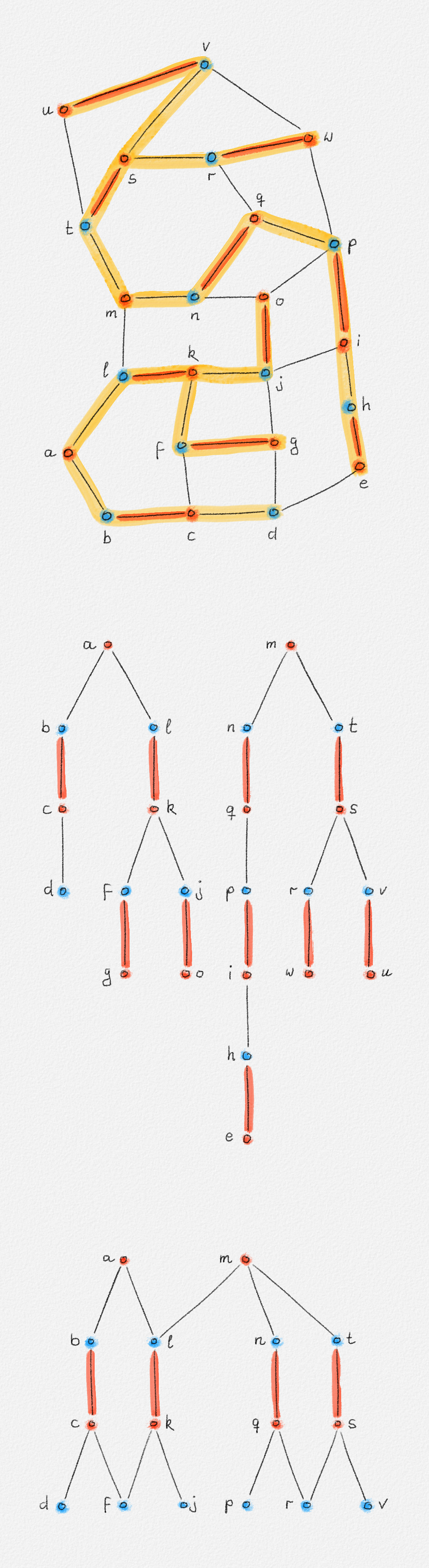

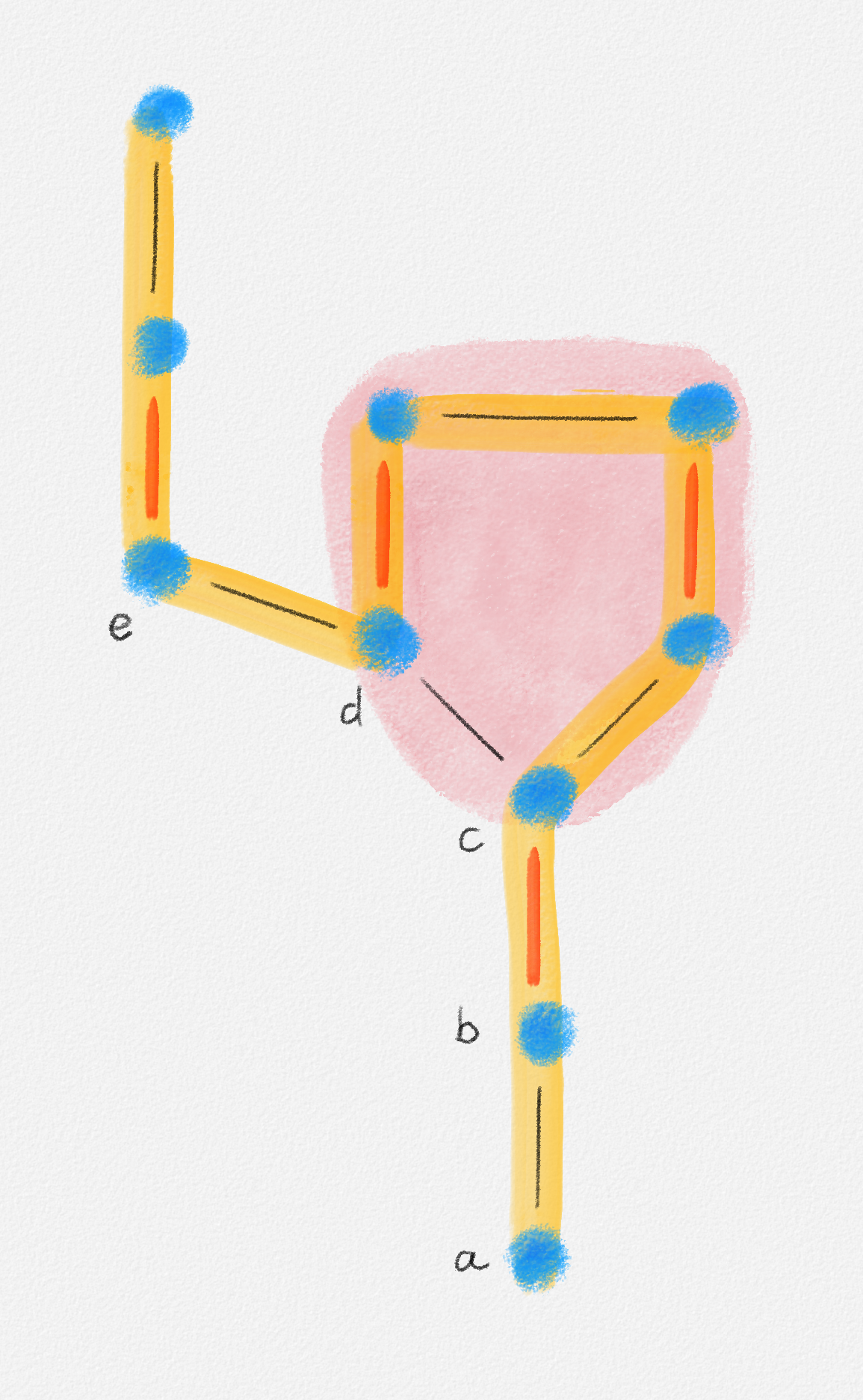

If \((u,v)\) belongs to the path from \(s\) to \(v\), \((v,w)\) belongs to the path from \(s\) to \(z\), and \((u,v)\) does not belong to the path from \(s\) to \(z\), then let \(P_1\) be the path from \(s\) to \(v\) in \(T\), and let \(P_2\) be the path from \(s\) to \(z\) in \(T\). Since the edge \((v,w)\) belongs to \(P_2\), so does the vertex \(v\). The subpath \(P_2'\) of \(P_2\) from \(s\) to \(v\) does not contain \((u,v)\) because \(P_2\) does not contain \((u,v)\). Thus, \(T\) contains two paths from \(s\) to \(v\), a contradiction because \(T\) is a tree. Therefore, this is not possible. In other words, \(\hat y_{u,v}^v = 1\) and \(\hat y_{v,w}^z = 1\) implies that \(\hat y_{u,v}^z = 1 = \hat y_{u,v}^v + \hat y_{v,w}^z - 1\). See Figure 2.13.

Figure 2.13: If \((u, v)\) belongs to the path from \(s\) to \(v\) and \((v, w)\) belongs to the path from \(s\) to \(z\) but \((u, v)\) does not, then \(T\) cannot be a tree.

This shows that the solution \(\bigl(\hat x, \hat y\bigr)\) corresponding to \(T\) is a feasible solution of (2.11). ▨

Since every feasible solution \(\bigl(\hat x, \hat y\bigr)\) of (2.11) defines a spanning tree of \(G\) and vice versa, we have:

Corollary 2.5: For any undirected edge-weighted graph \((G,w)\), \(\bigl(\hat x, \hat y\bigr)\) is an optimal solution of (2.11) if and only if the subgraph \(T = (V, E')\) of \(G\) with \(E' = \bigl\{ e \in E \mid \hat x_e = 1 \bigr\}\) is an MST of \((G,w)\).

The ILP (2.11) has only a polynomial number of constraints, while (2.9) and (2.10) have an exponential number of constraints. As we discuss next, this does not make (2.11) any easier to solve. The key to an efficient solution of the minimum spanning tree problem is to express it as a linear program, without requiring that the variables have integer values. It turns out that this is much easier to do for a slightly modified version of (2.9) than for any of the three ILPs (2.9), (2.10), and (2.11).

2.4. The Computational Complexity of LP and ILP

Now that we know what linear programs and integer linear programs are, and we got a glimpse of how to model optimization problems as LPs or ILPs, the next question is how we find optimal solutions for LPs and ILPs.

Linear Programming

In Chapter 3, we discuss the Simplex Algorithm as a classical algorithm for solving LPs. The Simplex Algorithm is the most popular algorithm for solving linear programs in practice, and it is also among the fastest algorithms in practice to solve many LPs. For some pathological inputs, however, the Simplex Algorithm may take exponential time and thus does not find a solution efficiently. Remarkably, any such pathological input has an almost identical input "nearby" that takes the Simplex Algorithm polynomial time to solve (Spielman and Teng, 2004). Thus, we have to try really, really hard if we want to make the Simplex Algorithm take exponential time.

Still, in theory, the Simplex Algorithm is not a polynomial-time algorithm. The Ellipsoid Algorithm and Karmarkar's Algorithm, not discussed here, can solve every linear program in polynomial time. More importantly, the Ellipsoid Algorithm can do that even for LPs with an exponential number of constraints, provided these constraints are represented appropriately: The Ellipsoid Algorithm relies on a "separation oracle" to drive its search for an optimal solution. Even if the LP technically has an exponential number of constraints, it may be possible to implement a separation oracle for the LP that runs in polynomial time and space. If that's the case, then the Ellipsoid Algorithm solves the LP in polynomial time. In practice, the Ellipsoid Algorithm suffers from numerical instability and is much slower than both the Simplex Algorithm and Karmarkar's Algorithm.

Even though we do not discuss the Ellipsoid Algorithm or Karmarkar's algorithm in these notes, the main take-away from this short subsection is that

Theorem 2.6: Any linear program can be solved in polynomial time in its size.

Integer Linear Programming

So we can solve linear programs in polynomial time. What about integer linear programs? In the remainder of this section, we prove that solving integer linear programs is NP-hard, even if they have only a polynomial number of constraints.

Recall the basic strategy for proving that a problem \(\Pi\) is NP-hard. It suffices to provide a polynomial-time reduction from an NP-hard problem \(\Pi'\) to \(\Pi\). Specifically, we need to provide a polynomial-time algorithm \(\mathcal{A}\) that takes any instance \(I'\) of \(\Pi'\) and computes from it an instance \(I\) of \(\Pi\) such that \(I\) is a yes-instance of \(\Pi\) if and only if \(I'\) is a yes-instance of \(\Pi'\). If you have not seen any NP-hardness proofs yet or you need a refresher on how they work, the appendix provides a brief introduction to the topic.

Here, we choose \(\Pi\) to be ILP and \(\Pi'\) to be the satisfiability problem (SAT). A SAT instance is a Boolean formula in CNF over some set of variables \(x_1, \ldots, x_n\):

\[\begin{aligned} F &= C_1 \wedge \cdots \wedge C_m\\ C_i &= \lambda_{i1} \vee \cdots \vee \lambda_{ik_i} && \forall 1 \le i \le m\\ \lambda_{ij} &\in \{ x_1, \ldots, x_n, \bar x_1, \ldots, \bar x_n \} && \forall 1 \le i \le m, 1 \le j \le k_i. \end{aligned}\]

\(\bar x_i\) denotes the negation of \(x_i\).

We translate \(F\) into an ILP over variables \(x_1, \ldots, x_n \in \{0,1\}\). Each literal \(\lambda_{ij}\) is translated into a linear function \(\phi_{ij}(x_1, \ldots, x_n)\) defined as

\[\phi_{ij}(x_1, \ldots, x_n) = \begin{cases} x_k & \text{if } \lambda_{ij} = x_k\\ 1 - x_k & \text{if } \lambda_{ij} = \bar x_k \end{cases}.\]

Each clause \(C_i\) is translated into the constraint

\[\Phi_i(x_1, \ldots, x_n) \ge 1,\]

where

\[\Phi_i(x_1, \ldots, x_n) = \sum_{j=1}^{k_i} \phi_{ij}(x_1, \ldots, x_n).\]

It turns out that even deciding whether this ILP has a feasible solution is NP-hard, so we can choose any objective function, such as \(f(x_1, \ldots, x_n) = 0\), to complete the definition of the ILP.

To prove that deciding whether this ILP has a feasible solution is NP-hard, we prove that \(F\) is satisfiable if and only if the ILP has a feasible solution:

First assume that \(F\) is satisfiable. Then there exists an assignment \(\hat x = \bigl(\hat x_1, \ldots, \hat x_n\bigr)\) of truth values to the variables \(x_1, \ldots, x_n\) in \(F\) such that every clause \(C_i\) contains a true literal \(\lambda_{ij}\). For this literal, \(\phi_{ij}\bigl(\hat x_1, \ldots, \hat x_n\bigr) = 1\). Thus, since \(\phi_{ij'}\bigl(\hat x_1, \ldots, \hat x_n\bigr) \ge 0\) for all \(1 \le j' \le k_i\), \(\Phi_i\bigl(\hat x_1, \ldots, \hat x_n\bigr) = \sum_{j'=1}^{k_i} \phi_{ij'}\bigl(\hat x_1, \ldots, \hat x_n\bigr) \ge 1\), that is, \(\hat x\) satisfies the constraint corresponding to \(C_i\) in the ILP. Since \(\hat x\) satisfies every clause of \(F\), this shows that it satisfies every constraint of the ILP, so \(\hat x\) is a feasible solution of the ILP.

Now assume that the ILP is feasible. Then there exists a feasible solution \(\hat x = \bigl(\hat x_1, \ldots, \hat x_n\bigr)\) of the ILP. Since \(\hat x_i \in \{0, 1\}\) for all \(1 \le i \le n\), we can interpret this solution as an assignment of truth values to the variables \(x_1, \ldots, x_n\) in \(F\). Since \(\hat x\) is a feasible solution of the ILP, we have \(\Phi_i\bigl(\hat x_1, \ldots, \hat x_n\bigr) = \sum_{j'=1}^{k_i} \phi_{ij'}\bigl(\hat x_1, \ldots, \hat x_n\bigr) \ge 1\) for every clause \(C_i\). Thus, \(\phi_{ij}\bigl(\hat x_1, \ldots, \hat x_n\bigr) = 1\) for at least one literal \(\lambda_{ij} \in C_i\), that is, \(\lambda_{ij}\) is made true by the solution \(\hat x = \bigl(\hat x_1, \ldots, \hat x_n\bigr)\) and, thus, \(C_i\) is satisfied by \(\hat x\). Since \(\hat x\) satisfies every constraint in the ILP, it also satisfies every clause \(C_i\) in \(F\), that is, it is a satisfying truth assignment of \(F\).

We have shown that we can, in polynomial time, convert any Boolean formula \(F\) into an ILP that is feasible if and only if \(F\) is satisfiable. This conversion is a polynomial-time reduction from SAT to ILP. Since SAT is NP-hard, this proves that

Theorem 2.7: Integer linear programming is NP-hard.

2.5. LP Relaxations

Given that we have polynomial-time algorithms for solving LPs but solving ILPs is NP-hard, an important question is whether we can use the machinery for solving LPs to obtain suboptimal but good solutions for ILPs. We will explore this question in the third part of this book. Here, we introduce an important tool for translating ILPs into related LPs: LP relaxation.

For every ILP, one can define a corresponding LP by simply dropping the requirement that the solution value of each variable be an integer: in the LP solution, variables are allowed to take on any real values as long as they satisfy the constraints in the LP.

The LP relaxation of an ILP is the same as the ILP, but the requirement that the variable values in a feasible solution be integers is dropped.

As an example, here is the LP relaxation of (2.9). It is identical to the ILP, except that the constraint \(x_e \in \{0, 1\}\) has been replaced with the constraint \(0 \le x_e \le 1\): \(x_e\) is no longer required to be integral:

\[\begin{gathered} \text{Minimize } \sum_{e \in E} w_e x_e\\ \begin{aligned} \text{s.t. } \sum_{e \in E} x_e &= n - 1\\ \sum_{e \in C} x_e &\le |C| - 1 && \forall \text{cycle $C$ in $G$}\\ 0 &\le x_e \le 1 && \forall e \in E. \end{aligned} \end{gathered}\tag{2.12}\]

In general, the LP relaxation of an ILP may have a much better optimal solution than the ILP itself, but it can never have a worse optimal solution:

Lemma 2.8: If \(\hat x\) is an optimal solution to a minimization ILP \(P\) and \(\tilde x\) is an optimal solution to its LP relaxation \(P'\), then \(f\bigl(\tilde x\bigr) \le f\bigl(\hat x\bigr)\), where \(f\) is the objective function shared by \(P\) and \(P'\).

Proof: Since \(\hat x\) is a feasible solution of \(P\), it is also a feasible solution of \(P'\). Since \(\tilde x\) is an optimal solution of \(P'\), this implies that \(f\bigl(\tilde x\bigr) \le f\bigl(\hat x\bigr)\). ▨

The ratio \(f\bigl(\hat x\bigr) \mathop{/} f\bigl(\tilde x\bigr)\) between the optimal objective function values of the ILP and its relaxation is called the integrality gap of the ILP.

In general, this gap can be arbitrarily large. However, for problems where the integrality gap is bounded, the LP relaxation can often be used to efficiently obtain an approximation of the optimal solution of the ILP, a fact that will be explored further when we discuss Chapter 13. Another useful property exploited by some approximation algorithms is half-integrality of the optimal solution of some LPs: In the optimal solution, every variable takes on a value that is a multiple of \(\frac{1}{2}\). Again, this will be explored further when discussing approximation algorithms.

2.5.1. ILPs With Integrality Gap 1

In some instances, the optimal solution of the LP relaxation of an ILP is integral, that is, every variable has an integer value. By Lemma 2.8, this implies that the optimal solution of the LP relaxation is also an optimal solution of the ILP.

In the remainder of this section, we explore whether the MST problem is such a problem. The answer depends on the chosen ILP formulation. For each of (2.9), (2.10), and (2.11), there exists an input that has a fractional solution with a lower objective function value than the best possible integral solution, as shown in the following three examples.

The "No Cycles" Formulation

As already observed, the LP relaxation of (2.9) is

\[\begin{gathered} \text{Minimize } \sum_{e \in E} w_e x_e\\ \begin{aligned} \text{s.t. } \sum_{e \in E} x_e &= n - 1\\ \sum_{e \in C} x_e &\le |C| - 1 && \forall \text{cycle $C$ in $G$}\\ 0 &\le x_e \le 1 && \forall e \in E. \end{aligned} \end{gathered}\tag{2.13}\]

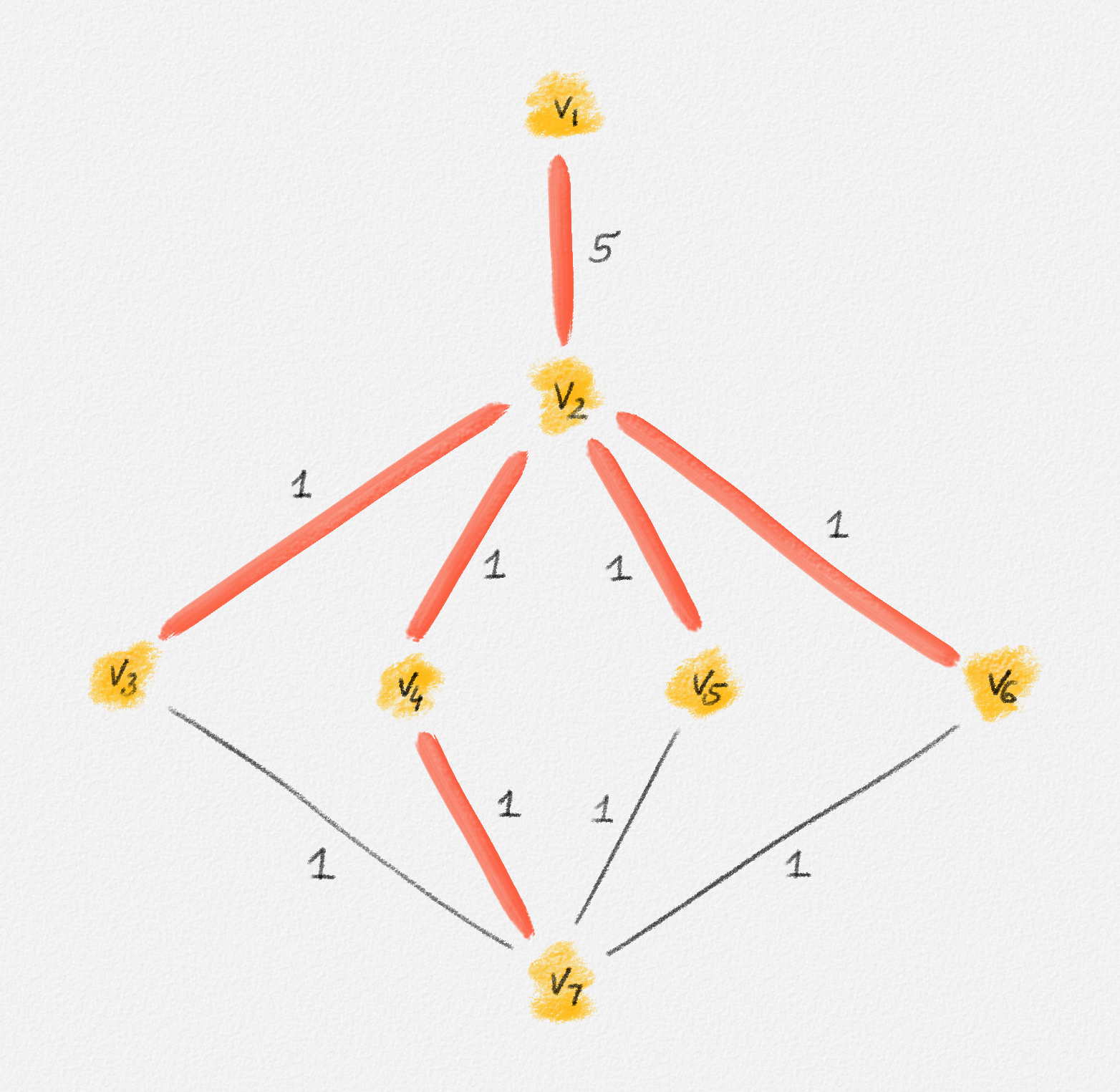

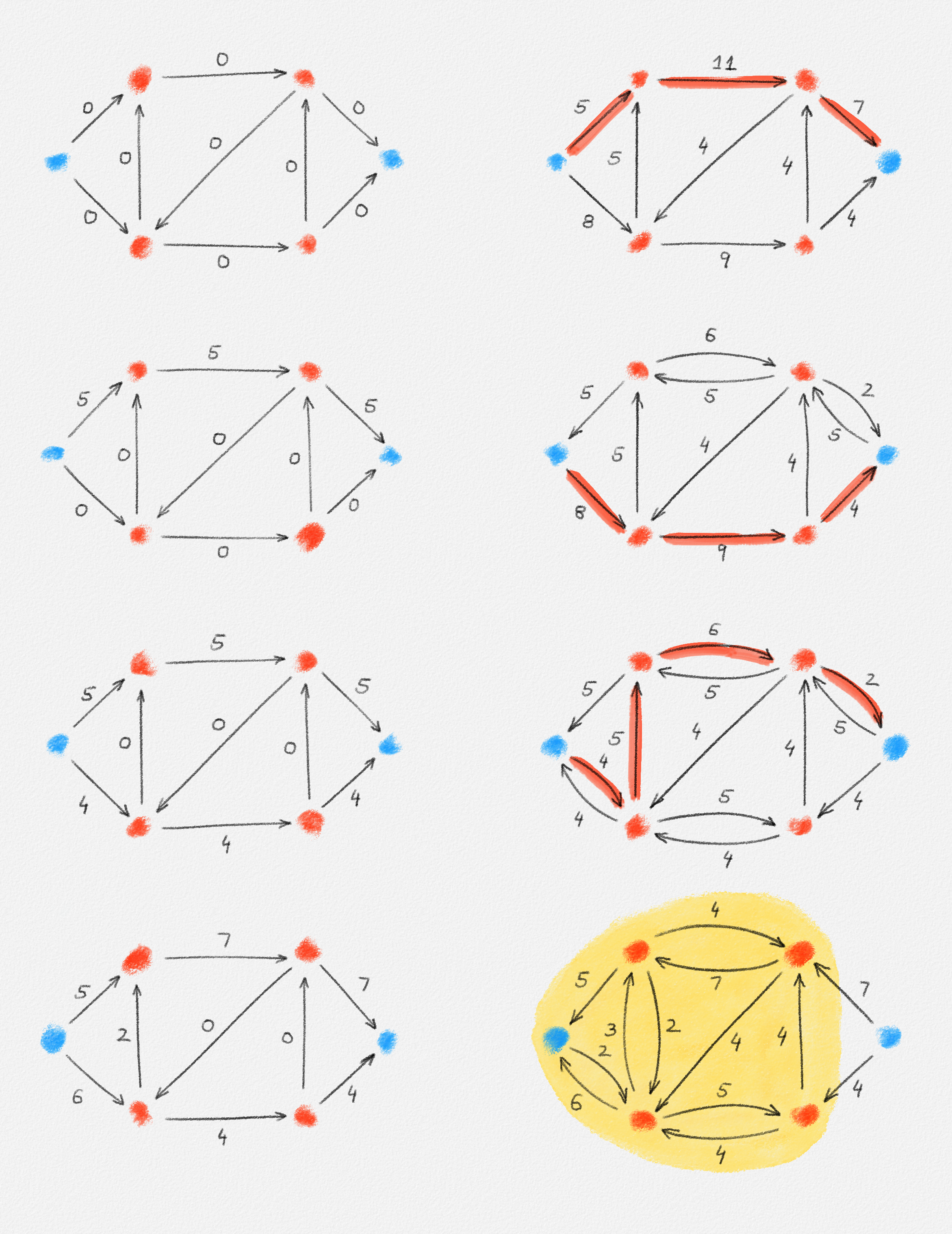

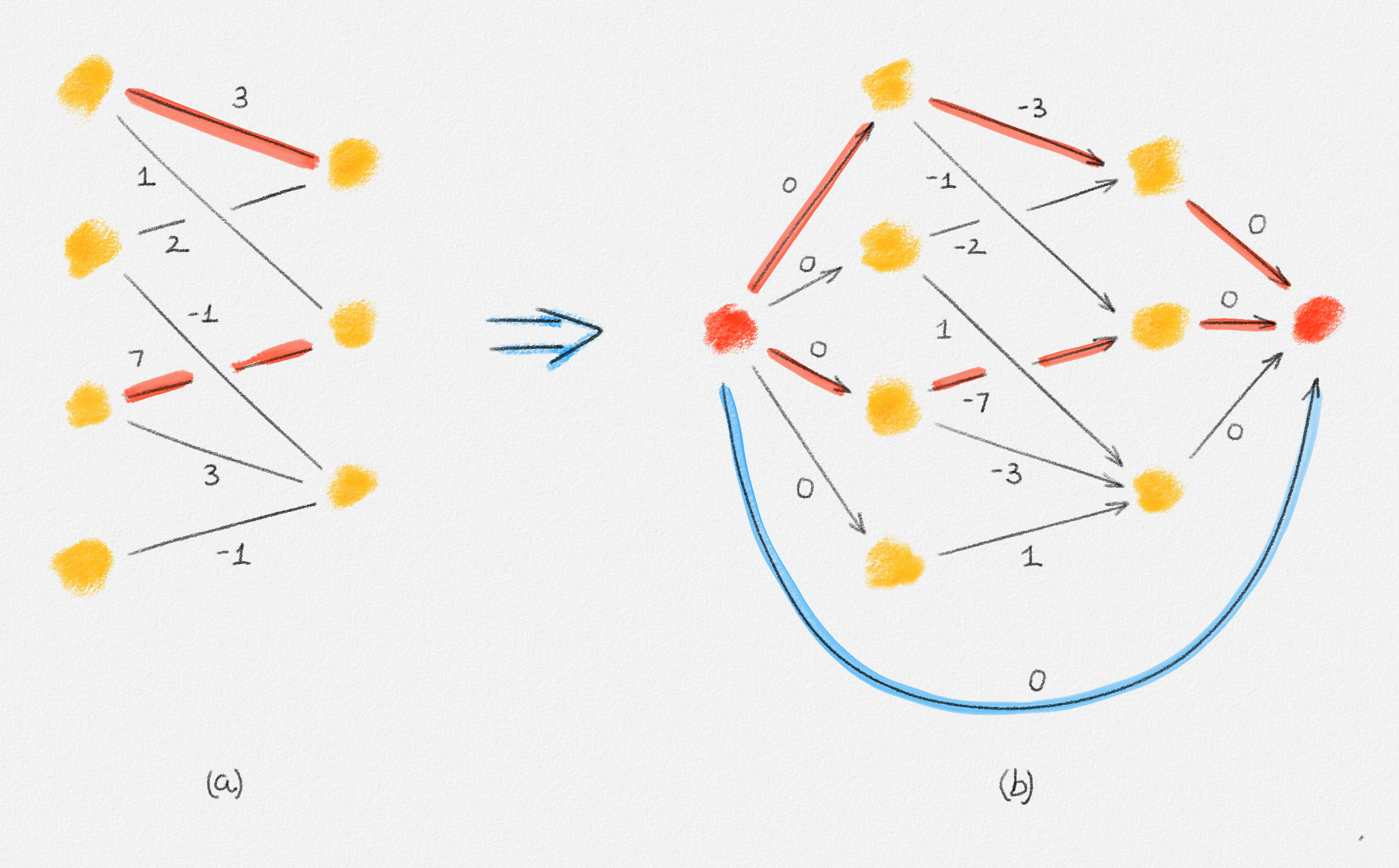

Any MST of the graph in Figure 2.14 has weight \(10\).

Figure 2.14: The edge labels are edge weights. The red tree has weight \(10\) and is an MST.

On the other hand, the following fractional solution with objective function value \(6\) satisfies all constraints of (2.13):1

\[\begin{aligned} x_{12} &= 0\\ x_{23} = x_{24} = x_{25} = x_{26} &= \frac{1}{2}\\ x_{37} = x_{47} = x_{57} = x_{67} &= 1 \end{aligned}\]

The "Connectivity" Formulation

The LP relaxation of (2.10) is

\[\begin{gathered} \text{Minimize } \sum_{e \in E} w_e x_e\\ \begin{aligned} \text{s.t. } \sum_{e \in E} x_e &= n - 1\\ \sum_{e \text{ crosses } S} x_e &\ge 1 && \forall \text{cut $S$ in $G$}\\ 0 &\le x_e \le 1 && \forall e \in E. \end{aligned} \end{gathered}\tag{2.14}\]

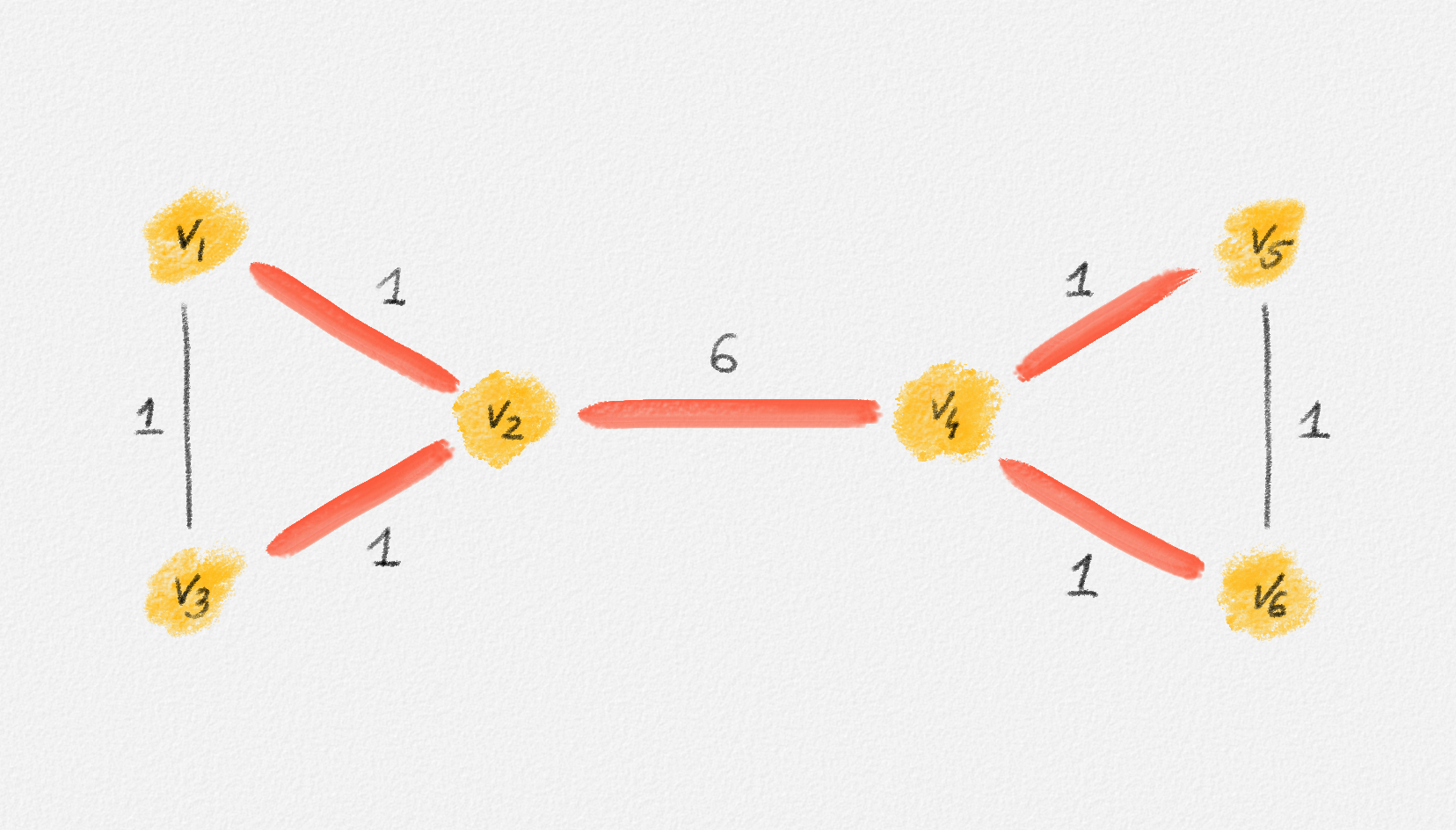

Any MST of the graph in Figure 2.15 has weight \(2\).

Figure 2.15: The edge labels are edge weights. The red tree has weight \(2\) and is an MST.

On the other hand, the following fractional solution with objective function value \(\frac{3}{2}\) satisfies all constraints of (2.12):

\[\begin{aligned} x_{12} = x_{13} = x_{24} = x_{34} &= \frac{1}{2}\\ x_{25} = x_{45} &= 1 \end{aligned}\]

The Polynomial-Size Formulation

The LP relaxation of (2.11) is

\[\begin{gathered} \text{Minimize } \sum_{e \in E} w_e x_e\\ \begin{aligned} \text{s.t. } \phantom{y_{(u,v)}^u + y_{(u,v)}^v} \llap{\displaystyle\sum_{e \in E} x_e} &= n - 1\\ y_{u,v}^u + y_{u,v}^v &= x_{u,v} && \forall (u,v) \in E\\ \sum_{(s,v) \in E} y_{s,v}^s &= 0\\ \sum_{(u,v) \in E} y_{u,v}^v &= 1 && \forall v \ne s\\ y_{u,v}^z &\ge y_{u,v}^v + y_{v,w}^z - 1\quad && \forall z \in V, (u,v) \in E, (v,w) \in E\\ 0 \le x_e &\le 1 && \forall e \in E\\ 0 \le y_e^v &\le 1 && \forall v \in V, e \in E. \end{aligned} \end{gathered}\tag{2.15}\]

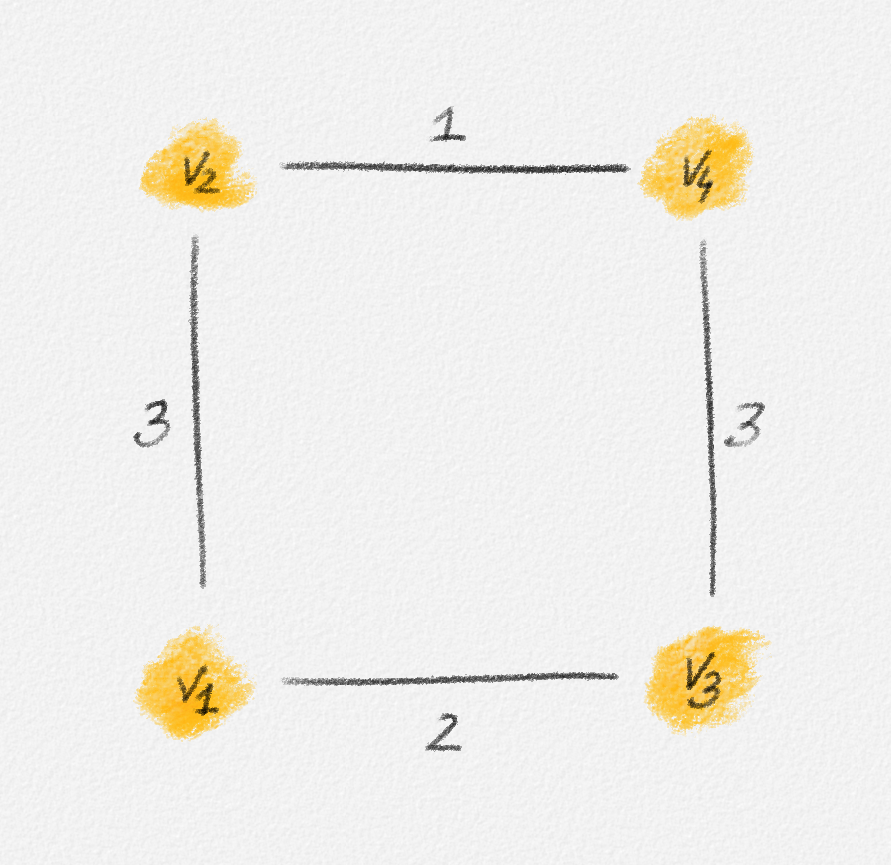

Any MST of the graph in Figure 2.16 has weight \(10\).

Figure 2.16: The edge labels are edge weights. The red tree has weight \(10\) and is an MST.

No matter whether \(s = v_1\) or \(s = v_2\) (any other choice is symmetric to one of these two choices), there exists a feasible solution of (2.15) whose objective function value is only \(5\):

\[\begin{aligned} \boldsymbol{s = v_1:} && x_{13} = x_{24} &= 0 & x_{12} &= x_{23} = x_{45} = x_{46} = x_{56} = 1\\ && y_{13}^k = y_{24}^k &= 0 \quad \forall 1 \le k \le 6 & y_{45}^k &= y_{46}^k = y_{56}^k = \frac{1}{2} \quad \forall 1 \le k \le 6\\ && y_{12}^k &= 0 \quad \forall k \notin \{2, 3\} & y_{12}^k &= 1 \quad \forall k \in \{2, 3\}\\ && y_{23}^k &= 0 \quad \forall k \ne 3 & y_{23}^3 &= 1 \end{aligned}\]

\[\begin{aligned} \boldsymbol{s = v_2:} && x_{13} = x_{24} &= 0 & x_{12} &= x_{23} = x_{45} = x_{46} = x_{56} = 1\\ && y_{13}^k = y_{24}^k &= 0\quad \forall 1 \le k \le 6 & y_{45}^k &= y_{46}^k = y_{56}^k = \frac{1}{2} \quad \forall 1 \le k \le 6\\ && y_{12}^k &= 0 \quad \forall k \ne 1 & y_{12}^1 &= 1\\ && y_{23}^k &= 0 \quad \forall k \ne 3 & y_{23}^3 &= 1 \end{aligned}\]

As these three examples show, integrality matters for all three MST ILPs (2.9), (2.10), and (2.11). Next, we discuss an extension of (2.9) whose LP relaxation has an optimal integral solution.

For the sake of simplicity, we write \(x_{ij}\) instead of \(x_{v_i,v_j}\) in this example and the next two.

2.5.2. An ILP Formulation of the MST Problem With Integrality Gap 1

The following extension of (2.9) finally is an ILP whose LP relaxation has an optimal integral solution:

\[\begin{gathered} \text{Minimize } \sum_{e \in E} w_e x_e\\ \begin{aligned} \text{s.t. } \phantom{\displaystyle\sum_{\substack{(u,v) \in E\\u,v \in S}} x_{u,v}} \llap{\displaystyle\sum_{e \in E} x_e} &\ge n - 1\\ \sum_{\substack{(u,v) \in E\\u,v \in S}} x_{u,v} &\le |S| - 1 && \forall \emptyset \subset S \subseteq V\\ x_e &\in \{0, 1\} && \forall e \in E. \end{aligned} \end{gathered}\tag{2.16}\]

This is an extension of (2.9) because it does not only require that at most \(k - 1\) edges of every \(k\)-vertex cycle in \(G\) are chosen as part of the spanning tree but that at most \(k - 1\) edges between any subset of \(k\) vertices are chosen. Given that not including any cycle in \(T\) is what makes \(T\) a tree, it is surprising that this seemingly unimportant strengthening of the ILP ensures that the LP relaxation has an integral optimal solution. Let's first establish the correctness of the ILP.

Lemma 2.9: For any undirected edge-weighted graph \((G, w)\), \(\hat x\) is a feasible solution of (2.14) if and only if the subgraph \(T = (V, E')\) of \(G\) with \(E' = \bigl\{e \in E \mid \hat x_e = 1\bigr\}\) is a spanning tree of \(G\).

Proof: First note that the constraints \(\sum_{(u,v) \in E; u,v \in S} x_{u,v} \le |S| - 1\) ensure exactly that \(T\) is a forest, that is, \(T\) contains no cycles: If \(T\) contains a cycle \(C\) with vertex set \(S_C \ne \emptyset\), then \(\sum_{(u,v) \in E; u,v \in S_C} \hat x_{u,v} \ge |S_C|\), violating the constraint \(\sum_{(u,v) \in E; u,v \in S_C} x_{u,v} \le |S_C| - 1\).

Conversely, if \(T\) contains no cycle, then the subgraph

\[T[S] = (S, \{ (u,v) \in E' \mid u,v \in S \})\]

is a forest for every subset \(\emptyset \subset S \subseteq V\). Thus,

\[|\{ (u,v) \in E' \mid u,v \in S \}| = \sum_{(u,v) \in E; u,v \in S} \hat x_{u,v} \le |S| - 1,\]

that is, \(\hat x\) satisfies the constraint

\[\sum_{(u,v) \in E; u,v \in S} x_{u,v} \le |S| - 1\]

for every subset \(\emptyset \subset S \subseteq V\).

Finally, observe that the constraints

\[\sum_{e \in E} x_e \ge n - 1\]

and

\[\sum_{(u,v) \in E; u,v \in S} x_{(u,v)} \le |S| - 1\]

for \(S = V\) imply that

\[\sum_{e \in E} x_e = n - 1,\]

that is, \(|E'| = n - 1\).

Thus, \(\hat x\) satisfies the constraints in (2.16) if and only if \(T\) is a forest with \(n - 1\) edges, which is a tree. ▨

Once again, this one-to-one correspondence between feasible solutions of the ILP and spanning trees implies that

Corollary 2.10: For any undirected edge-weighted graph \((G, w)\), \(\hat x\) is an optimal solution of (2.16) if and only if the subgraph \(T = (V, E')\) of \(G\) with \(E' = \bigl\{e \in E \mid \hat x_e = 1\bigr\}\) is an MST of \((G, w)\).

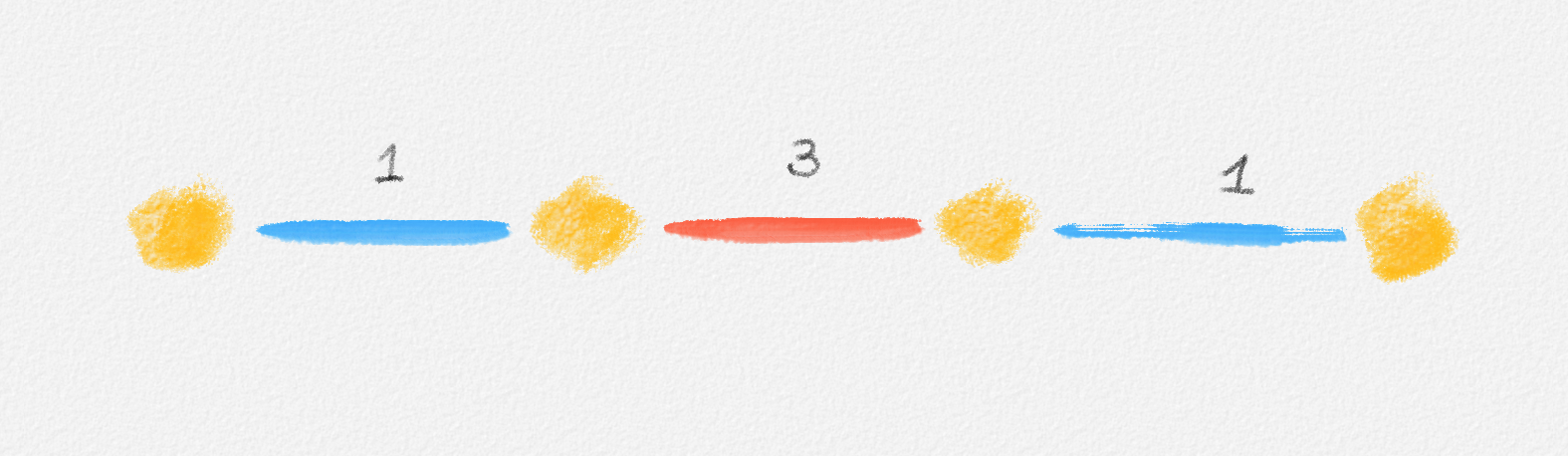

As the following example shows, if there are edges of equal weight in \((G, w)\), then the LP relaxation of (2.16) may once again have an optimal fractional solution:

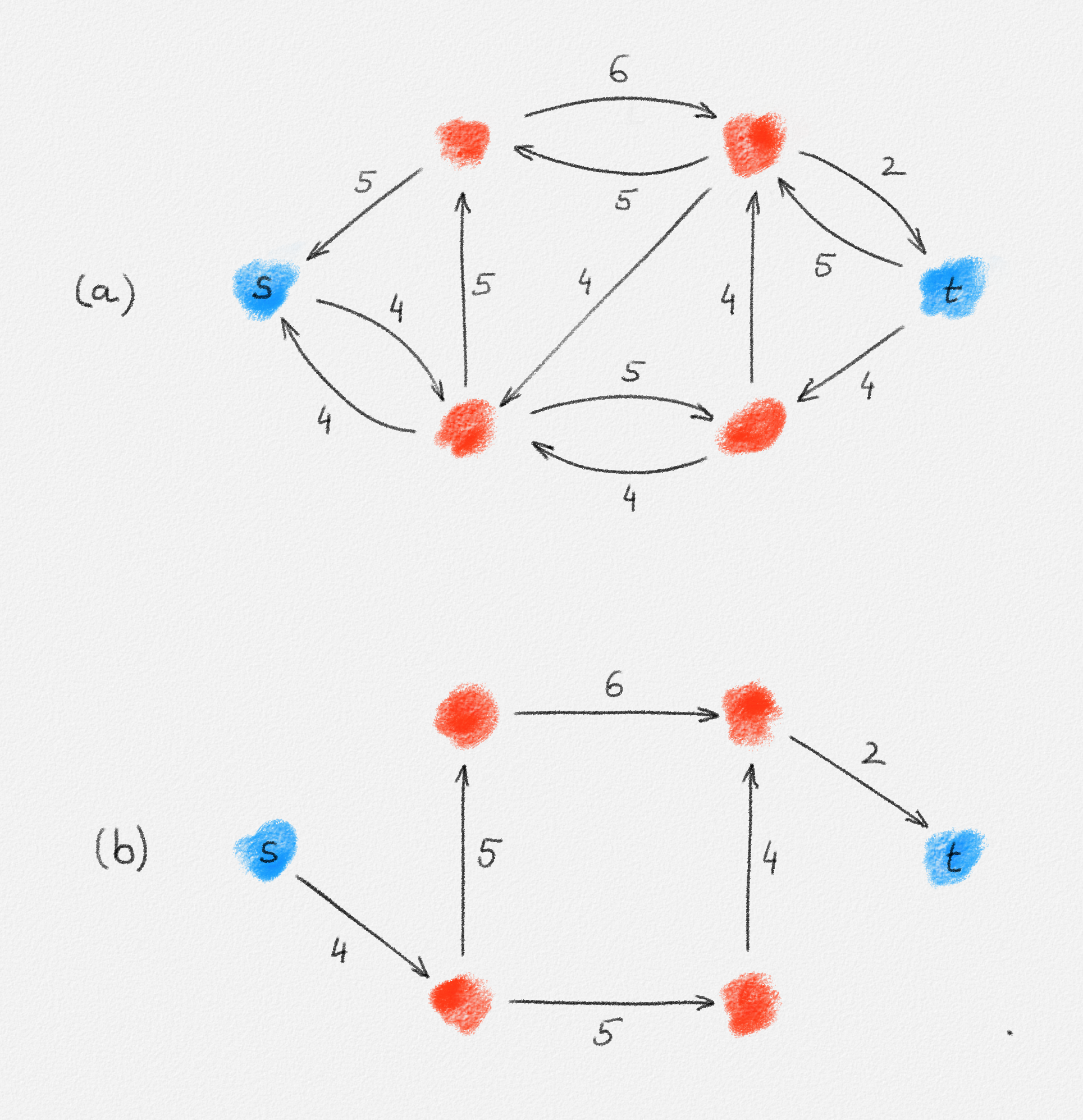

Consider the graph in Figure 2.17. The corresponding LP relaxation of (2.16) is

\[\begin{gathered} \text{Minimize } 3x_{12} + 2x_{13} + x_{24} + 3x_{34}\\ \begin{aligned} \text{s.t. } x_{12} + x_{13} + x_{24} + x_{34} &\ge 3\\ x_{12} + x_{13} + x_{24} + x_{34} &\le 3\\ x_{12} + x_{13} &\le 2\\ x_{12} + x_{24} &\le 2\\ x_{13} + x_{34} &\le 2\\ x_{24} + x_{34} &\le 2\\ 0 \le x_e &\le 1 \quad \forall e \in E, \end{aligned} \end{gathered}\tag{2.17}\]

which has the optimal fractional solution

\[x_{12} = \alpha \qquad x_{34} = 1 - \alpha \qquad x_{13} = x_{24} = 1,\]

for any \(0 \le \alpha \le 1\).

Figure 2.17: Since the edges \((v_1, v_2)\) and \((v_3, v_4)\) have the same weight, any fractional solution of (2.17) with \(x_{12} = \alpha\), \(x_{34} = 1 - \alpha\), and \(x_{13} = x_{24} = 1\) is an optimal solution.

It is easily verified that, at least in this example, any optimal fractional solution has the same objective function value as an optimal integral solution, that is, the optimal solution of the ILP is also an optimal solution of its relaxation. As we will show in Section 4.4, this is always the case. (We postpone the proof because we do not have the tools to construct the proof yet.) Moreover, it can be shown that if we use the Simplex Algorithm to find a solution of the LP relaxation of (2.16), the computed solution is always integral. The proof uses two facts that we do not prove here:

-

Recall that the feasible region of an LP is a convex polytope in \(\mathbb{R}^n\). A geometric view of the Simplex Algorithm is that it moves between vertices of this polytope until it reaches a vertex that represents an optimal solution.

-

Every vertex of the polytope defined by the LP relaxation of (2.16) represents an integral solution.

How useful is it that the LP relaxation of (2.16) has an integral optimal solution, given that this LP has exponentially many constraints? Doesn't it still take exponential time to solve this LP even using an algorithm whose running time is polynomial in the number of constraints? As mentioned in Section 2.4, the Ellipsoid Algorithm can solve every LP in polynomial time for which we can provide a polynomial-time separation oracle. The constraints in the LP relaxation of (2.16) are derived from the given edge-weighted graph \((G,w)\), whose representation uses only polynomial space, and it turns out that an appropriate separation oracle for the MST problem can be implemented in polynomial time based on this graph representation, without explicitly inspecting all constraints. Thus, the LP relaxation of (2.16) can in fact be solved in polynomial time. Still, sticking to Kruskal's or Prim's algorithm seems to be the much easier and generally more efficient approach for computing MSTs. The purpose of the Section 2.3 and of this section was simply to illustrate linear programming using a more elaborate example and to introduce integer linear programming and LP relaxations.

2.6. Canonical Form and Standard Form

So far, we have focused on discussing how to model some optimization problems using LPs. More examples will follow in subsequent chapters: Linear programming will be used as a tool to express optimization problems throughout this book. Chapter 3 is concerned with discussing the most widely used algorithm for solving LPs, the Simplex Algorithm. This algorithm assumes that its input is in a particular form. This section introduces two standard representations of linear programs, the canonical form and the standard form; the latter, sometimes also called slack form, is the one the Simplex Algorithm works with.1 Canonical form is important as the basis for the theory of LP duality, which will be the basis for a number of algorithms discussed in these notes.

To make things perfectly confusing, texts that refer to standard form as slack form often also refer to canonical form as standard form. I follow the terminology of Papadimitriou and Steiglitz (1982) here because the interpretation of some variables in the standard form as "slack variables", which justifies the name "slack form", makes sense only immediately after converting an LP in canonical form into one in standard form.

2.6.1. Canonical Form

An LP over a set of variables \(x_1, \ldots, x_n\) is in canonical form if

It is a maximization LP,

Every variable \(x_i\) is required to be non-negative: The LP contains a non-negativity constraint

\[x_i \ge 0\]

for every variable \(x_i\).

All other constraints are upper bound constraints: They provide a constant upper bound on some linear combination of the variables. For example:

\[x_1 + 3x_2 - 2x_4 \le 20.\]

We can represent such an LP as an \(n\)-element row vector

\[c = (c_1, \ldots, c_n),\]

an \(m\)-element column vector

\[b = \begin{pmatrix} b_1\\ b_2\\ \vdots\\ b_m \end{pmatrix},\]

and an \(m \times n\) matrix

\[A = \begin{pmatrix} a_{1,1} & a_{1,2} & \cdots & a_{1,n}\\ a_{2,1} & a_{2,2} & \cdots & a_{2,n}\\ \vdots & \vdots & \ddots & \vdots\\ a_{m,1} & a_{m,2} & \cdots & a_{m,n} \end{pmatrix}.\]

\(A\), \(b\), and \(c\) represent the following LP in canonical form:

\[\begin{gathered} \text{Maximize } \sum_{j=1}^n c_jx_j\\ \begin{aligned} \text{s.t. }\sum_{j=1}^n a_{ij}x_j &\le b_i && \forall 1 \le i \le m && \text{(upper bound constraints)}\\ x_j &\ge 0 && \forall 1 \le j \le n && \text{(non-negativity constraints)} \end{aligned} \end{gathered}\]

If we define that \(x \le y\) for two \(m\)-element vectors if and only if \(x_i \le y_i\) for all \(1 \le i \le m\), and we write \(0\) to denote the \(0\)-vector \((0, \ldots, 0)^T\),1 then this LP can be written more compactly as

\[\begin{gathered} \text{Maximize } cx\\ \begin{aligned} \text{s.t. } Ax &\le b\\ x &\ge 0, \end{aligned} \end{gathered}\tag{2.18}\]

where \(cx\) is the inner product of the two vectors \(c\) and \(x\) and \(Ax\) is the usual matrix-vector product. (Throughout the remainder of this chapter and throughout the next two chapters, we treat \(x\) as a column vector.)

Throughout most of these notes, we adopt the following definition of equivalence of two LPs:

Two LPs are equivalent if

- They have the same variables,

- They have the same set of feasible solutions, and

- Their objective functions assign the same value to each feasible solution.

In the following two lemmas, we use a more general notion of equivalence of LPs, which considers two LPs \(P\) and \(P'\) over variables \(x = (x_1, \ldots, x_n)^T\) and \(y = (y_1, \ldots, y_r)^T\) equivalent if there exist two linear functions \(\phi : \mathbb{R}^n \rightarrow \mathbb{R}^r\) and \(\psi : \mathbb{R}^r \rightarrow \mathbb{R}^n\) such that

- \(\hat x\) is a feasible solution of \(P\) if and only if \(\phi\bigl(\hat x\bigr)\) is a feasible solution of \(P'\),

- \(\hat y\) is a feasible solution of \(P'\) if and only if \(\psi\bigl(\hat y\bigr)\) is a feasible solution of \(P\),

- If \(\hat x\) is an optimal solution of \(P\), then \(\phi\bigl(\hat x\bigr)\) is an optimal solution of \(P'\), and

- If \(\hat y\) is an optimal solution of \(P'\), then \(\psi\bigl(\hat y\bigr)\) is an optimal solution of \(P\).

Less formally, this says that \(\phi\) and \(\psi\) are mappings between solutions of \(P\) and \(P'\) that preserve feasible solutions and optimal solutions.

Equivalence of LPs is useful because it allows us to obtain an optimal solution of an LP by solving any of its equivalent LPs. By the following lemma, every LP has an equivalent LP in canonical form:

Lemma 2.11: Every linear program can be transformed into an equivalent linear program in canonical form.

Proof: An LP \(P\) may not be in canonical form for four reasons:

- It may be a minimization LP.

- Some of its constraints may be equality constraints.

- Some of its inequality constraints may be lower bounds, as opposed to upper bounds.

- Some variables may not have non-negativity constraints.

We define a sequence of LPs \(P = P_0, P_1, P_2, P_3, P_4 = P'\) such that \(P'\) is in canonical form and for all \(1 \le i \le 4\), \(P_{i-1}\) and \(P_i\) are equivalent. Thus, \(P'\) is an LP in canonical form that is equivalent to \(P\).

From minimization LP to maximization LP: First we construct a maximization LP \(P_1\) that is equivalent to \(P_0 = P\). If \(P_0\) is itself a maximization LP, then \(P_1 = P_0\). Otherwise, the objective of \(P_0\) is to minimize \(cx\). This is the same as maximizing \(-cx\). Thus, \(P_1\) has the objective function \(-cx\) and asks us to maximize it. \(P_0\) and \(P_1\) have the same variables and the same constraints, so they have the same set of feasible solutions. As just observed, any feasible solution that minimizes \(cx\) also maximizes \(-cx\). Thus, \(P_0\) and \(P_1\) are equivalent.

From equalities to inequalities: The next LP \(P_2\) we construct from \(P_1\) is a maximization LP without equality constraints. Clearly,

\[\sum_{j=1}^n a_{ij}x_j = b_i\]

if and only if

\[\begin{aligned} \sum_{j=1}^n a_{ij}x_j &\ge b_i \text{ and}\\ \sum_{j=1}^n a_{ij}x_j &\le b_i. \end{aligned}\]

Thus, we construct \(P_2\) by replacing every equality constraint in \(P_1\) with these two corresponding inequality constraints. \(P_1\) and \(P_2\) have the same variables and the same objective function, and every inequality constraint in \(P_1\) is also a constraint in \(P_2\). As just observed, any solution \(\hat x = \bigl(\hat x_1, \ldots, \hat x_n\bigr)\) satisfies an equality constraint in \(P_1\) if and only if it satisfies the two corresponding inequality constraints in \(P_2\). Thus, \(P_1\) and \(P_2\) have the same set of feasible solutions and the same objective function. They are therefore equivalent.

From lower bounds to upper bounds: The next LP \(P_3\) we construct from \(P_2\) is a maximization LP with only upper bound constraints. This transformation is similar to the transformation from a minimization LP to a maximization LP: Every lower bound constraint

\[\sum_{j=1}^n a_{ij}x_j \ge b_i\]

in \(P_2\) is satisfied if and only if the upper bound constraint

\[\sum_{j=1}^n -a_{ij}x_j \le -b_i\]

is satisfied, so we replace it with this constraint in \(P_3\). Since \(P_2\) and \(P_3\) have the same variables and the same objective function, and it is easy to see that \(\hat x = \bigl(\hat x_1, \ldots, \hat x_n\bigr)\) is a feasible solution of \(P_2\) if and only if it is a feasible solution of \(P_3\), \(P_2\) and \(P_3\) are equivalent.

Introducing non-negativity constraints: \(P_3\) is in canonical form, except that it does not include non-negativity constraints for its variables. To obtain the final LP \(P_4 = P'\) in canonical form, we replace every variable in \(P_3\) with a pair of non-negative variables in \(P_4\).

\(P_4\) has the set of variables

\[\bigl\{x_j', x_j'' \mid 1 \le j \le n\bigr\}.\]

The objective function and constraints of \(P_4\) are obtained from the objective function and constraints of \(P_3\) by replacing every occurrence of \(x_j\) with \(x_j' - x_j''\) for all \(1 \le j \le n\) and by adding non-negativity constraints for all variables of \(P_4\). The resulting LP \(P_4\) is in canonical form.

The linear transformations \(\phi\) and \(\psi\) that establish the equivalence between \(P_3\) and \(P_4\) are defined as follows:

\[\phi(x_1, \ldots, x_n) = \bigl(x_1', x_1'', \ldots, x_n', x_n''\bigr),\]

where

\[\begin{aligned} x_j' &= \begin{cases} x_j & \text{if } x_j \ge 0\\ 0 & \text{if } x_j < 0 \end{cases}\\ x_j'' &= \begin{cases} 0 & \text{if } x_j \ge 0\\ -x_j & \text{if } x_j < 0 \end{cases} \end{aligned}\]

for all \(1 \le j \le n\), and

\[\psi\bigl(x_1', x_1'', \ldots, x_n', x_n''\bigr) = (x_1, \ldots, x_n),\]

where

\[x_j = x_j' - x_j''\]

for all \(1 \le j \le n\).

Given a feasible solution \(\bigl(\hat x_1, \ldots, \hat x_n\bigr)\) of \(P_3\), let \(\bigl(\hat x_1', \hat x_1'', \ldots, \hat x_n', \hat x_n''\bigr) = \phi\bigl(\hat x_1, \ldots, \hat x_n\bigr)\). By the definition of \(\phi\), all values \(\hat x_1', \hat x_1'', \ldots, \hat x_n', \hat x_n''\) are non-negative. Moreover, we have \(\hat x_j' - \hat x_j'' = \hat x_j\) for all \(1 \le j \le n\). Since \(\bigl(\hat x_1, \ldots, \hat x_n\bigr)\) satisfies all constraints of \(P_3\) and every occurrence of \(x_j\) in \(P_3\) is replaced with \(x_j' - x_j''\) in \(P_4\), for all \(1 \le j \le n\), \(\bigl(\hat x_1', \hat x_1'', \ldots, \hat x_n', \hat x_n''\bigr)\) satisfies all constraints of \(P_4\) and achieves the same objective function value as \(\bigl(\hat x_1, \ldots, \hat x_n\bigr)\).

Conversely, given a feasible solution \(\bigl(\hat x_1', \hat x_1'', \ldots, \hat x_n', \hat x_n''\bigr)\) of \(P_4\), let \(\bigl(\hat x_1, \ldots, \hat x_n\bigr) = \psi\bigl(\hat x_1', \hat x_1'', \ldots, \hat x_n', \hat x_n''\bigr)\). Then, once again, \(\hat x_j' - \hat x_j'' = \hat x_j\) for all \(1 \le j \le n\). Since \(\bigl(\hat x_1', \hat x_1'', \ldots, \hat x_n', \hat x_n''\bigr)\) satisfies all constraints of \(P_4\) and every occurrence of \(x_j\) in \(P_3\) is replaced with \(x_j' - x_j''\) in \(P_4\), for all \(1 \le j \le n\), \(\bigl(\hat x_1, \ldots, \hat x_n\bigr)\) satisfies all constraints of \(P_3\) and achieves the same objective function value as \(\bigl(\hat x_1', x_1'', \ldots, \hat x_n', x_n''\bigr)\).

Since \(\phi\) and \(\psi\) map feasible solutions to feasible solutions and preserve their objective function values, it follows that \(\phi\bigl(x_1, \ldots, x_n)\) is an optimal solution of \(P_4\) if \(\bigl(x_1, \ldots, x_n\bigr)\) is an optimal solution of \(P_3\), and \(\psi\bigl(x_1', x_1'', \ldots, x_n', x_n''\bigr)\) is an optimal solution of \(P_3\) if \(\bigl(x_1', x_1'', \ldots, x_n', x_n''\bigr)\) is an optimal solution of \(P_4\). Thus, \(P_3\) and \(P_4\) are equivalent. ▨