Function Composition

How else do mathematicians define functions? They build more complex functions from simpler ones. If we define

that's really the chaining of two functions: First, we apply a function that doubles the value of the function argument \(x\), and then we apply to the result another function that increases it by one. Our function \(f\) from the previous section takes care of doubling the value of \(x\). Let's define another function \(h\) that takes care of increasing the value of its argument by one:

or, in Haskell,

h :: Int -> Int

h x = x + 1

Given these two functions, we can define \(g\) as

or, in Haskell, as

g :: Int -> Int

g x = h (f x)

Here, we do need parentheses to indicate that we want to apply f to x and

then apply h to the result. If we had written h f x, then this would mean

that we apply h to two arguments, f and x, and this wouldn't compile

because h expects only a single argument.

Mathematicians have a shorter way of writing the composition of two functions. The function \(g\) defined as the composition of \(h\) and \(f\) is written as

defined exactly as above:

The key is that we have this composition operator that allows us to directly build new functions from existing ones by composing them, by applying one after the other.

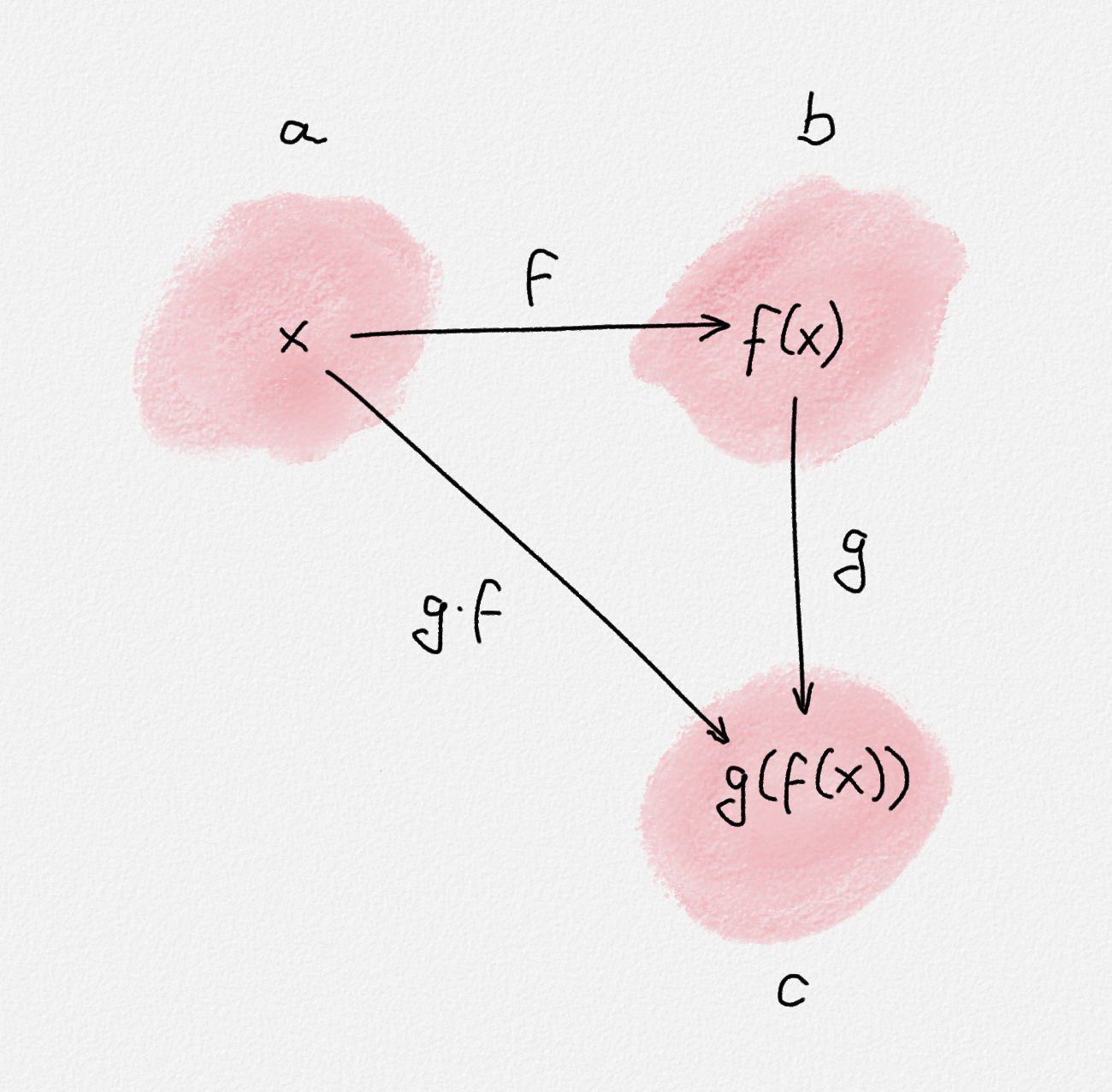

The composition \(g \cdot f\) of two functions \(f\) and \(g\) first maps its argument \(x\) to \(f(x)\) and then maps \(f(x)\) to \(g(f(x))\).

Haskell also has a function composition operator:

(.) :: (b -> c) -> (a -> b) -> (a -> c)

(g . f) x = g (f x)

Now, this definition of the composition operator in Haskell requires some

unpacking. We'll do that in a second. For now, let's believe that this is

exactly the equivalent of mathematics' function composition. Then we can

simply write our definition of our function g above as

g :: Int -> Int

g = h . f

As we will see, directly manipulating functions instead of explicitly referring to their arguments is a very powerful and expressive way to program, albeit very different from how you used to program in the past.

Back to our composition operator. First of all, let's look at the function signature

(.) :: (b -> c) -> (a -> b) -> (a -> c)

I suspect you have at least three issues with it: Why the parentheses around

the .? What are a, b and c? And what's with all the parentheses in

the function's type?

First let's talk about the parentheses around the .. In Haskell,

mathematical operators such as +, -, and so on are in fact plain old

functions. Indeed, the addition operator takes two number arguments and maps

them to a number. Looked at it this way, it's nothing but a two-argument

function. The only difference is that we don't write + 2 3 but 2 + 3. The

syntactic rule in Haskell is that

Functions whose names are composed of letters, digits, underscores, and apostrophes are "normal" functions, which should be written in prefix notation.

Like so: myFunction 1 2.

Two-argument functions whose names are composed of special characters are interpreted as infix operators and should be written in infix notation.

Like so: 2 + 3 or x >>= f. Yes, (>>=) is indeed a rather important infix

operator in Haskell which we will talk about much later in this book.

Now it turns out that we are allowed to use operators in prefix notation in Haskell, and we are allowed to use ordinary two-argument functions in infix notation. It just requires a bit of special syntax.

Instead of 2 + 3, we can also write (+) 2 3.

To use an operator in prefix notation, we need to enclose it in parentheses. The same notation applies when we want to use an operator by itself, without its arguments.

The latter is useful in type signatures, as above, and when passing an operator as an argument to some other function that expects a two-argument function as argument.

Functions are ordinary values in Haskell that can be passed as arguments to other functions and returned as the results of other functions.

That's a really powerful way to program once you get used to it.

In order to write an ordinary function in infix notation, you need to include it in backticks.

For example, there is the elem function that checks whether a given value

belongs to a list. Normally, we'd write elem x list, but writing x `elem`

list reads a bit more like English or even math: \(x \in \textit{list}\).

$ stack ghci

>>> 2 `elem` [1,2,3,4]

True

>>> 5 `elem` [1,2,3,4]

False

So that's the parenthesis around the .. Now what about a, b, and c.

That's another Haskell syntax rule.

Concrete values and concrete types (just another kind of value) start with uppercase letters.

For example, Int and Bool are concrete types, True and False are

concrete values of type Bool.

Variables start with lowercase letters.

In the type signature of our function composition operator, a, b, and c

are type variables. They can refer to any type!

With that, let's take apart that type signature. b -> c is the type of a

function that maps a value of type b to a value of type c. a -> b is the

type of a function that maps a value of type a to a value of type b. a ->

c is the type of a function that maps a value of type a to a value of type

c. If we compose a function f :: b -> c with a function g :: a -> b, we

expect to obtain a function f . g :: a -> c, because f . g first applies g

to its argument, that is, it expects a value of the same type expected by g,

type a. Applying g to this value produces a value of type b. That's

luckily exactly the argument type that f expects, so it is okay to apply f

to the result of g, and f produces a value of type c from it. And this is

exactly what our type signature says: (.) takes two arguments, a function of

type b -> c and a function of type a -> b, and it produces from them a

function of type a -> c, by composing its two arguments.

Finally, the definition of the composition of two functions matches exactly the definition from mathematics:

(f . g) x = f (g x)

Let me emphasize once more that a, b, and c in the type signature of (.)

are type variables, not concrete types. Consider three functions:

toInt :: Char -> Int

toDouble :: Int -> Double

toString :: Double -> String

Then we can construct the function toDouble . toInt. Since toInt has type

Char -> Int, it matches the type of the second argument of (.), which is

a -> b, by setting a = Char and b = Int. Similarly, toDouble, having

type Int -> Double, matches the type of the first argument of (.), which is

b -> c, if we set b = Int and c = Double. The only constraint that the

type signature of (.) imposes is that we choose the same type as the value of

the type variable b in both substitutions. Thankfully, that's the case here,

since we choose b = Int both to match the type of toInt to the type a -> b

and to match the type of toDouble to b -> c. The type of toDouble . toInt

is Char -> Double because we have a = Char and c = Double.

Similarly, we would be able to compose toString and toDouble to obtain a

function toString . toDouble of type Int -> String. What we can't do is

construct the function toString . toInt. That's because matching toInt to

the second argument of (.) would require that we set b = Int, while matching

toString to the first argument of (.) would require that we set

b = Double. But they're two different types, so we cannot simultaneously have

b = Int and b = Double. This is an important constraint because remember,

the function f . g first applies g to its argument and then applies f to

the result of g. This works only if the argument type of f and the result

type of g are the same.

If you've used generic programming in Java, Rust, Go, or C++ before, then type

variables are nothing but generic types. The difference is that to use a generic

type in Java, you'd have to write something like int myFunction<T>(T val),

whereas in Haskell, the syntax is more lightweight: myFunction :: t -> Int.

The argument type is generic by virtue of being a type variable. You'll soon

come to appreciate that polymorphic functions (Haskell's name for generic

functions) are everywhere in Haskell, and Haskell programmers tend to prefer to

write polymorphic functions wherever they can.

We digressed a bit. We were talking about how mathematicians define functions: simple functions can be defined directly. More complex functions can be defined by composing simpler functions. Let's look at a third way mathematicians use to define functions: case distinctions. As we will see, these three tools for building functions are all we really need to have an expressive and elegant programming language.