Green AI Research

Overview

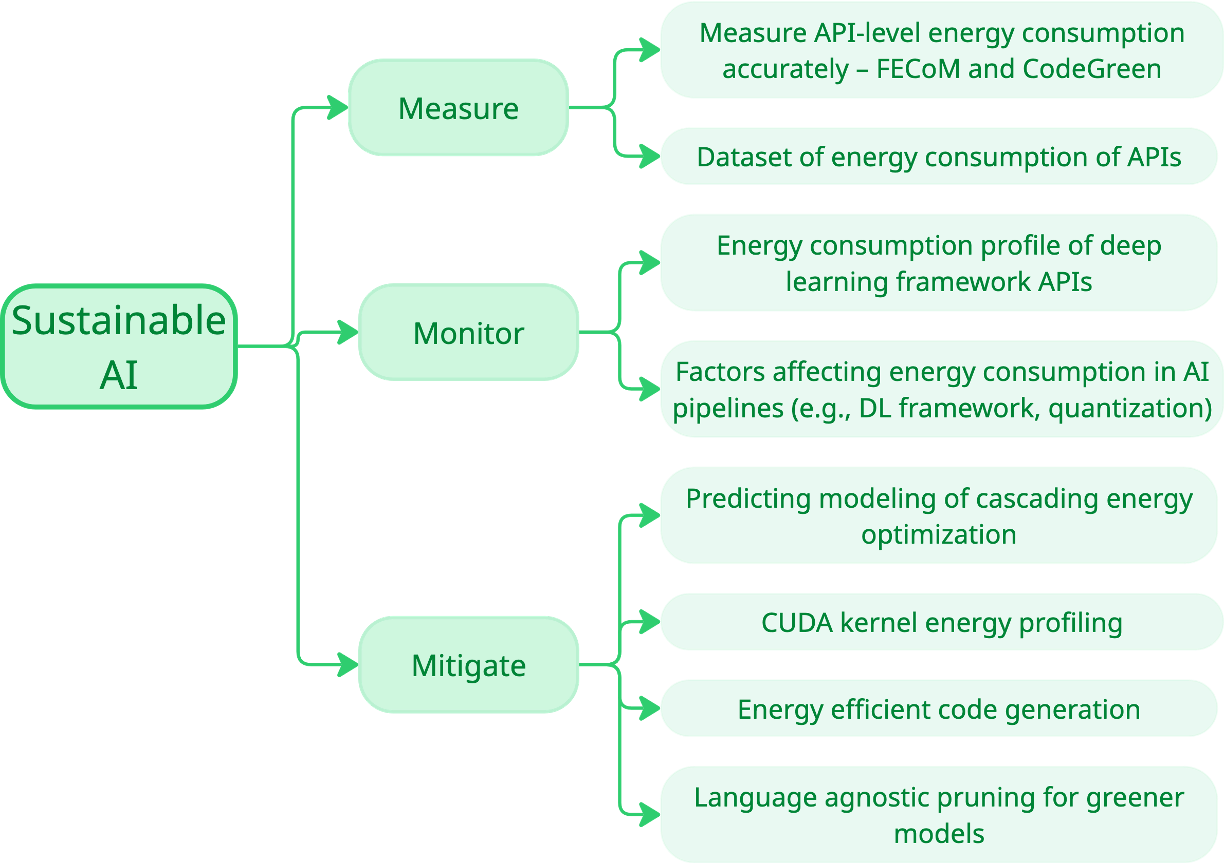

Optimizing computational methods and AI pipelines to achieve results with less power, offering greener ML models, and providing energy transparency.

This research addresses the critical challenge of energy consumption in AI systems applied to ocean and climate research. As AI models grow in complexity and scale, their computational demands and carbon footprint have become significant concerns. We develop innovative frameworks, tools, and methodologies to measure, analyze, and optimize energy consumption across the entire AI pipeline—from individual API calls to complete deep learning workflows.

Our research treats energy efficiency as a first-class design consideration in AI pipelines and workloads rather than an afterthought. We create practical tools, techniques, and methods that enable researchers and practitioners to:

- Measure energy consumption at various granularities (system, process, function, API, kernel level)

- Monitor energy profiles across different execution paths and optimization strategies

- Mitigate energy waste through informed design decisions and automated optimization

By combining fine-grained energy profiling, static code analysis, GPU kernel optimization, and systematic benchmarking, we empower the AI community to deploy sustainable, high-performance models that balance accuracy with environmental responsibility.
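As a rough illustration of the "Measure" step, energy over a code region can be estimated by integrating sampled power over time. The following is a minimal sketch under stated assumptions, not the implementation of any tool listed on this page: the function names `energy_joules` and `net_energy_joules` are hypothetical, and the power samples are assumed to come from some hardware power counter read at known timestamps.

```python
from typing import Sequence, Tuple

# One sample is (timestamp in seconds, instantaneous power in watts).
# How the samples are collected is outside this sketch (assumption).
Sample = Tuple[float, float]


def energy_joules(samples: Sequence[Sample]) -> float:
    """Integrate power over time with the trapezoidal rule (1 J = 1 W * 1 s)."""
    total = 0.0
    for (t0, p0), (t1, p1) in zip(samples, samples[1:]):
        total += 0.5 * (p0 + p1) * (t1 - t0)
    return total


def net_energy_joules(samples: Sequence[Sample], idle_power_w: float) -> float:
    """Subtract an idle baseline so only the workload's extra energy remains."""
    if len(samples) < 2:
        return 0.0
    duration = samples[-1][0] - samples[0][0]
    return energy_joules(samples) - idle_power_w * duration


if __name__ == "__main__":
    # Three hypothetical samples taken around a single function call.
    samples = [(0.0, 10.0), (1.0, 20.0), (2.0, 20.0)]
    print(energy_joules(samples))           # 35.0
    print(net_energy_joules(samples, 5.0))  # 25.0
```

Subtracting an idle baseline is one common way to attribute energy to a specific function or API call rather than to the whole machine; the sampling rate and the stability of the baseline both affect how trustworthy the result is at fine granularity.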

Team

Faculty Lead

Dr. Tushar Sharma - Assistant Professor, Dalhousie University

Dr. Sharma's research focuses on software code quality, refactoring, sustainable artificial intelligence (AI), and machine learning for software engineering (ML4SE). He earned his PhD from Athens University of Economics and Business (2019) and holds an MS in Computer Science from IIT Madras. His professional experience includes roles at Siemens Research (Charlotte, USA, 2019-2021) and Siemens Corporate Technology (Bangalore, India, 2008-2015). He co-authored Refactoring for Software Design Smells: Managing Technical Debt and two Oracle Java certification books. He founded and developed Designite, a software design quality assessment tool used worldwide. He is an IEEE Senior Member.

PhD Students

Saurabhsingh Rajput - PhD Candidate

Saurabhsingh specializes in energy-efficient AI pipelines and co-leads the CodeGreen project, which targets fine-grained energy profiling and optimization in traditional source code and AI-enabled applications. His research develops techniques to enhance the energy and computational efficiency of deep learning systems. He has published in top-tier venues including ACM TOSEM, ICSE, ICSA GREENS, and ACM MSR. He serves as a Junior PC member (MSR'24) and as a subreviewer for ICSE, ASE, FSE, SANER, SCAM, and ICPC. Prior to his PhD, he earned his B.Tech in Computer Science from Visvesvaraya National Institute of Technology, India, and worked at Fidelity Investments on enterprise-level applications.

Mootez Saad - PhD Candidate

Mootez earned his bachelor's degree in software engineering from South Mediterranean University, Tunisia. His PhD research focuses on the intersection of compute-efficient deep learning and software engineering, particularly creating methods that enable language models for code understanding and analysis in computationally constrained environments. His work on adaptive pruning techniques (ALPINE) demonstrates substantial reductions in computational overhead while maintaining model performance.

Master's Students

Mohammadjavad Mehditabar

Mohammadjavad received his bachelor's degree in Computer Engineering from the Iran University of Science and Technology, Tehran, Iran. He is currently pursuing a Master of Computer Science with the Software Maintenance and Analytics Research Team Lab at Dalhousie University, Halifax, Canada. His master's research focuses on analyzing the energy-consumption behavior of language models. He benchmarks large and frontier models to uncover their hidden energy costs across different tasks, supporting the development of greener and more sustainable AI. He also evaluates language models on their ability to generate energy-efficient code. In addition, he analyzes software code for energy efficiency by identifying energy-intensive anti-patterns and recommending more efficient solutions to prevent these patterns from occurring.

Collaborators

The following academic and industrial researchers collaborate with us on Green AI.

- Alex Brandt - Dalhousie University

- Vadim Elisseev - IBM Research, UK

- Maria Kechagia - National and Kapodistrian University of Athens, Greece

- Antonio Mastropaolo - William & Mary, USA

- José Antonio Hernández López - University of Murcia, Spain

- Federica Sarro - University College London, UK

- Daniel Varro - Linköping University, Sweden

Funded Projects

Sustainable Ocean AI

Transforming Climate Action (TCA) is one of the most ambitious research initiatives focused on understanding the ocean’s role in climate change, supported by $154M in funding from CFREF. Within this large program, Cluster 1.2, Transforming Climate Action using Artificial Intelligence (TCA-AI), advances new technologies and AI-driven methods to deepen our understanding of the ocean–climate–people nexus. Our work package, Sustainable Ocean AI, is one of seven work packages contributing to the overarching goals of Cluster 1.2.

Opportunities

Potential students (MSc/PhD/Undergraduate)

We welcome motivated students interested in exploring energy efficiency in AI-based systems. The specific topic and scope of the research will be tailored to your program requirements and individual interests. If you are comfortable with programming (Python, C++/CUDA), passionate about sustainable AI, and able to work independently with minimal guidance, feel free to reach out to the PI.

Postdoctoral researchers

We also welcome applications from highly motivated postdoctoral researchers interested in advancing state-of-the-art research in energy-efficient AI systems. Candidates with a strong publication record in software engineering, AI, software performance analysis, or related areas, and a clear interest in sustainability in AI, are encouraged to reach out to the PI.

Collaboration opportunities

We actively seek collaborations with industry partners such as cloud providers, AI companies, and hardware manufacturers working toward energy-efficient AI; with academic researchers who bring expertise in compilers, systems, high-performance computing, or green computing; and with open-source communities, particularly contributors to major deep learning frameworks including TensorFlow, PyTorch, JAX, and ONNX.

Developers and engineers can contribute by adopting our tools, providing targeted feedback, and advocating for sustainable software development practices. Industry representatives are invited to partner on research challenges related to improving software system energy efficiency. We welcome collaborative opportunities, particularly with support from agencies such as Mitacs that facilitate academic-industry partnerships. Funding agencies and technology foundations can advance our work through grants aimed at mitigating the climate impact of energy-intensive AI systems.

Publications

2025

Tu(r)ning AI Green: Exploring Energy Efficiency Cascading with Orthogonal Optimizations

Saurabhsingh Rajput, Mootez Saad, Tushar Sharma

IEEE Software (Minor Revision, 2025)

Preprint

Abstract

AI's exponential growth intensifies computational demands and energy challenges. While practitioners employ various optimization techniques (referred to as "knobs") to tune model efficiency, these are typically afterthoughts and reactive ad-hoc changes applied in isolation without understanding their combinatorial effects on energy efficiency. This paper emphasizes treating energy efficiency as a first-class citizen and as a fundamental design consideration for compute-intensive pipelines. The research shows that strategic selection across five AI pipeline phases (data, model, training, system, inference) creates cascading efficiency gains. Experimental validation demonstrates orthogonal combinations reduce energy consumption while preserving the original F1 score of non-optimized pipelines. This curated approach provides actionable frameworks for informed sustainable AI that balance efficiency, performance, and environmental responsibility.

Smart but Costly? Benchmarking LLMs on Functional Accuracy and Energy Efficiency

Mohammadjavad Mehditabar, Saurabhsingh Rajput, Antonio Mastropaolo, Tushar Sharma

Under Review

Preprint

Abstract

The rapid advancement of AI technologies and their accelerated adoption in software development necessitates a systematic evaluation of their environmental impact alongside functional correctness. While prior studies have examined sustainability in large language models, existing approaches lack systematic frameworks for evaluating accuracy-energy trade-offs in Code Language Models (CLMs). This paper presents BRACE, a framework to benchmark CLMs on a unified scale of energy efficiency and functional correctness. The study benchmarks 22 state-of-the-art models on code generation and summarization tasks, proposing two rating methods: Concentric Incremental Rating Circles (CIRC) and Observation to Expectation Rating (OTER). CIRC provides deterministic Euclidean-based rankings with static trade-offs that are robust to outliers, while OTER offers trend-aware evaluation with dynamic trade-offs that capture the complex correlation between energy and accuracy. These rating methods enable rating LLMs on a 1-5 scale reflecting their combined capabilities in terms of energy efficiency and functional correctness. The analysis reveals models generally perform better in code summarization tasks as they are not enforced to generate grammar-based and syntactically correct output. The proposed BRACE framework empowers practitioners to make evidence-based model selections that balance sustainability with task requirements.

An Adaptive Language-Agnostic Pruning Method for Greener Language Models for Code

Mootez Saad, José Antonio Hernández López, Boqi Chen, Daniel Varro, Tushar Sharma

ACM SIGSOFT International Symposium on Foundations of Software Engineering (FSE), 2025

Paper

Abstract

Language models of code have demonstrated remarkable performance across various software engineering and source code analysis tasks. However, their demanding computational resource requirements and consequential environmental footprint remain significant challenges. This work introduces ALPINE, an adaptive programming language-agnostic pruning technique designed to substantially reduce the computational overhead of these models. The proposed method offers a pluggable layer that can be integrated with all Transformer-based models. With ALPINE, input sequences undergo adaptive compression throughout the pipeline, reaching a size that is up to 3x less than their initial size, resulting in significantly reduced computational load. Experiments on two software engineering tasks (defect prediction and code clone detection) across three language models (CodeBERT, GraphCodeBERT, and UniXCoder) show that ALPINE achieves up to 50% reduction in FLOPs, 58.1% decrease in memory footprint, and 28.1% improvement in throughput on average. This led to a reduction in CO2 emissions by up to 44.85%. Importantly, it achieves a reduction in computation resources while maintaining up to 98.1% of the original predictive performance. These findings highlight the potential of ALPINE in making language models of code more resource-efficient and accessible while preserving their performance, contributing to the overall sustainability of their adoption in software development.

The Energy Flow Graph: Modeling Software Energy Consumption

Saurabhsingh Rajput, Tushar Sharma

Under Review

Abstract

The growing energy demands of computational systems necessitate a fundamental shift from performance-centric design to one that treats energy consumption as one of the primary design considerations. Current approaches treat energy consumption as an aggregate, deterministic property, overlooking the path-dependent nature of computation where different execution paths through the same code consume dramatically different energy. This work introduces the Energy Flow Graph (EFG), a formal model that represents computational processes as state-transition systems with energy costs for both states and transitions. EFG enables various applications in the software engineering realm, including static analysis of energy-optimal execution paths and providing a multiplicative cascade model that predicts combined optimization effects without exhaustive testing. Early experiments demonstrate EFG's versatility across domains: in software programs, it explains 31-44% energy variations through differential path activation, while in AI pipelines, it predicts optimization combinations within 5.1% of empirical measurements. The EFG transforms energy optimization from trial-and-error to systematic analysis, providing a common foundation for green software engineering across computational domains.

FlipFlop: A Static Analysis-based Energy Optimization Framework for GPU Kernels

Saurabhsingh Rajput, Alex Brandt, Vadim Elisseev, Tushar Sharma

Under Review

Abstract

Artificial Intelligence (AI) applications, such as Large Language Models, are primarily driven and executed by Graphics Processing Units (GPUs). These GPU programs (kernels) consume substantial amounts of energy, yet software developers often lack the hardware expertise and ad hoc knowledge required to optimize for power efficiency. This work proposes FlipFlop, a framework using static code analysis to predict energy consumption and recommend Pareto-optimal thread block configurations considering both power consumption and execution time. The framework requires no runtime execution and analyzes PTX code, a low-level instruction set for CUDA-enabled GPUs. It is validated across a diverse set of GPUs and kernels, including multi-head attention, convolution, and matrix multiplication. FlipFlop achieves 83% accuracy in identifying locally optimal energy-efficient configurations, while also minimizing developer effort by reducing the optimization search space by 93.4%. For multi-head attention kernels, it yields up to 79% energy savings and 106% throughput gains relative to NVIDIA's occupancy heuristic. By integrating static analysis with real-time monitoring and providing explainable optimization guidance, FlipFlop empowers developers to create sustainable, high-performance GPU software which minimizes environmental and computational costs.

2024

Enhancing Energy-Awareness in Deep Learning through Fine-Grained Energy Measurement

Saurabhsingh Rajput, Tim Widmayer, Ziyan Shang, Maria Kechagia, Federica Sarro, Tushar Sharma

ACM Transactions on Software Engineering and Methodology (TOSEM), 2024

Paper | Tool: FECoM

Abstract

With the increasing usage, scale, and complexity of Deep Learning (DL) models, their rapidly growing energy consumption has become a critical concern. Promoting green development and energy awareness at different granularities is the need of the hour to limit carbon emissions of DL systems. However, the lack of standard and repeatable tools to accurately measure and optimize energy consumption at fine granularity (e.g., at the API level) hinders progress in this area. This paper introduces FECoM (Fine-grained Energy Consumption Meter), a framework for fine-grained DL energy consumption measurement. FECoM enables researchers and developers to profile DL APIs from energy perspective. FECoM addresses the challenges of fine-grained energy measurement using static instrumentation while considering factors such as computational load and temperature stability. The study assesses FECoM's capability for fine-grained energy measurement for one of the most popular open-source DL frameworks, namely TensorFlow. Using FECoM, the research investigates the impact of parameter size and execution time on energy consumption, enriching understanding of TensorFlow APIs' energy profiles. Furthermore, the work elaborates on the considerations and challenges while designing and implementing a fine-grained energy measurement tool. This work facilitates further advances in DL energy measurement and the development of energy-aware practices for DL systems.

Greenlight: Highlighting TensorFlow APIs Energy Footprint

Saurabhsingh Rajput, Maria Kechagia, Federica Sarro, Tushar Sharma

ACM/IEEE International Conference on Mining Software Repositories (MSR), 2024

Paper | Dataset

Abstract

Deep learning (DL) models are being widely deployed in real-world applications, but their usage remains computationally intensive and energy-hungry. While prior work has examined model-level energy usage, the energy footprint of the DL frameworks, such as TensorFlow and PyTorch, used to train and build these models, has not been thoroughly studied. This work presents Greenlight, a large-scale dataset containing fine-grained energy profiling information of 1284 TensorFlow API calls. A command line tool called CodeGreen was developed to curate such a dataset. CodeGreen is based on the previously proposed framework FECoM, which employs static analysis and code instrumentation to isolate invocations of TensorFlow operations and measure their energy consumption precisely. By executing API calls on representative workloads and measuring the consumed energy, detailed energy profiles for the APIs are constructed. Several factors, such as input data size and the type of operation, significantly impact energy footprints. Greenlight provides a ground-truth dataset capturing energy consumption along with relevant factors such as input parameter size to take the first step towards optimization of energy-intensive TensorFlow code. The Greenlight dataset opens up new research directions such as predicting API energy consumption, automated optimization, modeling efficiency trade-offs, and empirical studies into energy-aware DL system design.

Benchmarking Emerging Deep Learning Quantization Methods for Energy Efficiency

Saurabhsingh Rajput, Tushar Sharma

IEEE International Conference on Software Architecture (ICSA) - GREENS Workshop, 2024

Paper

Abstract

In the era of generative artificial intelligence (AI), the quest for energy-efficient AI models is increasing. The increasing size of recent AI models has led to quantization techniques that reduce large models' computing and memory requirements. This study aims to compare the energy consumption of five quantization methods: Gradient-based Post-Training Quantization (GPTQ), Activation-aware Weight Quantization (AWQ), GPT-Generated Model Language (GGML), GPT-Generated Unified Format (GGUF), and Bits and Bytes (BNB). The research benchmarks and analyzes the energy efficiency of these commonly used quantization methods during inference. This preliminary exploration found that GGML and its successor GGUF were the most energy-efficient quantization methods. The findings reveal significant variability in energy profiles across methods, challenging the notion that lower precision universally improves efficiency. The results underscore the need to benchmark quantization techniques from an energy perspective beyond just model compression. The findings guide the selection of models using quantization techniques and the development of new quantization techniques that prioritize energy efficiency, potentially leading to more environmentally friendly AI deployments.

2022

Green AI: Do Deep Learning Frameworks Have Different Costs?

Stefanos Georgiou, Maria Kechagia, Tushar Sharma, Federica Sarro, Ying Zou

ACM/IEEE International Conference on Software Engineering (ICSE), 2022

Paper

Abstract

The use of Artificial Intelligence (AI), and more specifically of Deep Learning (DL), in modern software systems, is nowadays widespread and continues to grow. At the same time, its usage is energy demanding and contributes to the increased CO2 emissions, and has a great financial cost as well. Even though there are many studies that examine the capabilities of DL, only a few focus on its green aspects, such as energy consumption. This paper aims at raising awareness of the costs incurred when using different DL frameworks. To this end, a thorough empirical study is performed to measure and compare the energy consumption and run-time performance of six different DL models written in the two most popular DL frameworks, namely PyTorch and TensorFlow. A well-known benchmark of DL models, DeepLearningExamples created by NVIDIA, is used to compare both the training and inference costs of DL. Finally, the functions of these frameworks that took most of the time to execute in the experiments are manually investigated. The results of the empirical study reveal that there is a statistically significant difference between the cost incurred by the two DL frameworks in 94% of the cases studied. While TensorFlow achieves significantly better energy and run-time performance than PyTorch with large effect sizes in 100% of the cases for the training phase, PyTorch instead exhibits significantly better energy and run-time performance than TensorFlow in the inference phase for 66% of the cases, always with large effect sizes. Such a large difference in performance costs does not, however, seem to affect the accuracy of the models produced, as both frameworks achieve comparable scores under the same configurations. Manual analysis of the documentation and source code of the functions examined reveals that such a difference in performance costs is under-documented in these frameworks. This suggests that developers need to improve the documentation of their DL frameworks, the source code of the functions used in these frameworks, as well as to enhance existing DL algorithms.

Talks, Presentations, and Posters

Conference Presentations and Talks

- Towards Greener AI: Developing Methods for Energy-Efficient AI Systems

  Saurabhsingh Rajput

  Conference on Software Engineering Research (CSER), 2025

- Enhancing Energy-Awareness in Deep Learning through Fine-Grained Energy Measurement

  Saurabhsingh Rajput

  IEEE International Conference on Software Engineering (ICSE) - Journal First Presentations, 2025

- Full-spectrum Energy Profiling: Methods, Challenges, and Applications

  Saurabhsingh Rajput

  IEEE International Conference on Collaborative Advances in Software and COmputiNg (CASCON), Tutorial, 2025

- Ignorance is Green: An Adaptive Language-Agnostic Pruning Method for Greener Language Models for Code

  Mootez Saad

  Conference on Software Engineering Research (CSER), 2025

- An Adaptive Language-Agnostic Pruning Method for Greener Language Models for Code

  Mootez Saad

  ACM SIGSOFT International Symposium on Foundations of Software Engineering (FSE), 2025

- Energy Smell Taxonomy & Identification

  Mohammadjavad Mehditabar

  Conference on Software Engineering Research (CSER), 2025

Poster Presentations

- Towards Energy-Efficient CUDA Kernels: A Predictive Modeling Approach

  Saurabhsingh Rajput

  Dalhousie AI Symposium, 2024

- Towards Energy-Efficient CUDA Kernels: A Predictive Modeling Approach

  Saurabhsingh Rajput

  IEEE International Conference on Collaborative Advances in Software and COmputiNg (CASCON), Poster, 2024

- Investigating the Energy Efficiency of Popular Quantization Techniques for AI Models

  Saurabhsingh Rajput

  SEMLA Symposium, 2024

- Towards Predictive Power Modeling of GPU kernels

  Saurabhsingh Rajput

  Smart Energy Conference, Halifax, 2024

Contact

Dr. Tushar Sharma

Assistant Professor, Faculty of Computer Science

Dalhousie University, Halifax, NS, Canada

Email: tushar@dal.ca

Website: https://tusharma.in | SMART Lab