
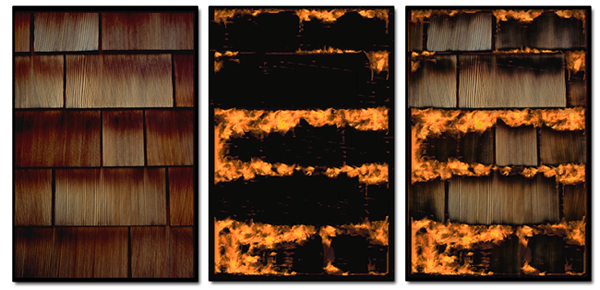

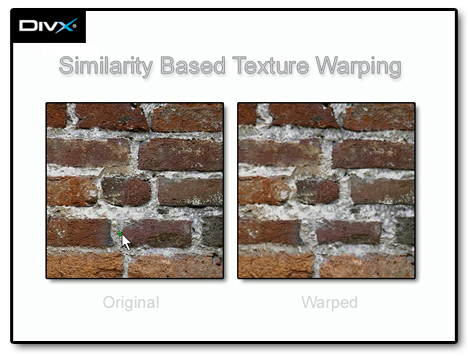

Image editing software is often characterized by a seemingly endless array of toolbars, filters, transformations and layers. Recently, however, a countertrend has emerged in image editing that aims to reduce the user's workload through semi-automation. This alternative style of interaction has been made possible by advances in directed texture synthesis and computer vision. In this context, we have developed a texture editing system that allows complex operations to be performed on images with minimal user interaction. It achieves this by exploiting the inherent self-similarity of image textures to replicate an intended manipulation globally. Inspired by the recent successes of hierarchical approaches to texture synthesis, our method uses multi-scale neighborhoods to assess the similarity of pixels within a texture. Neighborhood matching is not, however, used to generate new instances of a texture. Instead, we locate similar neighborhoods in order to replicate editing operations on the original texture itself, thereby creating a fundamentally new texture. This general approach is applied to texture painting, cloning and warping. These global operations are performed interactively, most often directed by a single mouse movement. Recent enhancements to our system include user-controlled sharpness, Boolean similarity expressions and the adaptive synthesis of cloning textures.
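The core idea of replicating an edit across similar pixels can be illustrated with a minimal sketch. It is not the system's actual implementation: it assumes a grayscale image stored as a list of rows, a simple 2x2 averaging pyramid, a mean squared difference as the similarity measure, and a fixed user-chosen threshold. All function names and parameters (`build_pyramid`, `features`, `similar_mask`, `paint_similar`, `threshold`) are hypothetical.

```python
def downsample(img):
    """Halve the resolution by averaging 2x2 blocks (a crude pyramid level)."""
    h, w = len(img) // 2, len(img[0]) // 2
    return [[(img[2 * y][2 * x] + img[2 * y][2 * x + 1]
              + img[2 * y + 1][2 * x] + img[2 * y + 1][2 * x + 1]) / 4.0
             for x in range(w)] for y in range(h)]


def build_pyramid(img, levels=2):
    """Return [img, half-size, quarter-size, ...] with up to `levels` entries."""
    pyramid = [img]
    for _ in range(levels - 1):
        if len(pyramid[-1]) < 2 or len(pyramid[-1][0]) < 2:
            break  # too small to downsample further
        pyramid.append(downsample(pyramid[-1]))
    return pyramid


def neighborhood(img, y, x, radius=1):
    """Flattened (2r+1)^2 window around (y, x); coordinates clamp at borders."""
    h, w = len(img), len(img[0])
    return [img[min(max(y + dy, 0), h - 1)][min(max(x + dx, 0), w - 1)]
            for dy in range(-radius, radius + 1)
            for dx in range(-radius, radius + 1)]


def features(pyramid, y, x, radius=1):
    """Concatenate the neighborhoods of pixel (y, x) across all pyramid levels."""
    vec = []
    for level, img in enumerate(pyramid):
        # A pixel at full resolution maps to (y >> level, x >> level) at `level`.
        vec.extend(neighborhood(img, y >> level, x >> level, radius))
    return vec


def similar_mask(img, seed, threshold, levels=2, radius=1):
    """Mark every pixel whose multi-scale neighborhood resembles the seed's."""
    pyramid = build_pyramid(img, levels)
    ref = features(pyramid, seed[0], seed[1], radius)
    mask = []
    for y in range(len(img)):
        row = []
        for x in range(len(img[0])):
            vec = features(pyramid, y, x, radius)
            dist = sum((a - b) ** 2 for a, b in zip(vec, ref)) / len(ref)
            row.append(dist <= threshold)
        mask.append(row)
    return mask


def paint_similar(img, seed, value, threshold, levels=2, radius=1):
    """Return a copy of img with `value` painted on all seed-like pixels."""
    mask = similar_mask(img, seed, threshold, levels, radius)
    return [[value if m else p for p, m in zip(img_row, mask_row)]
            for img_row, mask_row in zip(img, mask)]


# Usage: vertical stripes; one "click" on a bright stripe pixel repaints
# other pixels whose surroundings look the same.
stripes = [[x % 2 for x in range(8)] for _ in range(8)]
painted = paint_similar(stripes, seed=(4, 3), value=9, threshold=0.01)
```

In this sketch a single seed pixel stands in for the user's mouse interaction, and the threshold plays the role of a user-adjustable similarity tolerance. Pixels near the image border may fail to match because the clamped window differs from an interior one; a real system would need a more careful border policy.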

