|

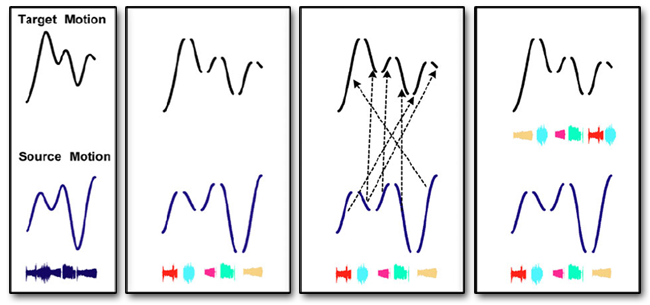

The automatic generation of audio has been explored both as artifact in itself and for the accompaniment of existing animations. The most recent work automatically generates soundtracks for input animation based on existing animation soundtrack. This technique can greatly simplify the production of soundtracks in computer animation and video by re-targeting existing soundtracks. A segment of source audio is used to train a statistical model which is then used to generate variants of the original audio to fit particular constraints. These constraints can either be specified explicitly by the user in the form of large-scale properties of the sound texture, or determined automatically and semi-automatically by matching similar motion events in a source animation to those in the target animation. Earlier work explored connections between music and mathematics. MWSCCS is a concurrent stochastic process algebra for musical modeling and generative composition. This allows the creation music directly from mathematical processes.

Publications

Sample Video

|