
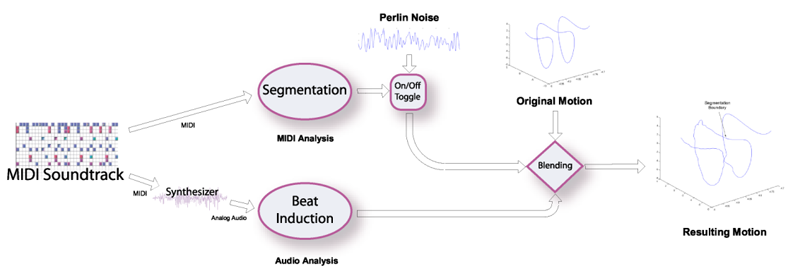

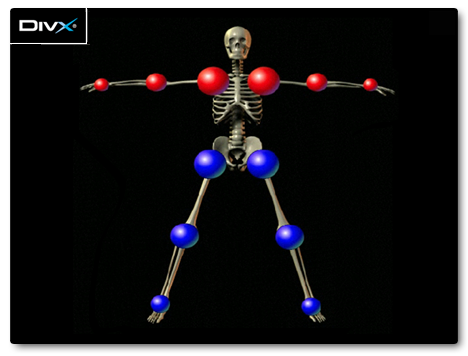

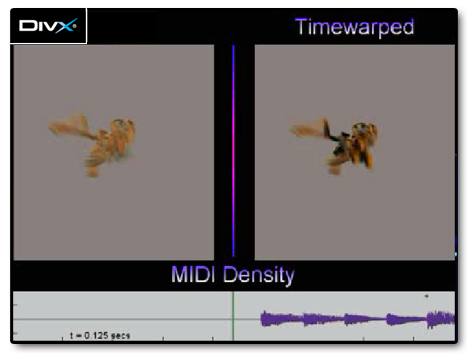

Two research projects have been undertaken in the area of motion editing. The first is a framework for synchronising motion curves to music in computer animation: motions are locally modified using perceptual cues extracted from the music. The key to this approach is the use of standard music analysis techniques on complementary MIDI and audio representations of the same soundtrack. The second project supports replicated editing of human movement contained in a motion capture sequence or a database of sequences. To this end, we rapidly retrieve perceptually similar occurrences of a particular motion in a long motion capture sequence or an unstructured motion capture database. The user can then replicate editing operations with minimal input: one or more editing operations applied to a given motion are propagated to all similar matching motions.
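As a rough illustration of the replicated-editing idea, the sketch below finds windows of a single motion curve that resemble a query motion and applies the same per-frame edit to each match. It is a minimal sketch under stated assumptions, not the project's implementation: the curve is a single 1-D joint-angle channel, a plain RMS distance stands in for the perceptual similarity measure, and the names `find_similar` and `replicate_edit` are hypothetical.

```python
from math import sqrt


def find_similar(sequence, query, threshold):
    """Return start indices of non-overlapping windows close to `query`.

    Assumption: RMS distance is used as a simple stand-in for a
    perceptual similarity measure.
    """
    n, m = len(sequence), len(query)
    matches = []
    i = 0
    while i + m <= n:
        window = sequence[i:i + m]
        dist = sqrt(sum((a - b) ** 2 for a, b in zip(window, query)) / m)
        if dist <= threshold:
            matches.append(i)
            i += m  # skip past this match so matches do not overlap
        else:
            i += 1
    return matches


def replicate_edit(sequence, matches, edit):
    """Add the per-frame offsets in `edit` to every matched window."""
    edited = list(sequence)
    for start in matches:
        for k, delta in enumerate(edit):
            edited[start + k] += delta
    return edited


# Hypothetical joint-angle curve containing the same gesture twice.
curve = [0.0, 0.0, 1.0, 2.0, 1.0, 0.0, 0.0, 0.0, 1.0, 2.0, 1.0, 0.0, 0.0]
query = curve[2:5]                      # the motion the user selects
matches = find_similar(curve, query, threshold=0.1)
edited = replicate_edit(curve, matches, edit=[0.0, 0.5, 0.0])
```

Here the user edits one occurrence (raising its peak by 0.5) and the same offset is applied to every retrieved match. A real system would work on full skeletal poses and a far more robust similarity measure.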

Publications

Video 1

Video 2
